Hi, I am testing a single-layer BiLSTM. My expectation is that it should learn.

This is the model:

    import torch
    import torch.nn as nn


    class LSTMModel(nn.Module):
        def __init__(self, input_dim, hidden_dim, layer_dim, output_dim):
            super(LSTMModel, self).__init__()
            self.hidden_dim = hidden_dim
            self.directions = 2  # bidirectional: forward + backward
            self.layer_dim = layer_dim
            self.lstm = nn.LSTM(input_dim, hidden_dim, layer_dim, batch_first=True, bidirectional=True)
            self.fc = nn.Linear(hidden_dim, output_dim)

        def forward(self, x):
            batch, seq_len, input_size = x.shape
            # Zero initial hidden and cell states, one per layer and direction
            h0 = torch.zeros(self.layer_dim*self.directions, batch, self.hidden_dim)
            c0 = torch.zeros(self.layer_dim*self.directions, batch, self.hidden_dim)
            out, (hn, cn) = self.lstm(x, (h0, c0))
            # Split the concatenated features into (forward, backward)
            out = out.view(batch, seq_len, self.directions, self.hidden_dim)
            fwd = out[:, :, 0, :]
            bwd = out[:, :, 1, :]
            # Sum the two directions, then project every time step
            out = fwd + bwd
            out = self.fc(out)
            return out
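For reference, a quick way to check that the `view` in `forward` really separates the two directions could look like the sketch below. The sizes are made up for the check and are not taken from the notebook; it only relies on the documented layout of `nn.LSTM`, where the forward direction ends at the last time step and the backward direction ends at the first.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical sizes, chosen only for this check (not from the notebook).
batch, seq_len, input_dim, hidden_dim = 4, 7, 3, 5

lstm = nn.LSTM(input_dim, hidden_dim, num_layers=1,
               batch_first=True, bidirectional=True)
x = torch.randn(batch, seq_len, input_dim)

with torch.no_grad():
    # Initial states default to zeros when they are not passed in.
    out, (hn, cn) = lstm(x)

# Same split as in forward(): (batch, seq_len, directions, hidden_dim)
split = out.view(batch, seq_len, 2, hidden_dim)
fwd, bwd = split[:, :, 0, :], split[:, :, 1, :]

# hn[0] is the final forward state, hn[1] the final backward state.
fwd_ok = torch.allclose(fwd[:, -1, :], hn[0], atol=1e-6)
bwd_ok = torch.allclose(bwd[:, 0, :], hn[1], atol=1e-6)
print(fwd_ok, bwd_ok)
```

If both flags hold, the split itself is consistent with the layer's output layout, so the directions are not being mixed up at that step.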

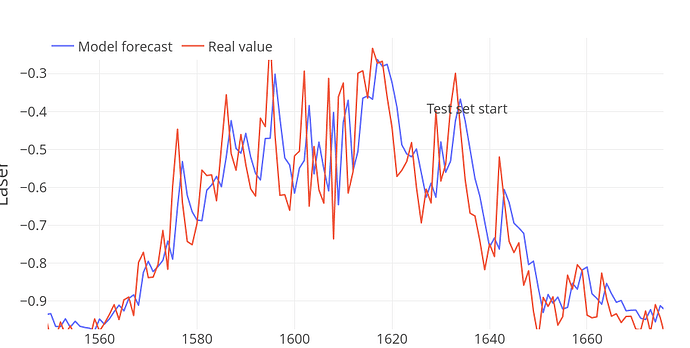

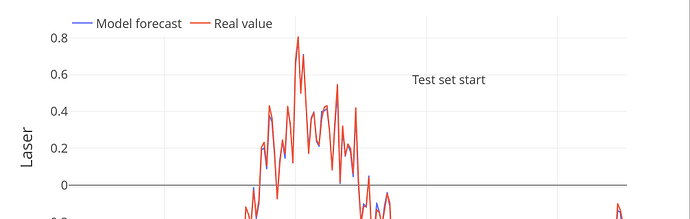

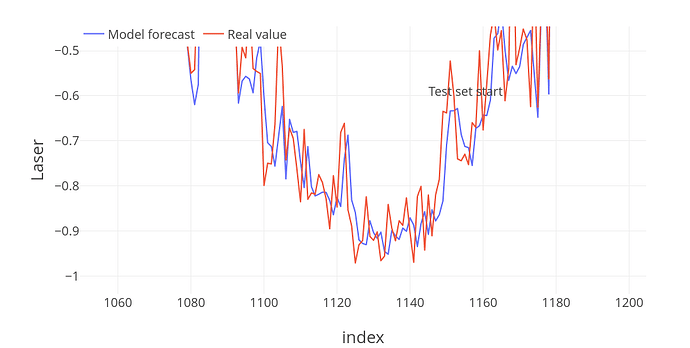

The result is quite disappointing: it tries to follow the last step.

What is wrong?

The notebook is available here: