I have been working on this project for over a month, but I still haven't been able to make it work, so I need help.

It basically applies the probabilistic UNet paper to the leaf segmentation dataset.

Given an image, I want to output one instance at a time by sampling from a distribution.

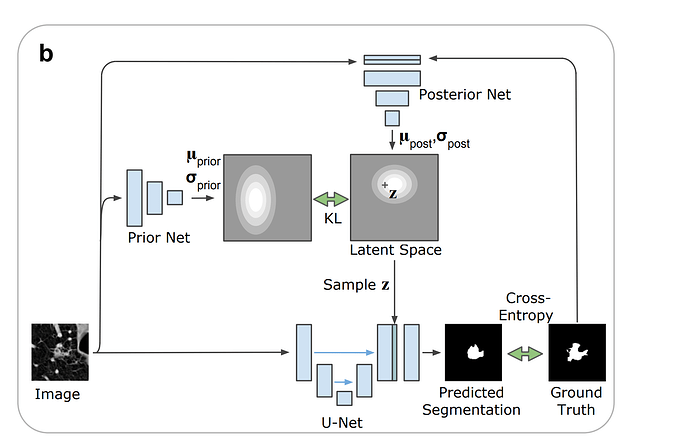

The probabilistic UNet architecture is shown below.

The architecture has two loss components: the KL divergence and the reconstruction loss. When I train it, the KL loss goes to zero, but the reconstruction loss does not behave the way I want.

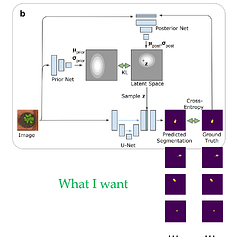
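For reference, here is a minimal sketch of how the two loss terms combine. It assumes, as in the probabilistic UNet paper, a diagonal-Gaussian posterior q(z | image, mask) and prior p(z | image), and it assumes a per-pixel binary cross-entropy for the reconstruction. The function names and the flat-list inputs are hypothetical and chosen for readability; a real implementation would operate on framework tensors.

```python
import math

def kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p):
    """KL(q || p) between two diagonal Gaussians, summed over latent dimensions."""
    kl = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        var_q, var_p = math.exp(lq), math.exp(lp)
        kl += 0.5 * (lp - lq + (var_q + (mq - mp) ** 2) / var_p - 1.0)
    return kl

def bce_sum(probs, targets, eps=1e-7):
    """Binary cross-entropy summed over pixels (flattened lists of equal length)."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total -= t * math.log(p) + (1.0 - t) * math.log(1.0 - p)
    return total

def prob_unet_loss(probs, targets, mu_q, logvar_q, mu_p, logvar_p, beta=1.0):
    """Reconstruction term plus beta-weighted KL(posterior || prior)."""
    rec = bce_sum(probs, targets)
    kl = kl_diag_gaussians(mu_q, logvar_q, mu_p, logvar_p)
    return rec + beta * kl, rec, kl
```

Here the reconstruction term is summed over pixels while the KL term is summed over a small number of latent dimensions; switching the reconstruction to a per-pixel mean would shrink it relative to the KL term by a factor equal to the pixel count.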

What I want:

- Sample a noise vector

- Get one instance
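To make the intended sampling step concrete: in the probabilistic UNet, z is drawn from the posterior during training and from the prior at test time, then broadcast over the spatial grid and concatenated with the last UNet feature map before a few 1x1 convolutions. The sketch below shows only the reparameterised sampling and the broadcasting, using nested Python lists; `sample_latent` and `concat_latent` are hypothetical names, and the toy prior and feature map are made-up values.

```python
import math
import random

def sample_latent(mu, logvar, rng):
    """Reparameterised sample z = mu + sigma * eps with eps ~ N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]

def concat_latent(features, z):
    """Tile each latent value over the H x W grid and append it as a channel.

    features: nested list shaped [C][H][W] (last UNet feature map)
    returns:  nested list shaped [C + len(z)][H][W]
    """
    h, w = len(features[0]), len(features[0][0])
    tiled = [[[zi] * w for _ in range(h)] for zi in z]
    return features + tiled

# Usage: draw two latent samples from a toy prior for the same image.
rng = random.Random(0)
prior_mu, prior_logvar = [0.0, 0.0], [0.0, 0.0]
features = [[[0.1, 0.2], [0.3, 0.4]]]  # C=1, H=2, W=2
z1 = sample_latent(prior_mu, prior_logvar, rng)
z2 = sample_latent(prior_mu, prior_logvar, rng)
x1 = concat_latent(features, z1)  # shape [3][2][2]
```

If the layers after this concatenation learn to ignore the latent channels, every sample yields the same mask, no matter how different z1 and z2 are.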

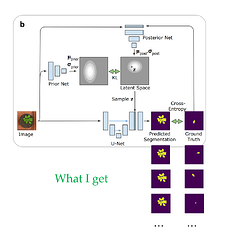

What actually happens is that the noise is ignored, and the network behaves like a semantic segmentation model. Basically, the RGB image and all of its different instances are mapped to the same latent code. I have tried scheduling the KL weight (beta) and even removing the KL term entirely, but the noise is still ignored.
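For reference, "beta scheduling" here means ramping the weight on the KL term during training. Below is a minimal sketch of two common variants: a linear warm-up and a cyclical schedule (cyclical annealing, a schedule proposed for VAEs to counter KL vanishing). The function names and default values are illustrative assumptions, not the exact settings from my runs.

```python
def linear_beta(step, warmup_steps, beta_max=1.0):
    """Ramp beta linearly from 0 to beta_max over warmup_steps, then hold it."""
    if warmup_steps <= 0:
        return beta_max
    return beta_max * min(1.0, step / warmup_steps)

def cyclical_beta(step, cycle_len, ramp_fraction=0.5, beta_max=1.0):
    """Repeat a ramp every cycle_len steps: rise over the first ramp_fraction
    of each cycle, then hold at beta_max until the cycle restarts."""
    pos = (step % cycle_len) / cycle_len
    return beta_max * min(1.0, pos / ramp_fraction)

# Usage: beta at a few sample steps for each schedule.
linear = [linear_beta(s, warmup_steps=100) for s in (0, 50, 100, 200)]
cyclic = [cyclical_beta(s, cycle_len=100) for s in (0, 25, 75, 100)]
```

With beta fixed at 0, either schedule reduces the objective to the reconstruction term alone, which is the same as removing the KL term.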

Can someone be kind enough to review my code here or explain why this approach might not work?

Thank you.