Hello all,

I'm currently trying to deploy my model in a real environment. The model is a Faster R-CNN-based object recognition model, the bottom-up attention model proposed by Anderson et al. It is implemented with Detectron.

My first attempt was a web service (Flask plus Redis Queue). It works, but connection and transmission overhead causes delays, so I am looking for a more efficient solution.

My second attempt is to export the PyTorch model and use it in a Java application via DJL or DL4J. For that, I first need to export the model as an ONNX graph. Here is what I have done so far:

```python
import onnxruntime as ort
import numpy as np
import torch
import onnx
import cv2
from detectron2.data import transforms as T  # assumed source of apply_transform_gens

# BoAModel, image_path and transform_gen are defined elsewhere in my project.

# Initialize model with checkpoint
model = BoAModel()
model.eval()

# Load image
cv_image = cv2.imread(image_path)
h, w = cv_image.shape[:2]  # original image height and width

# Transformation: transform_gen is used to resize the image
image, transforms = T.apply_transform_gens(transform_gen, cv_image)

# Model input: CHW float image tensor and the resize scale factor
dataset_dict = [
    torch.as_tensor(image.transpose(2, 0, 1).astype("float32"), device="cpu"),
    torch.as_tensor([float(image.shape[0]) / float(h)], device="cpu"),
]

# Export ONNX
model_onnx_path = "torch_model_boa_jit_torch1.5.1.onnx"
batch_size = 1

torch.onnx.export(
    model=model,                # model to be exported
    args=dataset_dict,          # model input
    f=model_onnx_path,          # where to save the model
    export_params=True,         # store the trained parameter weights inside the model file
    opset_version=11,           # the ONNX opset version to export the model to
    do_constant_folding=False,  # whether to execute constant folding for optimization
    input_names=["input"],      # the model's input names
    output_names=["output"],    # the model's output names
    dynamic_axes={"input": {0: "batch_size"},
                  "output": {0: "batch_size"}},
    verbose=True,
)
```
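For reference, the `dynamic_axes` entries index the dimensions of the example input passed as `args`. Here the traced image is `image.transpose(2, 0, 1)`, a CHW tensor without a batch dimension, so `{0: 'batch_size'}` would mark the channel axis, not a batch axis, as dynamic. The helper below is hypothetical (it is not part of `torch.onnx`) and only illustrates which dimension receives the symbolic name; the 800x1200 image size is made up.

```python
from typing import Dict, List, Sequence, Union


def label_dynamic_axes(example_shape: Sequence[int],
                       axes: Dict[int, str]) -> List[Union[int, str]]:
    """Replace the dimensions listed in `axes` by their symbolic names.

    Hypothetical illustration of how a dynamic_axes entry such as
    {0: "batch_size"} applies to the shape of the example input used for tracing.
    """
    return [axes.get(i, dim) for i, dim in enumerate(example_shape)]


# CHW input, as produced by image.transpose(2, 0, 1) (image size is made up)
print(label_dynamic_axes((3, 800, 1200), {0: "batch_size"}))
# ['batch_size', 800, 1200]: the channel axis is the one marked dynamic

# NCHW input with an explicit batch dimension
print(label_dynamic_axes((1, 3, 800, 1200), {0: "batch_size"}))
# ['batch_size', 3, 800, 1200]
```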

The export produced a couple of warnings, which I have already looked up; as far as I can tell, they are not critical in my case. After exporting, I ran a sanity check:

```python
# Load the ONNX model
onnx_model = onnx.load(model_onnx_path)

# Check that the model is well formed
onnx.checker.check_model(onnx_model)

# Print a human-readable representation of the graph
print(onnx.helper.printable_graph(onnx_model.graph))
```

So far so good. Next, I tried to load the model in ONNX Runtime with the following code:

```python
# Session options (for optimizing performance)
sess_options = ort.SessionOptions()
# sess_options.intra_op_num_threads = 24
# ...

ort_session = ort.InferenceSession(model_onnx_path, sess_options=sess_options)


def to_numpy(tensor):
    return tensor.detach().cpu().numpy() if tensor.requires_grad else tensor.cpu().numpy()


# Compute the ONNX Runtime prediction, using the same data the PyTorch model handles well.
# ONNX Runtime expects one NumPy array per graph input; this assumes the exported
# graph has its inputs (image, scale factor) in the same order as dataset_dict.
ort_inputs = {
    inp.name: to_numpy(tensor)
    for inp, tensor in zip(ort_session.get_inputs(), dataset_dict)
}

ort_outs = ort_session.run(None, ort_inputs)
print("ort_outs", ort_outs)
```

Then I got an ONNX Runtime error at the line `ort.InferenceSession(model_onnx_path, ...)`:

```text
onnxruntime.capi.onnxruntime_pybind11_state.Fail: [ONNXRuntimeError] : 1 : FAIL : Load model from torch_model_boa_jit_torch1.5.1.onnx failed:Node (Gather_346) Op (Gather) [ShapeInferenceError] axis must be in [-r, r-1]
```

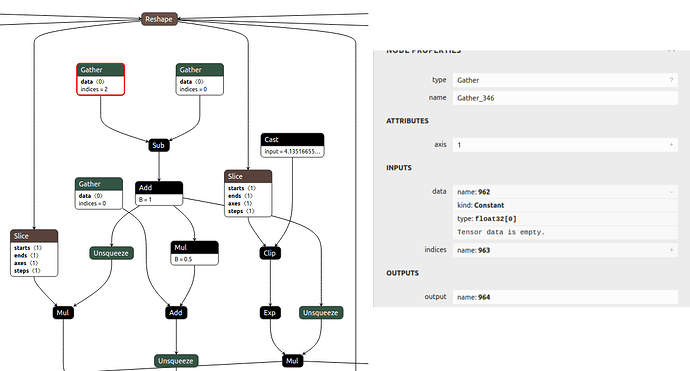

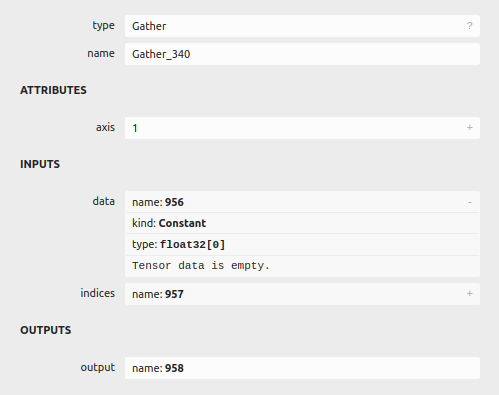

As the error shows, there was a problem at node Gather_346, it is shown in the following figure:

I'm not sure whether something already went wrong at this step (e.g., the tensor data shown in the figure is empty). I checked the verbose export log: this Gather operation comes from `box_regression.py`, at the line `widths = boxes[:, 2] - boxes[:, 0] + OFFSET`, where the box predictions are computed from the proposed regions and anchors:

```text
# torch.onnx.export log
%964 : Float(22800) = onnx::Gather[axis=1](%962, %963) # /media/WorkSpace/Development_Repository/Working/models/boa/box_regression.py:169:0
%965 : Float(0) = onnx::Constant[value=[ CPUFloatType{0} ]]()
%966 : Tensor = onnx::Constant[value={0}]()
%967 : Float(22800) = onnx::Gather[axis=1](%965, %966) # /media/WorkSpace/Development_Repository/Working/models/boa/box_regression.py:169:0
%968 : Float(22800) = onnx::Sub(%964, %967) # /media/WorkSpace/Development_Repository/Working/models/boa/box_regression.py:169:0
```
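The rule quoted in the error message can be spelled out in a few lines. The sketch below is a hypothetical pure-Python restatement of it (not ONNX's own shape-inference code): for Gather, the axis must lie in [-r, r-1], where r is the rank of the data input. If Gather_346 is the node that produces `%967`, its data input is `%965`, whose type `Float(0)` describes an empty 1-D (rank 1) tensor, so only axes -1 and 0 would pass and `axis=1` is rejected.

```python
def check_gather_axis(data_rank: int, axis: int) -> int:
    """Validate a Gather axis against the rule in the ONNX error message.

    Hypothetical helper: raises ValueError unless axis is in [-r, r-1],
    where r is the rank of the data input; otherwise returns the
    equivalent non-negative axis.
    """
    r = data_rank
    if not -r <= axis <= r - 1:
        raise ValueError(f"axis must be in [-r, r-1], got axis={axis} for rank {r}")
    return axis + r if axis < 0 else axis


# boxes[:, 0] on an (N, 4) tensor: rank 2, so axis 1 is valid
print(check_gather_axis(2, 1))  # 1

# %965 is Float(0): a rank-1 empty constant, so axis 1 is out of range
try:
    check_gather_axis(1, 1)
except ValueError as err:
    print(err)  # axis must be in [-r, r-1], got axis=1 for rank 1
```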

The code that most likely triggers the error (Gather_346) is the following:

```python
def apply_deltas(self, deltas, boxes):
    """
    Apply transformation `deltas` (dx, dy, dw, dh) to `boxes`.

    Args:
        deltas (Tensor): transformation deltas of shape (N, k*4)
        boxes (Tensor): boxes to transform, of shape (N, 4)
    """
    assert torch.isfinite(deltas).all().item(), "Box regression deltas become infinite or NaN!"
    boxes = boxes.to(deltas.dtype)
    print("apply_deltas boxes.shape", boxes.shape)

    OFFSET = 1
    widths = boxes[:, 2] - boxes[:, 0] + OFFSET
    heights = boxes[:, 3] - boxes[:, 1] + OFFSET
    ctr_x = boxes[:, 0] + 0.5 * widths
    ctr_y = boxes[:, 1] + 0.5 * heights

    wx, wy, ww, wh = self.weights
    dx = deltas[:, 0::4] / wx
    dy = deltas[:, 1::4] / wy
    dw = deltas[:, 2::4] / ww
    dh = deltas[:, 3::4] / wh

    # Clamp too-large values before passing them to torch.exp()
    dw = torch.clamp(dw, max=self.scale_clamp)
    dh = torch.clamp(dh, max=self.scale_clamp)

    pred_ctr_x = dx * widths[:, None] + ctr_x[:, None]
    pred_ctr_y = dy * heights[:, None] + ctr_y[:, None]
    pred_w = torch.exp(dw) * widths[:, None]
    pred_h = torch.exp(dh) * heights[:, None]

    pred_boxes = torch.zeros_like(deltas)
    pred_boxes[:, 0::4] = pred_ctr_x - 0.5 * pred_w  # x1
    pred_boxes[:, 1::4] = pred_ctr_y - 0.5 * pred_h  # y1
    pred_boxes[:, 2::4] = pred_ctr_x + 0.5 * pred_w  # x2
    pred_boxes[:, 3::4] = pred_ctr_y + 0.5 * pred_h  # y2
    print("pred_boxes.shape", pred_boxes.shape)

    return pred_boxes
```
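To make the decoding arithmetic in `apply_deltas` concrete, here is a hypothetical scalar version for one box and one class (k = 1), written in plain Python so it runs without PyTorch. It mirrors the formulas above, including `OFFSET = 1`; the default `weights` and `scale_clamp` are assumed Detectron2-style values, not values taken from this model's configuration. In the PyTorch version, each column read such as `boxes[:, 2]` is what shows up as `onnx::Gather[axis=1]` in the export log.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]


def apply_deltas_single(box: Box, delta: Box,
                        weights: Box = (10.0, 10.0, 5.0, 5.0),
                        scale_clamp: float = math.log(1000.0 / 16),
                        offset: float = 1.0) -> Box:
    """Decode one (dx, dy, dw, dh) delta against one (x1, y1, x2, y2) box.

    Hypothetical scalar version of apply_deltas; weights and scale_clamp are
    assumed defaults, not values read from the model config.
    """
    x1, y1, x2, y2 = box
    width = x2 - x1 + offset
    height = y2 - y1 + offset
    ctr_x = x1 + 0.5 * width
    ctr_y = y1 + 0.5 * height

    wx, wy, ww, wh = weights
    dx = delta[0] / wx
    dy = delta[1] / wy
    # Clamp before exp(), as torch.clamp(..., max=scale_clamp) does
    dw = min(delta[2] / ww, scale_clamp)
    dh = min(delta[3] / wh, scale_clamp)

    pred_ctr_x = dx * width + ctr_x
    pred_ctr_y = dy * height + ctr_y
    pred_w = math.exp(dw) * width
    pred_h = math.exp(dh) * height
    return (pred_ctr_x - 0.5 * pred_w, pred_ctr_y - 0.5 * pred_h,
            pred_ctr_x + 0.5 * pred_w, pred_ctr_y + 0.5 * pred_h)


print(apply_deltas_single((0.0, 0.0, 9.0, 9.0), (0.0, 0.0, 0.0, 0.0)))
# (0.0, 0.0, 10.0, 10.0)
```

With zero deltas the box keeps its center and size, except that the `+ OFFSET` in the width and height makes x2 and y2 come out one pixel larger than in the input box.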

Here is the configuration of the Python environment:

```text
# Python working environment (1.5.1)
virtualenv 15.0.1
python 3.6
torch 1.5.1
torchvision 0.6.1
onnx 1.10.2
onnxruntime 1.9.0
ubuntu 16.04
CUDA 10.2 (440.33.01, not used in tests so far)
```

Since I wasn't sure whether this is a PyTorch version issue, I also tried the following environment:

```text
# Python working environment (1.10.0)
virtualenv 15.0.1
python 3.6
torch 1.10.0
torchvision 0.11.1
onnx 1.10.2
onnxruntime 1.9.0
ubuntu 16.04
CUDA 10.2 (440.33.01, not used in tests so far)
```

In this environment, the export simply crashed, with the following gdb output:

```text
/media/WorkSpace/Development_Repository/Working/models/detectron2/modeling/poolers.py:73: TracerWarning: Using len to get tensor shape might cause the trace to be incorrect. Recommended usage would be tensor.shape[0]. Passing a tensor of different shape might lead to errors or silently give incorrect results.
  (len(box_tensor), 1), batch_index, dtype=box_tensor.dtype, device=box_tensor.device

Thread 1 "python" received signal SIGSEGV, Segmentation fault.
0x00007fffc9e57c08 in std::_Function_handler<void (onnx_torch::InferenceContext&), onnx_torch::OpSchema onnx_torch::GetOpSchema<onnx_torch::ConstantOfShape_Onnx_ver9>()::{lambda(onnx_torch::InferenceContext&)#1}>::_M_invoke(std::_Any_data const&, onnx_torch::InferenceContext&) ()
   from /media/WorkSpace/Development_Repository/Working/models/venv3_torch/lib/python3.6/site-packages/torch/lib/libtorch_cpu.so
```

So I stayed with the 1.5.1 environment. I also tested the exported ONNX model in Java with DL4J and got the same error as in Python:

```text
2021-11-25 15:41:30.1671963 [I:onnxruntime:, inference_session.cc:230 onnxruntime::InferenceSession::ConstructorCommon::<lambda_dcdcfd37ad4a704b0bd7e98f885edfd8>::operator ()] Flush-to-zero and denormal-as-zero are off
2021-11-25 15:41:30.1672832 [I:onnxruntime:, inference_session.cc:237 onnxruntime::InferenceSession::ConstructorCommon] Creating and using per session threadpools since use_per_session_threads_ is true
WARNING: Since openmp is enabled in this build, this API cannot be used to configure intra op num threads. Please use the openmp environment variables to control the number of threads.
Exception caught java.lang.RuntimeException: Load model from C:\Users\ps\IdeaProjects\Demo_DL4J\src\main\resources\checkpoint_boa.onnx failed:Node (Gather_341) Op (Gather) [ShapeInferenceError] axis must be in [-r, r-1]
```

Question:

Could somebody help me figure out what is going on here? In this test, both the model and the data are on the CPU. To narrow down the error, I followed the steps suggested in "Exporting Fasterrcnn Resnet50 fpn to ONNX", which work in my 1.5.1 test environment. However, I cannot get it to work with my own model. To rule out problems with the input, I used the same data that the model normally handles correctly.

Any input will be appreciated!
