Hello, I have to train n clients, where each client has its own model.

So I instantiated a model with model = Net() and then created n copies:

models.append(copy.deepcopy(model))
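
For context, here is a minimal sketch of that setup, assuming PyTorch. `Net` below is a hypothetical stand-in for the real model, and `n_clients` and the per-client SGD optimizers are illustrative assumptions rather than the exact training code:

```python
import copy

import torch
import torch.nn as nn


class Net(nn.Module):
    """Hypothetical stand-in for the real model."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return self.fc(x)


n_clients = 3  # illustrative value
model = Net()

# Every client starts from an independent copy of the same initial weights.
models = [copy.deepcopy(model) for _ in range(n_clients)]

# Assumption: one optimizer per client, bound to that client's own parameters.
optimizers = [torch.optim.SGD(m.parameters(), lr=0.1) for m in models]
```

Each optimizer is built from the parameters of its own copy, so a step on one client does not touch the others.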

After every batch, I average the weights of all models and load the result into each one:

models[i].load_state_dict(average_weights)
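
To make the aggregation step concrete, here is a minimal sketch, assuming a plain element-wise average with equal weight per client. `average_state_dicts` is a hypothetical helper name, and the three `nn.Linear` clients with constant weights are made up purely for illustration:

```python
import torch
import torch.nn as nn


def average_state_dicts(models):
    """Return the element-wise mean of the models' state dicts."""
    state_dicts = [m.state_dict() for m in models]
    averaged = {}
    for key, ref in state_dicts[0].items():
        stacked = torch.stack([sd[key].float() for sd in state_dicts])
        # Cast back so integer buffers (e.g. BatchNorm counters) keep their dtype.
        averaged[key] = stacked.mean(dim=0).to(ref.dtype)
    return averaged


# Three hypothetical clients with deliberately different weights (1, 2, 3).
models = [nn.Linear(2, 1) for _ in range(3)]
for value, m in enumerate(models, start=1):
    with torch.no_grad():
        for p in m.parameters():
            p.fill_(float(value))

average_weights = average_state_dicts(models)
for m in models:
    # load_state_dict copies the values into the model's existing parameters.
    m.load_state_dict(average_weights)
```

After the loop, every client holds the same averaged parameters (all equal to 2.0 in this toy case).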

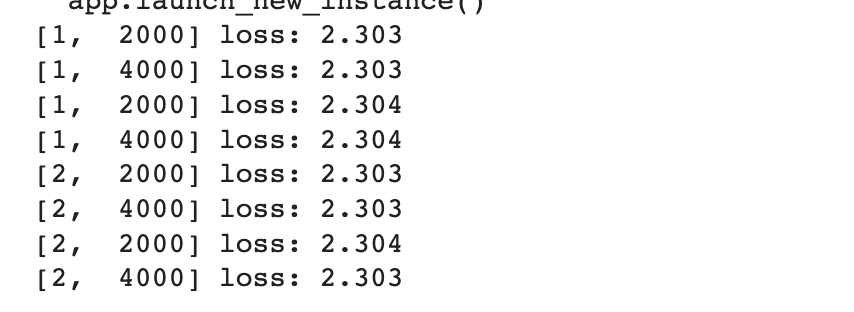

I noticed that the models do not learn and the loss gets stuck.

Any suggestions for fixing this?