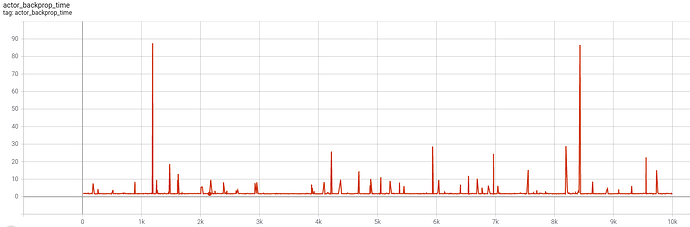

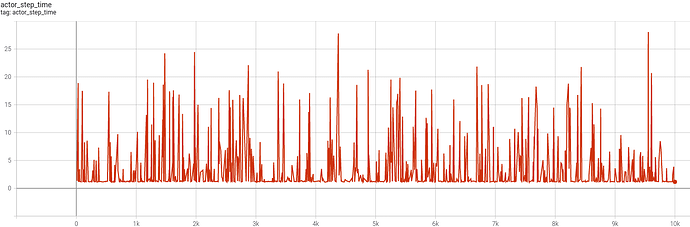

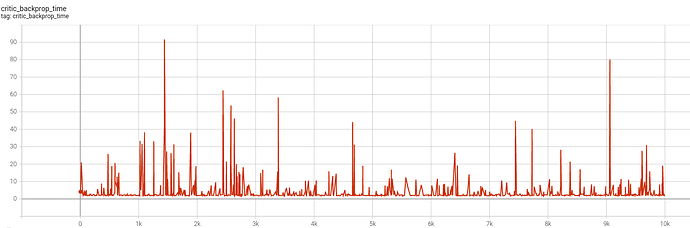

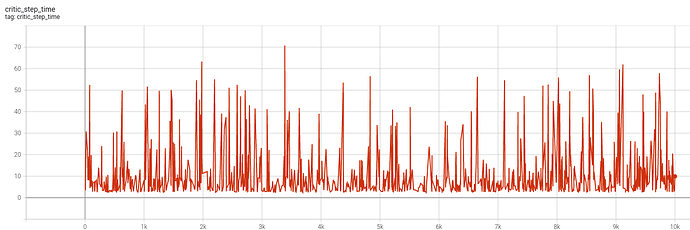

I am using Adam optimizer to train TD3 for offline RL setting. I saw significant increase in training time. Hence, I ran performance profiling for my code. What’s weird is backprop takes less time than update step. I ensured that I am properly using torch.cuda.synchronize. I’ve attached my code snippet for the same below.

Currently, using batch size of 256 for Hopper env and using pytorch 1.7.1.

Below is my code snippet for critic (temp1, temp2, temp3, temp4 are cuda events):

temp2.record()

critic_loss.backward()

temp3.record()

torch.cuda.synchronize()

critic_backprop_time = temp2.elapsed_time(temp3)

#print("critic_backprop_time", critic_backprop_time)

writer.add_scalar("critic_backprop_time", critic_backprop_time, self.total_it)

temp2.record()

self.critic_optimizer.step()

temp3.record()

torch.cuda.synchronize()

critic_step_time = temp2.elapsed_time(temp3)

#print("critic_step_time", critic_step_time)

writer.add_scalar("critic_step_time", critic_step_time, self.total_it)

Code snippet for actor:

temp4.record()

actor_loss.backward()

temp3.record()

torch.cuda.synchronize()

actor_backprop_time = temp4.elapsed_time(temp3)

#print("actor_backprop_time", actor_backprop_time)

writer.add_scalar("actor_backprop_time", actor_backprop_time, self.total_it)

temp4.record()

self.actor_optimizer.step()

temp3.record()

torch.cuda.synchronize()

actor_step_time = temp4.elapsed_time(temp3)

#print("actor_step_time", actor_step_time)

writer.add_scalar("actor_step_time", actor_step_time, self.total_it)

temp4.record()