Hi. I got an “out of memory” error when I tried to run the following program.

```python
import os

import torch

os.environ["CUDA_VISIBLE_DEVICES"] = "3"

torch.cuda.init()
```

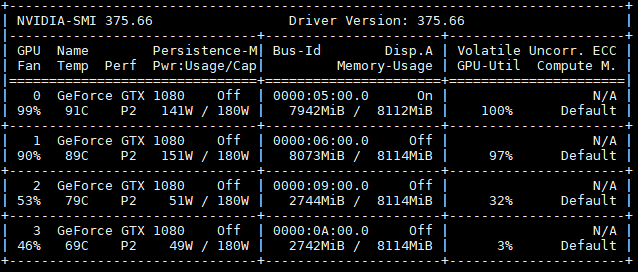

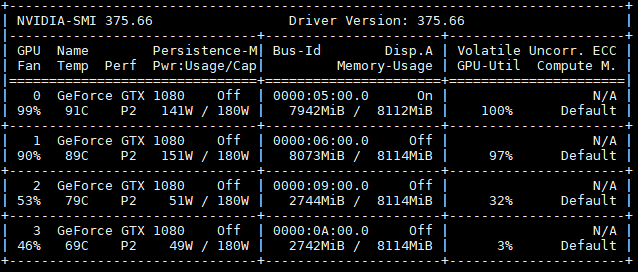

The GPU utilization is shown above. Note that GPU 0 has almost run out of memory, while GPU 3 still has plenty of free memory.

Do you have any idea what causes the problem? Thank you for your kind help.

Did you update the NVIDIA drivers recently?

This error is sometimes thrown when the CUDA / NVIDIA drivers are in a bad state.

A restart usually solves the problem.

Alternatively, could you try to set another GPU as the visible device?

The GPU IDs sometimes don’t match what `nvidia-smi` shows: by default CUDA orders devices fastest first, while `nvidia-smi` lists them by PCI bus ID.

Could you run `export CUDA_DEVICE_ORDER=PCI_BUS_ID` before the script?
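If exporting the variable in the shell is inconvenient, the same setting can probably be applied from inside the script. This is only a minimal sketch: `select_gpu` is a hypothetical helper name, not part of PyTorch, and it assumes both variables are set before CUDA is initialized in the process.

```python
import os


def select_gpu(index: int) -> None:
    """Hypothetical helper: expose only the GPU that nvidia-smi lists at `index`."""
    # Enumerate devices in PCI bus order so the numbering matches nvidia-smi.
    os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
    # Hide every other GPU; the chosen one is then seen as device 0 in this process.
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)


# Set the variables before CUDA is initialized; later changes are not picked up.
select_gpu(3)

try:
    import torch
except ImportError:
    # Assumption: torch may be missing where this sketch is tried out.
    torch = None

if torch is not None and torch.cuda.is_available():
    # With a single visible device, index 0 refers to the selected GPU.
    print(torch.cuda.get_device_name(0))
```

With only one visible device, the script would address it as `cuda:0`, even though `nvidia-smi` lists it as GPU 3.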

Thank you, ptrblck. I tried `export CUDA_DEVICE_ORDER=PCI_BUS_ID`, and the script then ran successfully on the specified GPU.