Hi,

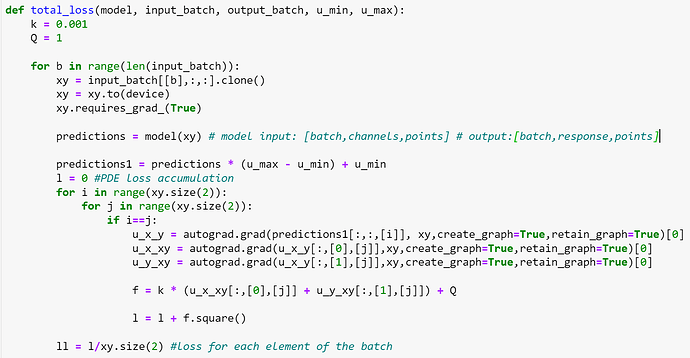

The code above raises a CUDA out-of-memory error. Is there any way to make this calculation more memory-efficient? I also need to keep the computation graph intact, since I have to backpropagate through it during training. Here, I am basically trying to calculate a partial differential equation loss where the right-hand side is just zero. Many thanks!

You are most likely running out of memory (OOM) because you are explicitly keeping the computation graph, along with its intermediate activations, alive via `retain_graph=True`.

Are you sure you need to keep it alive? Since you are recomputing `u_x_y` in each iteration, I would guess that you can let each graph be freed once its gradients have been computed.
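As a rough illustration, here is a minimal sketch of that idea. The original code is only available as an image, so everything here is an assumption: the small `model`, the `pde_loss` helper, the random collocation points, and the choice of `u_xy = 0` as the PDE are all hypothetical stand-ins. The point is that `create_graph=True` inside `torch.autograd.grad` is enough to make the derivatives differentiable, and a plain `loss.backward()` without `retain_graph=True` lets the graph be freed after every iteration.

```python
import torch

torch.manual_seed(0)

# Hypothetical network u(x, y); the real model from the question is not shown.
model = torch.nn.Sequential(
    torch.nn.Linear(2, 16),
    torch.nn.Tanh(),
    torch.nn.Linear(16, 1),
)
optimizer = torch.optim.SGD(model.parameters(), lr=1e-2)


def pde_loss(model, xy):
    """Mean squared residual of the assumed PDE u_xy = 0."""
    xy = xy.clone().requires_grad_(True)
    u = model(xy)
    # First derivatives du/dx and du/dy. create_graph=True keeps them
    # differentiable so that the loss can be backpropagated to the weights.
    (du,) = torch.autograd.grad(u.sum(), xy, create_graph=True)
    u_x = du[:, 0]
    # Mixed second derivative d/dy (du/dx).
    (d2u,) = torch.autograd.grad(u_x.sum(), xy, create_graph=True)
    u_x_y = d2u[:, 1]
    return (u_x_y ** 2).mean()


losses = []
for step in range(3):
    xy = torch.rand(64, 2)  # fresh collocation points each iteration
    optimizer.zero_grad()
    loss = pde_loss(model, xy)
    loss.backward()  # no retain_graph=True: the graph is rebuilt next step
    optimizer.step()
    losses.append(loss.item())  # store a Python float, not the tensor
```

Storing `loss.item()` rather than `loss` matters for the same reason: appending the tensor itself would keep a reference to its graph and slowly increase memory usage across iterations.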