I am trying to fine-tune the "Llama-2-7b-chat-hf" model on the "mlabonne/guanaco-llama2-1k" dataset in Google Colab with a T4 GPU runtime. I am using the QLoRA technique for fine-tuning. Below is the code I am using.

    import os
    import torch
    from datasets import load_dataset
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        BitsAndBytesConfig,
        HfArgumentParser,
        TrainingArguments,
        pipeline,
        logging,
    )
    from peft import LoraConfig, PeftModel
    from trl import SFTTrainer

    # Model from Hugging Face hub
    base_model = "NousResearch/Llama-2-7b-chat-hf"

    # New instruction dataset
    guanaco_dataset = "mlabonne/guanaco-llama2-1k"

    # Fine-tuned model
    new_model = "llama-2-7b-chat-guanaco"

    # Load Dataset
    dataset = load_dataset(guanaco_dataset, split="train")

    compute_dtype = getattr(torch, "float16")

    quant_config = BitsAndBytesConfig(
        load_in_4bit=True,
        bnb_4bit_quant_type="nf4",
        bnb_4bit_compute_dtype=compute_dtype,
        bnb_4bit_use_double_quant=False,
    )

    # Load base model
    model = AutoModelForCausalLM.from_pretrained(
        base_model,
        quantization_config=quant_config,
        device_map={"": 0}
    )
    model.config.use_cache = False
    model.config.pretraining_tp = 1

    # Load LLaMA tokenizer
    tokenizer = AutoTokenizer.from_pretrained(base_model, trust_remote_code=True)
    tokenizer.pad_token = tokenizer.eos_token
    tokenizer.padding_side = "right"

    # Load LoRA configuration
    peft_args = LoraConfig(
        lora_alpha=16,
        lora_dropout=0.1,
        r=64,
        bias="none",
        task_type="CAUSAL_LM",
    )

    # Set training parameters
    training_params = TrainingArguments(
        output_dir="./results",
        num_train_epochs=1,
        per_device_train_batch_size=3,
        gradient_accumulation_steps=1,
        optim="paged_adamw_32bit",
        save_steps=25,
        logging_steps=25,
        learning_rate=2e-4,
        weight_decay=0.001,
        fp16=False,
        bf16=False,
        max_grad_norm=0.3,
        max_steps=-1,
        warmup_ratio=0.03,
        group_by_length=True,
        lr_scheduler_type="constant",
        report_to="tensorboard"
    )

    # Set supervised fine-tuning parameters
    trainer = SFTTrainer(
        model=model,
        train_dataset=dataset,
        peft_config=peft_args,
        dataset_text_field="text",
        max_seq_length=None,
        tokenizer=tokenizer,
        args=training_params,
        packing=False,
    )

    # Train model
    trainer.train()

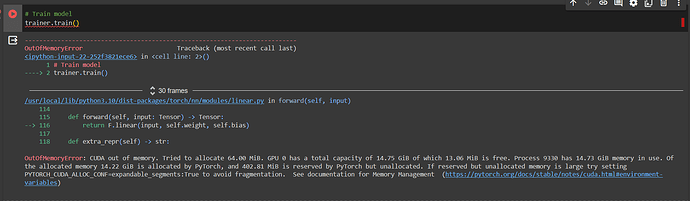

After running the last line (`trainer.train()`), I got the error below.

With `per_device_train_batch_size=4`, I got this error.

And with `per_device_train_batch_size=3`, I got this error.

The same error occurred in both cases, only with different memory utilization.

I have tried changing `per_device_train_batch_size` to 3, 4, 5, and 6, but none of these values worked.
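For reference, the values tried above are all 3 or larger. The batch size the optimizer sees per step is the product of `per_device_train_batch_size`, `gradient_accumulation_steps`, and the number of GPUs, while memory use per forward/backward pass should depend mainly on the per-device value. A minimal sketch of that arithmetic, where the helper `effective_batch_size` is hypothetical and not part of `transformers`:

```python
def effective_batch_size(per_device: int, grad_accum_steps: int, num_devices: int = 1) -> int:
    """Number of samples contributing to each optimizer step (hypothetical helper)."""
    return per_device * grad_accum_steps * num_devices


# Setting used in the code above: 3 samples per pass, no accumulation
print(effective_batch_size(per_device=3, grad_accum_steps=1))

# Same effective batch size of 4, but only 1 sample is on the GPU per pass
print(effective_batch_size(per_device=4, grad_accum_steps=1))
print(effective_batch_size(per_device=1, grad_accum_steps=4))
```

Whether a per-device value of 1 or 2 actually fits on a T4 with this model and `max_seq_length=None` is not something this sketch can show; it only illustrates how the two `TrainingArguments` settings combine.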

I also tried:

    # Set the environment variable
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = "expandable_segments:True"

    # Verify that the environment variable is set
    print(os.environ["PYTORCH_CUDA_ALLOC_CONF"])
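One thing worth checking here is ordering. As far as I understand, PyTorch reads `PYTORCH_CUDA_ALLOC_CONF` when its CUDA allocator is initialized, so setting it after the model has already been loaded onto the GPU may have no effect. A conservative sketch, assuming the safest point is before `torch` is imported at all (the helper `set_alloc_conf` is hypothetical):

```python
import os
import sys


def set_alloc_conf(value: str = "expandable_segments:True") -> bool:
    """Set PYTORCH_CUDA_ALLOC_CONF and report whether torch was not yet imported.

    Hypothetical helper: returns True if the variable was set before `torch`
    appeared in sys.modules, False if torch had already been imported.
    """
    set_early = "torch" not in sys.modules
    os.environ["PYTORCH_CUDA_ALLOC_CONF"] = value
    return set_early


if not set_alloc_conf():
    # Assumption: a runtime restart is the simplest way to guarantee ordering
    print("torch is already imported; restart the runtime and set the variable first")
```

In Colab this would go in the first cell, before the cell that imports `torch` and loads the model.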

And lastly:

    torch.cuda.empty_cache()

Nothing worked for me.