I have 2 epochs, each with around 150,000 batches. I would like to output the evaluation every 10,000 batches.

How can I do so?

My train loop:

```python
best_valid_loss = float('inf')
train_losses = []
valid_losses = []

for epoch in range(params['epochs']):
    print('\n Epoch {:} / {:}'.format(epoch + 1, params['epochs']))

    # train model
    train_loss = train(scheduler, optimizer)

    # evaluate model
    valid_loss = evaluate()

    # save the best model
    if valid_loss < best_valid_loss:
        best_valid_loss = valid_loss
        torch.save(model.state_dict(), model_file)

    # append training and validation loss
    train_losses.append(train_loss)
    valid_losses.append(valid_loss)

    print(f'\nTraining Loss: {train_loss:.3f}')
    print(f'Validation Loss: {valid_loss:.3f}')
```

This is the train() function called above:

def train(scheduler, optimizer):

t0 = datetime.datetime.utcnow()

model.train()

total_loss, total_accuracy = 0, 0

step_loss = 0

# iterate over batches

for step, batch in enumerate(train_data_loader):

# progress update after every 50 batches.

if step % 1000 == 0 and not step == 0:

# Calculate elapsed time in seconds.

elapsed = (datetime.datetime.utcnow() - t0).total_seconds()

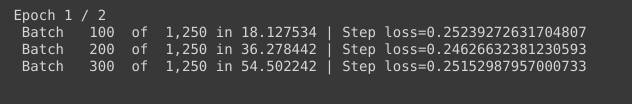

print(' Batch {:>5,} of {:>5,} in {}. Step loss={}'.format(step, len(train_data_loader), elapsed, step_loss / 100))

step_loss = 0

# push the batch to gpu

batch = [r.to(device) for r in batch]

textq, maskq, text1, mask1, text2, mask2, labels = batch

# clear previously calculated gradients

model.zero_grad()

# get model predictions for the current batch

v1, v2 = model(textq, maskq, text1, mask1, text2, mask2)

sim = cos_sim(v1, v2)

nan_count = torch.count_nonzero(torch.isnan(sim))

if nan_count.item() > 0:

print("Oops, have {} nans in similarity".format(nan_count))

# compute the loss between actual and predicted values

loss = cosine_embedding_loss(v1, v2, labels, margin=MARGIN)

# add on to the total loss

total_loss += loss.item()

step_loss += loss.item()

# backward pass to calculate the gradients

loss.backward()

# clip the the gradients to 1.0. It helps in preventing the exploding gradient problem

torch.nn.utils.clip_grad_norm_(model.parameters(), 1.0)

# update parameters

optimizer.step()

scheduler.step()

# compute the training loss of the epoch

avg_loss = total_loss / len(train_data_loader)

#returns the loss

return avg_loss
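One way to get an evaluation every 10,000 batches is to move the evaluation and checkpointing out of the epoch loop and into the batch loop, keyed on a step counter. Here is a minimal, framework-agnostic sketch under that assumption; `train_with_periodic_eval`, `make_batches`, `train_step`, and `on_best` are hypothetical names for illustration, not part of PyTorch. In the code above, `train_step` would roughly correspond to the body of the `for step, batch in enumerate(train_data_loader)` loop (returning `loss.item()`), `evaluate` to the existing `evaluate()`, and `on_best` to the `torch.save(...)` call. A global step counter is used so the cadence carries across epoch boundaries.

```python
from typing import Callable, Iterable, List, Tuple


def train_with_periodic_eval(
    epochs: int,
    make_batches: Callable[[], Iterable],
    train_step: Callable[[object], float],
    evaluate: Callable[[], float],
    eval_every: int = 10_000,
    on_best: Callable[[float], None] = lambda loss: None,
) -> Tuple[List[float], List[float]]:
    """Train for `epochs` epochs, calling `evaluate` every `eval_every` batches.

    Returns (train_losses, valid_losses), one entry per evaluation, where each
    train loss is the mean over the batches since the previous evaluation.
    """
    best_valid_loss = float("inf")
    train_losses: List[float] = []
    valid_losses: List[float] = []
    global_step = 0        # counts batches across ALL epochs
    window_loss = 0.0      # summed train loss since the last evaluation
    window_batches = 0

    for _ in range(epochs):
        for batch in make_batches():
            window_loss += train_step(batch)
            window_batches += 1
            global_step += 1

            # This check must live INSIDE the batch loop; placed after the
            # loop, it would only run once per epoch.
            if global_step % eval_every == 0:
                valid_loss = evaluate()
                train_losses.append(window_loss / window_batches)
                valid_losses.append(valid_loss)
                print(f"step {global_step}: train {train_losses[-1]:.3f} "
                      f"valid {valid_loss:.3f}")
                if valid_loss < best_valid_loss:
                    best_valid_loss = valid_loss
                    on_best(valid_loss)  # e.g. save model.state_dict() here
                window_loss, window_batches = 0.0, 0

    return train_losses, valid_losses


# Toy usage: 2 epochs x 25 batches, evaluating every 10 batches.
fake_valid = iter([0.9, 0.8, 0.85, 0.7, 0.75])
saved: List[float] = []
tl, vl = train_with_periodic_eval(
    epochs=2,
    make_batches=lambda: range(25),
    train_step=lambda batch: 1.0,
    evaluate=lambda: next(fake_valid),
    eval_every=10,
    on_best=saved.append,
)
```

With 2 epochs of 25 batches, this evaluates at global steps 10, 20, 30, 40 and 50; a per-epoch counter would only fire at 10 and 20 in each epoch. If `evaluate()` calls `model.eval()` (as evaluation functions usually do), call `model.train()` again after it returns, otherwise dropout and batch norm stay in eval mode for the rest of the epoch.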

I had added the code block outside of the loop, so it never triggered. Now everything works, thank you!