Hi!

Could I get some advice on the following situation, please?

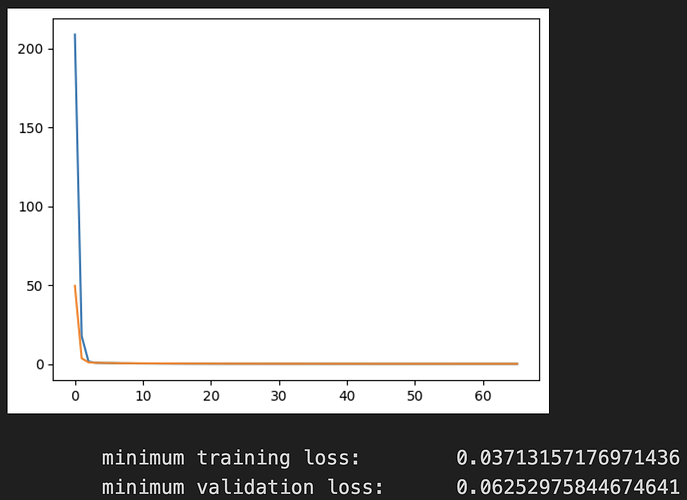

I’m doing cross-validation. In each cross-validation round, I record the lowest training loss and the lowest validation loss reached across that round's epochs. Then I pick the model with the lowest validation loss for testing.
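A minimal sketch of the selection step described above, assuming each round's per-epoch losses are kept in plain lists. The helper names `summarize_fold` and `select_fold` are hypothetical, and the loss values are placeholders, not real results. One detail worth checking: the lowest training loss and the lowest validation loss in a round can come from different epochs, so the sketch also reports the training loss at the epoch where validation loss was lowest, which gives a like-for-like comparison.

```python
from typing import List


def summarize_fold(train_hist: List[float], val_hist: List[float]) -> dict:
    """Summarize one cross-validation round from its per-epoch loss histories."""
    best_epoch = min(range(len(val_hist)), key=lambda e: val_hist[e])
    return {
        "best_epoch": best_epoch,
        "min_train": min(train_hist),                # lowest training loss in the round
        "min_val": val_hist[best_epoch],             # lowest validation loss in the round
        "train_at_best_val": train_hist[best_epoch], # training loss at that same epoch
    }


def select_fold(summaries: List[dict]) -> int:
    """Return the index of the round whose model reached the lowest validation loss."""
    return min(range(len(summaries)), key=lambda i: summaries[i]["min_val"])


# Hypothetical placeholder histories (train, val) for three rounds.
histories = [
    ([0.10, 0.05, 0.03, 0.02], [0.12, 0.07, 0.05, 0.06]),
    ([0.09, 0.04, 0.025, 0.02], [0.11, 0.06, 0.04, 0.045]),
    ([0.11, 0.06, 0.035, 0.03], [0.13, 0.08, 0.06, 0.065]),
]
summaries = [summarize_fold(t, v) for t, v in histories]
chosen = select_fold(summaries)
print(chosen, summaries[chosen])
```

In these placeholder histories the lowest training loss (0.02) comes from a later epoch than the best validation loss, so comparing it against the best validation loss overstates the gap.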

The problem is that while my test loss (0.05) is close to my validation loss (0.04), in every cross-validation round my validation loss is 1.5 to 2 times my training loss.
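Taking the numbers above at face value, a quick bit of arithmetic puts the two gaps side by side. Applying the per-round ratio of 1.5 to 2 to the selected model's validation loss is an assumption; the helper `implied_train_loss` is just for illustration.

```python
def implied_train_loss(val_loss: float, ratio: float) -> float:
    """Training loss implied by a given validation/training loss ratio."""
    return val_loss / ratio


val_loss = 0.04   # selected model's validation loss (from the post)
test_loss = 0.05  # test loss (from the post)

low = implied_train_loss(val_loss, 2.0)   # validation loss = 2.0 x training loss
high = implied_train_loss(val_loss, 1.5)  # validation loss = 1.5 x training loss
print(f"implied training loss: {low:.4f} to {high:.4f}")
print(f"test/validation ratio: {test_loss / val_loss:.2f}")
```

This gives an implied training loss of roughly 0.020 to 0.027 and a test/validation ratio of 1.25, so under this assumption the test loss sits closer to the validation loss than the validation loss does to the training loss.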

My questions are:

1. Is this overfitting? My model seems to overfit in the cross-validation rounds (the training loss is much smaller than the validation loss) but not much on the test set (the validation loss is close to the test loss). This is confusing because the data used for testing and for cross-validation are the same. Or does the model simply generalize poorly in general?

2. What should I do? The model does regression: the input is a long 1D vector and the output is a single number. (I already use early stopping.)

I tried dropout, but that made the model converge at a higher loss.
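For reference, a minimal NumPy sketch of inverted dropout, the variant used by PyTorch's `nn.Dropout` and Keras's `Dropout` layer: during training each activation is zeroed with probability `p` and the survivors are scaled by `1/(1-p)`, while at evaluation time the layer is the identity. The noise injected during training is one reason the training loss tends to settle higher with dropout enabled, so the rate `p` is the main knob to tune. The value `p=0.2` below is only an example.

```python
import numpy as np


def dropout(x: np.ndarray, p: float, training: bool, rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout: zero each element with probability p during training."""
    if not training or p == 0.0:
        return x  # identity at evaluation time
    keep = rng.random(x.shape) >= p  # keep each unit with probability 1 - p
    return x * keep / (1.0 - p)      # rescale so the expected value is unchanged


rng = np.random.default_rng(0)
x = np.ones(100_000)
y_train = dropout(x, p=0.2, training=True, rng=rng)
y_eval = dropout(x, p=0.2, training=False, rng=rng)
print(float(y_train.mean()), float((y_train == 0).mean()), float(y_eval.mean()))
```

About a fifth of the training-mode outputs are zero and the rest are 1.25, so the mean stays close to 1; in evaluation mode the input passes through unchanged.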

I also tried to ‘strengthen’ the regularization by reducing the early-stopping patience from 5 to 3, and again got a higher loss.
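A minimal sketch of patience-based early stopping, assuming the stopper tracks the best validation loss so far and stops after `patience` consecutive epochs without an improvement larger than `min_delta` (a hypothetical parameter here; real implementations differ in details). The class `EarlyStopper`, the helper `run`, and the validation curve are all illustrative. On a curve with a short plateau, a patience of 3 ends training during the plateau, while a patience of 5 waits long enough to reach the later, lower loss. This is why lowering patience can leave the model at a higher loss rather than regularizing it.

```python
from typing import List, Tuple


class EarlyStopper:
    """Stop when validation loss hasn't improved for `patience` consecutive epochs."""

    def __init__(self, patience: int, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run(val_curve: List[float], patience: int) -> Tuple[int, float]:
    """Return (epoch at which training stops, best validation loss seen)."""
    stopper = EarlyStopper(patience)
    for epoch, loss in enumerate(val_curve):
        if stopper.step(loss):
            return epoch, stopper.best
    return len(val_curve) - 1, stopper.best


# Hypothetical validation curve: a plateau at epochs 3-5, then further improvement.
curve = [0.10, 0.07, 0.06, 0.061, 0.062, 0.060, 0.05, 0.045,
         0.046, 0.047, 0.048, 0.049, 0.05]
print(run(curve, patience=3))  # (5, 0.06)
print(run(curve, patience=5))  # (12, 0.045)
```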