Hi,

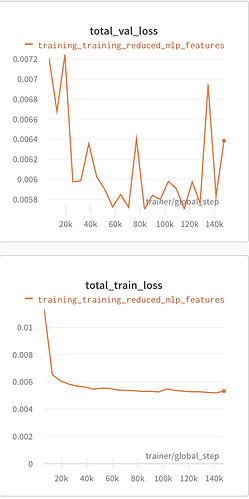

the concepts of overfitting and underfitting are still quite confusing to me. Does this plot of training/validation loss show overfitting or underfitting?

I would claim it’s neither, as the validation loss is still “close” to the training loss and doesn’t change much, which is also why it looks quite noisy. Plot both curves in the same figure and the gap should be small, if I interpret the values correctly.
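If you want a number in addition to the visual check, here is a minimal sketch that averages the gap between the two curves over the last few epochs. The helper `loss_gap` and the loss values are hypothetical and only for illustration; they are not read off your plots.

```python
def loss_gap(train_losses, val_losses, last_k=5):
    """Mean (validation - training) loss over the final `last_k` epochs."""
    if len(train_losses) != len(val_losses):
        raise ValueError("both curves need one value per epoch")
    # Pair up the per-epoch values and keep only the most recent ones.
    pairs = list(zip(train_losses, val_losses))[-last_k:]
    return sum(v - t for t, v in pairs) / len(pairs)


# Hypothetical per-epoch losses (assumption, not the values from this thread).
train = [0.90, 0.60, 0.45, 0.40, 0.38, 0.37]
val = [0.95, 0.66, 0.50, 0.46, 0.43, 0.44]

gap = loss_gap(train, val, last_k=3)
print(f"mean gap over the last 3 epochs: {gap:.3f}")
```

A small, stable gap would point away from overfitting, while a gap that keeps growing as the training loss falls would point towards it. What counts as "small" depends on the loss scale, so treat any threshold as a judgment call.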

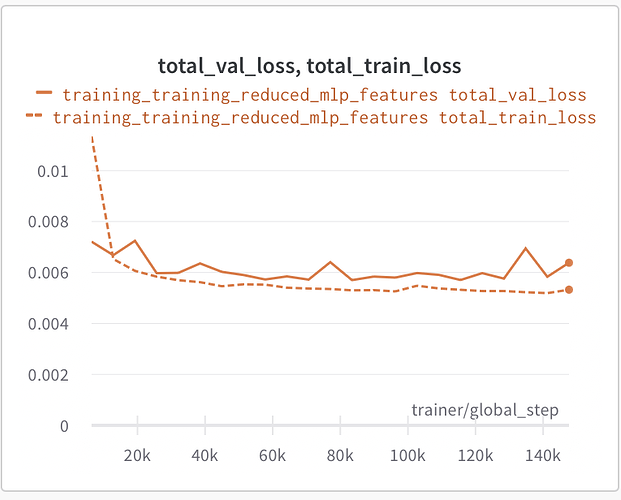

Thanks for your response. This is both plots together. So can this be considered a “reasonable” loss, and does it mean the model is actually learning?

The model reduced the loss initially at least and might not be stuck on a plateau. You might want to continue with the training to see if the loss would still decrease or not.

There are also learning rate schedulers that may be useful later on.

I’m using PyTorch Lightning’s EarlyStopping with a patience of 8, so I guess the training stopped when the validation loss wasn’t improving?

Is a learning rate scheduler similar to using EarlyStopping with PyTorch Lightning?

I try to keep dependencies to a minimum and haven’t used Lightning, but I don’t think so. Early stopping does not change the learning rate; it stops the training process when the validation loss stagnates.
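To make the difference concrete, here is a rough, dependency-free sketch of both ideas. The classes `EarlyStopper` and `PlateauLR` are simplified stand-ins I made up for illustration; they are not the Lightning or PyTorch implementations, and the loss values, learning rate, and factor are assumptions.

```python
class EarlyStopper:
    """Signals a stop after `patience` epochs without improvement."""

    def __init__(self, patience=8, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


class PlateauLR:
    """Shrinks the learning rate after `patience` epochs without improvement."""

    def __init__(self, lr, factor=0.5, patience=3):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Return the (possibly reduced) learning rate; training continues."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.bad_epochs >= self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr


# Hypothetical validation losses: three improving epochs, then a plateau.
val_losses = [1.0, 0.8, 0.7] + [0.7] * 10

stopper = EarlyStopper(patience=8)
scheduler = PlateauLR(lr=0.1, factor=0.5, patience=3)
stopped_epoch = None
lr = scheduler.lr

for epoch, loss in enumerate(val_losses):
    lr = scheduler.step(loss)   # may lower the learning rate, never stops
    if stopper.step(loss):      # never touches the learning rate, only stops
        stopped_epoch = epoch
        break

print(f"stopped at epoch {stopped_epoch} with lr={lr}")
```

Both watch the same validation loss, but the scheduler reacts to a plateau by changing the learning rate and carrying on, while early stopping ends the run. They can be combined, in which case it usually makes sense for the scheduler's patience to be shorter than the early stopping patience so the lower learning rate gets a chance to help.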