I am trying to pad a 2D matrix. When I use ConstantPad2d, it works fine for a 4D input, but it does not work for 3D or 2D inputs.

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.autograd import Variable

from torch import autograd

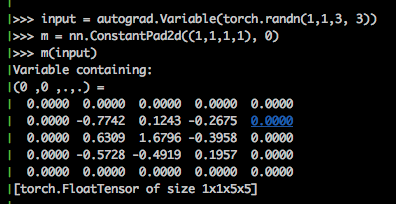

input = autograd.Variable(torch.randn(1,1,3, 3))

m = nn.ConstantPad2d((1,1,1,1), 0)

output = m(input)

print(output)

Can anyone help me here?

I tried the other methods in the forum but couldn’t make them work.

Basically, this does not work:

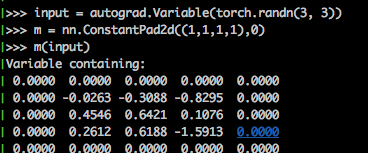

input = autograd.Variable(torch.randn(3, 3))

m = nn.ConstantPad2d((1,1,1,1), 0)

output = m(input)

print(output)

What exactly does your output look like?

I ran the code and it looks like it does what it’s supposed to:

That’s weird:

I get this error:

---------------------------------------------------------------------------

NotImplementedError Traceback (most recent call last)

<ipython-input-8-764c673fd541> in <module>()

10

11 m = nn.ConstantPad2d((1,1,1,1), 0)

---> 12 output = m(input)

13 print(output)

/usr/local/lib/python2.7/dist-packages/torch/nn/modules/module.pyc in __call__(self, *input, **kwargs)

222 for hook in self._forward_pre_hooks.values():

223 hook(self, input)

--> 224 result = self.forward(*input, **kwargs)

225 for hook in self._forward_hooks.values():

226 hook_result = hook(self, input, result)

/usr/local/lib/python2.7/dist-packages/torch/nn/modules/padding.pyc in forward(self, input)

178

179 def forward(self, input):

--> 180 return F.pad(input, self.padding, 'constant', self.value)

181

182 def __repr__(self):

/usr/local/lib/python2.7/dist-packages/torch/nn/functional.pyc in pad(input, pad, mode, value)

1043 return _functions.thnn.ReplicationPad3d.apply(input, *pad)

1044 else:

-> 1045 raise NotImplementedError("Only 4D and 5D padding is supported for now")

1046

1047

NotImplementedError: Only 4D and 5D padding is supported for now

torch.__version__

gives me:

'0.2.0_3'

Looks like support for padding 2D/3D inputs was only implemented recently, so it is not in the 0.2.0 release yet. You could build PyTorch from source if you need it (instructions here), or wait for the next release.
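In the meantime, one possible workaround is to use the 4D case that already works: temporarily add a batch and a channel dimension of size 1, pad, then drop them again. This is only a minimal sketch. `pad_2d_matrix` is a made-up helper name, not something PyTorch provides, and it is written against a recent PyTorch; on an old release like 0.2 you would presumably still need to wrap the input in `autograd.Variable` as in the posts above.

```python
import torch
import torch.nn as nn


def pad_2d_matrix(matrix, padding=(1, 1, 1, 1), value=0):
    """Pad a 2D tensor by routing it through the 4D (N, C, H, W) code path.

    Hypothetical helper for illustration; not part of PyTorch.
    `padding` is (left, right, top, bottom), as in nn.ConstantPad2d.
    """
    # (H, W) -> (1, 1, H, W): add batch and channel dimensions of size 1
    as_4d = matrix.unsqueeze(0).unsqueeze(0)
    padded = nn.ConstantPad2d(padding, value)(as_4d)
    # (1, 1, H', W') -> (H', W'): drop the two singleton dimensions again
    return padded.squeeze(0).squeeze(0)


x = torch.randn(3, 3)
y = pad_2d_matrix(x)
print(y.size())  # torch.Size([5, 5])
```

The original 3x3 values end up in the centre of the result (`y[1:4, 1:4]`), surrounded by a border of zeros.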

Yeah, I guess so. I hope they fix it in the new release!

bummer