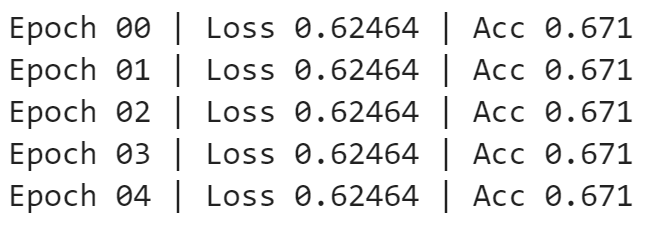

Hello, I’m starting to use PyTorch and want to implement a simple working example of binary classification. I have some data that is pre-processed with pandas, and I then convert the training and test sets to tensors. I created a class with 2 hidden layers with ReLU activation functions and a single output (a logit). I define the optimizer as Adam over the model parameters and the loss function as `BCEWithLogitsLoss`. When I create the instance, the parameters are uniformly distributed, but during training the loss does not decrease and all model parameters stay exactly the same. There is no missing data in the tensors, and I made sure that the untrained instance of the network gives a single output, as expected.

I suspect the problem comes from the `loss.backward()` or `optimizer.step()` calls. Here is a sample of the data, and the code:

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import TensorDataset, DataLoader

# Define tensors to train the model
# (x_train, x_test, y_train, y_test come from the pandas pre-processing, not shown)
x_train_t = torch.tensor(x_train.values, dtype=torch.float)
x_test_t = torch.tensor(x_test.values, dtype=torch.float)
y_train_t = torch.tensor(y_train.values, dtype=torch.float)
y_test_t = torch.tensor(y_test.values, dtype=torch.float)

class NetClassifier(nn.Module):
    def __init__(self, hidden1, hidden2):
        super(NetClassifier, self).__init__()
        self.model = nn.Sequential(
            nn.Linear(13, hidden1),  # 13 input features
            nn.ReLU(),
            nn.Linear(hidden1, hidden2),
            nn.ReLU(),
            nn.Linear(hidden2, 1)    # single output logit
        )

    def forward(self, x):
        x = self.model(x)
        return x

# Create an instance of the class
net = NetClassifier(50, 50)
print(net)
```
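A quick sanity check of the single-output claim can be sketched as follows. The class is re-declared so the snippet runs on its own, and the batch of 4 random rows is a hypothetical input, not the real data. It also confirms that every parameter has `requires_grad=True`, which rules out frozen weights as the cause.

```python
import torch
import torch.nn as nn

class NetClassifier(nn.Module):
    # Same architecture as above: 13 inputs -> two ReLU hidden layers -> 1 logit
    def __init__(self, hidden1, hidden2):
        super().__init__()
        self.model = nn.Sequential(
            nn.Linear(13, hidden1),
            nn.ReLU(),
            nn.Linear(hidden1, hidden2),
            nn.ReLU(),
            nn.Linear(hidden2, 1),
        )

    def forward(self, x):
        return self.model(x)

net = NetClassifier(50, 50)
dummy = torch.randn(4, 13)  # hypothetical batch: 4 rows, 13 features
out = net(dummy)
print(out.shape)  # torch.Size([4, 1])
print(all(p.requires_grad for p in net.parameters()))  # True
```

Note the trailing dimension of size 1: if `y_train` is a pandas Series, each `yb` batch has shape `(batch,)`, so the logits need `squeeze(1)` (or `flatten()`) before being compared with the labels.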

```python
# Create Dataset and DataLoaders to train the neural network
EPOCHS = 5
BATCH_SIZE = 32
LEARNING_RATE = 0.01

train_data_set = TensorDataset(x_train_t, y_train_t)
test_data_set = TensorDataset(x_test_t, y_test_t)

train_dataloader = DataLoader(train_data_set, batch_size=BATCH_SIZE)
test_dataloader = DataLoader(test_data_set, batch_size=BATCH_SIZE)

# Define optimizer and loss function
optimizer = optim.Adam(net.parameters(), lr=LEARNING_RATE)
loss_function = nn.BCEWithLogitsLoss()
```
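For reference, `BCEWithLogitsLoss` takes its arguments in the order `(input, target)`: the raw logits first and the 0/1 labels second, and it applies the sigmoid internally. The short sketch below, using made-up values, checks that this matches applying `torch.sigmoid` and then `nn.BCELoss`.

```python
import torch
import torch.nn as nn

logits = torch.tensor([2.0, -1.0, 0.5])  # raw network outputs (made-up values)
targets = torch.tensor([1.0, 0.0, 1.0])  # 0/1 labels

# Input (logits) first, target (labels) second
loss_a = nn.BCEWithLogitsLoss()(logits, targets)

# Same quantity computed in two steps: sigmoid, then plain binary cross-entropy
loss_b = nn.BCELoss()(torch.sigmoid(logits), targets)

print(torch.allclose(loss_a, loss_b))  # True
```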

```python
for epoch in range(EPOCHS):
    epoch_loss = 0
    epoch_acc = 0

    for xb, yb in train_dataloader:
        # Zero gradients for training
        optimizer.zero_grad()

        # Use current model parameters to predict output
        y_pred = net(xb)

        # Turn probabilities into prediction
        pred = torch.round(torch.sigmoid(torch.flatten(y_pred)))

        # Calculate loss, use float type to calculate loss
        loss = loss_function(yb, pred)

        # Backpropagate
        loss.backward()

        # Step in the optimizer
        optimizer.step()

        epoch_loss += loss.item()
        epoch_acc += (yb == pred).float().mean()

    # Print epoch loss
    print("Epoch {:>02d} | Loss {:.5f} | Acc {:.3f}".format(
        epoch, epoch_loss / len(train_dataloader), epoch_acc / len(train_dataloader)))
```
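One likely explanation, offered as a hedged sketch rather than a definitive diagnosis: `pred` goes through `torch.round`, whose gradient is zero everywhere, so `loss.backward()` produces all-zero gradients and Adam's update is zero. In addition, `loss_function(yb, pred)` passes the labels as the input and the rounded predictions as the target, while `BCEWithLogitsLoss` expects the raw logits as the input. The sketch below first shows the zero gradient through `torch.round`, then trains on the logits directly. It uses hypothetical random data (256 rows, 13 features, label = 1 when the first feature is positive) and a full-batch loop instead of the `DataLoader`, purely to stay self-contained.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# 1) torch.round has zero gradient, so nothing flows back through it
z = torch.tensor([0.3, -1.2, 2.5], requires_grad=True)
torch.round(torch.sigmoid(z)).sum().backward()
print(z.grad)  # tensor([0., 0., 0.])

# 2) Training on the raw logits instead.
#    Hypothetical stand-in data: 256 rows, 13 features, label = 1 if first feature > 0
x = torch.randn(256, 13)
y = (x[:, 0] > 0).float()  # shape (256,), like a converted pandas Series

net = nn.Sequential(
    nn.Linear(13, 50), nn.ReLU(),
    nn.Linear(50, 50), nn.ReLU(),
    nn.Linear(50, 1),
)
optimizer = torch.optim.Adam(net.parameters(), lr=0.01)
loss_function = nn.BCEWithLogitsLoss()

first_weight_before = net[0].weight.detach().clone()
losses = []
for epoch in range(50):
    optimizer.zero_grad()
    logits = net(x).squeeze(1)       # shape (256,), same as y
    loss = loss_function(logits, y)  # input = logits, target = labels
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

# Rounding is only used for accuracy, outside the gradient path;
# logit > 0 is equivalent to sigmoid(logit) > 0.5
with torch.no_grad():
    pred = (net(x).squeeze(1) > 0).float()
    acc = (pred == y).float().mean().item()

print(losses[0], losses[-1], acc)
```

In the mini-batch loop, the same idea would amount to `loss = loss_function(y_pred.squeeze(1), yb)`, keeping the `torch.round` prediction only for the accuracy.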