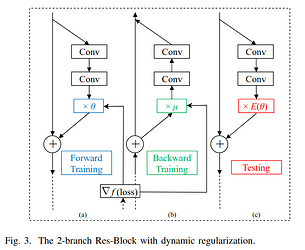

Hi guys. I want to implement the dynamic regularization method proposed in “Convolutional Neural Networks with Dynamic Regularization”. I’m new to writing custom forward and backward passes with autograd, and I found reference code for the Shake-Shake and ShakeDrop methods, which the authors mention.

To implement dynamic regularization, the difference between the training losses has to be passed into both the forward and the backward pass. My question is: how do I pass this variable to an autograd Function? Both of the earlier methods, as well as the autograd examples, only use `grad_output` in `backward`, but the proposed method needs other values as well.
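One common pattern, sketched here under assumptions: pass the extra value to `forward` as an ordinary argument and store it on `ctx`. Plain Python numbers can be stored as attributes, while tensors go through `ctx.save_for_backward`. Then read it back in `backward` and return `None` as its gradient, because `backward` must return one value per `forward` input. The mixing rule, the names `ShakeWithStrength`, `ShakeBlock`, and `update_strength`, and the mapping from loss difference to strength are all hypothetical illustrations, not the formulas from the paper.

```python
import torch
import torch.nn as nn


class ShakeWithStrength(torch.autograd.Function):
    """Shake-Shake-style mixing of two branches with an extra `strength` input.

    Hypothetical rule (NOT the paper's): mixing coefficients are drawn as
    0.5 + strength * (U(0, 1) - 0.5), so strength=0 means plain averaging.
    """

    @staticmethod
    def forward(ctx, x1, x2, strength):
        # Extra, non-differentiable values arrive as ordinary arguments.
        # A Python float can be stored directly on ctx for use in backward.
        ctx.strength = float(strength)
        shape = (x1.size(0),) + (1,) * (x1.dim() - 1)  # one coefficient per sample
        noise = torch.rand(shape, dtype=x1.dtype, device=x1.device) - 0.5
        alpha = 0.5 + ctx.strength * noise
        return alpha * x1 + (1 - alpha) * x2

    @staticmethod
    def backward(ctx, grad_output):
        # The value stored in forward is available here next to grad_output.
        shape = (grad_output.size(0),) + (1,) * (grad_output.dim() - 1)
        noise = torch.rand(shape, dtype=grad_output.dtype, device=grad_output.device) - 0.5
        beta = 0.5 + ctx.strength * noise
        # One gradient per forward input: x1, x2, strength.
        # `strength` is not differentiable, so its gradient is None.
        return beta * grad_output, (1 - beta) * grad_output, None


class ShakeBlock(nn.Module):
    """Hypothetical two-branch block whose strength is set by the training loop."""

    def __init__(self, channels):
        super().__init__()
        self.branch1 = nn.Conv2d(channels, channels, 3, padding=1)
        self.branch2 = nn.Conv2d(channels, channels, 3, padding=1)
        self.strength = 0.0  # updated from outside, e.g. from the loss difference

    def forward(self, x):
        a, b = self.branch1(x), self.branch2(x)
        if self.training:
            return ShakeWithStrength.apply(a, b, self.strength)
        return 0.5 * (a + b)  # deterministic average at evaluation time


def update_strength(model, loss_diff, scale=1.0):
    # Hypothetical mapping from a loss difference to a strength in [0, 1].
    s = min(max(scale * float(loss_diff), 0.0), 1.0)
    for m in model.modules():
        if isinstance(m, ShakeBlock):
            m.strength = s
```

In a training loop you would compute the loss difference from your own loss history (for example, once per epoch) and call `update_strength(model, loss_diff)` before the next forward pass. `ctx` then carries that value from `forward` into `backward`. Passing a float (or calling `.item()` on a loss tensor) keeps the value out of the autograd graph.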