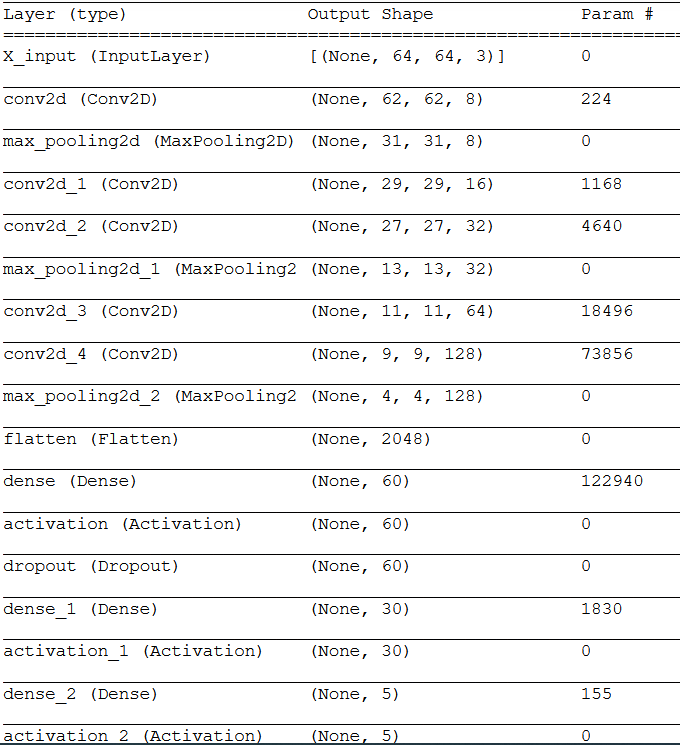

Hello, I am new to PyTorch (this is my third day using it) and I am having some trouble converting a model I had in Keras. The model is the following:

```python
from tensorflow.keras.layers import (Input, Conv2D, MaxPooling2D, Flatten,
                                     Dense, Activation, Dropout)

X_input = Input(shape=(resized_image_size, resized_image_size, 3), name='X_input')
X = Conv2D(8, (3, 3), strides=(1, 1), padding='valid')(X_input)
X = MaxPooling2D(pool_size=(2, 2))(X)
X = Conv2D(16, (3, 3), strides=(1, 1), padding='valid')(X)
X = Conv2D(32, (3, 3), strides=(1, 1), padding='valid')(X)
X = MaxPooling2D(pool_size=(2, 2))(X)
X = Conv2D(64, (3, 3), strides=(1, 1), padding='valid')(X)
X = Conv2D(128, (3, 3), strides=(1, 1), padding='valid')(X)
X = MaxPooling2D(pool_size=(2, 2))(X)
X = Flatten()(X)
X = Dense(num_classes * 8 + 20, input_shape=(29 * 29 * 16, 1))(X)
X = Activation('relu')(X)
X = Dropout(0.4)(X)
X = Dense(num_classes * 4 + 10)(X)
X = Activation('relu')(X)
X = Dense(num_classes)(X)
X = Activation('softmax')(X)
```
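As a sketch of where the size of the flattened vector comes from: with `padding='valid'`, a 3×3 convolution with stride 1 shrinks each spatial side by 2, and a 2×2 max-pool halves it (rounding down). The helper names below and the image size of 128 are hypothetical, chosen only for illustration.

```python
def conv_out(size: int, kernel: int = 3, stride: int = 1, padding: int = 0) -> int:
    """Output length of a convolution along one spatial axis (padding=0 matches Keras 'valid')."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, pool: int = 2) -> int:
    """Output length of a non-overlapping max pool with 'valid' padding (rounds down)."""
    return (size - pool) // pool + 1


def flattened_features(image_size: int) -> int:
    """Trace the spatial size through the Keras model and return the length after Flatten."""
    s = image_size
    s = pool_out(conv_out(s))                # Conv2D(8) -> MaxPooling2D
    s = pool_out(conv_out(conv_out(s)))      # Conv2D(16), Conv2D(32) -> MaxPooling2D
    s = pool_out(conv_out(conv_out(s)))      # Conv2D(64), Conv2D(128) -> MaxPooling2D
    return s * s * 128                       # 128 channels come from the last Conv2D


print(flattened_features(128))  # hypothetical resized_image_size = 128 -> 18432
```

For a 128×128 input the side goes 128 → 126 → 63 → 61 → 59 → 29 → 27 → 25 → 12, so the first `Dense` layer sees 12 × 12 × 128 = 18432 features.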

What I have so far in PyTorch is the following:

```python
import torch
import torch.nn as nn

device = "cuda" if torch.cuda.is_available() else "cpu"


class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        # Conv2d(in_channels, out_channels, kernel_size, stride, padding)
        self.l1 = nn.Sequential(
            nn.Conv2d(1, 8, (3, 3), 1, 1),
            nn.MaxPool2d(2, 2))
        self.l2 = nn.Sequential(
            nn.Conv2d(8, 16, (3, 3), 1, 1),
            nn.Conv2d(16, 32, (3, 3), 1, 1),
            nn.MaxPool2d(2, 2))
        self.l3 = nn.Sequential(
            nn.Conv2d(32, 64, (3, 3), 1, 1),
            nn.Conv2d(64, 128, (3, 3), 1, 1),
            nn.MaxPool2d(2, 2))
        # The in/out feature sizes of the Linear layers are still unknown
        self.l4 = nn.Sequential(
            nn.Linear(,),
            nn.ReLU(),
            nn.Dropout(0.4))
        self.l5 = nn.Sequential(
            nn.Linear(,),
            nn.ReLU())
        self.l6 = nn.Sequential(
            nn.Linear(,),
            nn.Softmax(dim=1))

    def forward(self, x):
        x = self.l1(x)
        x = self.l2(x)
        x = self.l3(x)
        x = self.flatten(x)
        x = self.l4(x)
        x = self.l5(x)
        x = self.l6(x)
        return x


model = NeuralNetwork().to(device)
```

I would like to know whether the first (convolutional) layers are correct, and how to calculate the number of input features for `nn.Linear`, since I read that it is the PyTorch equivalent of Keras' `Dense`.
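Instead of working out the arithmetic by hand, one common approach is to push a dummy tensor through the convolutional part once and read off the flattened size. This is a sketch assuming 3-channel square images; `infer_in_features` is a hypothetical helper name, and 128 is only an example image size.

```python
import torch
import torch.nn as nn

# Convolutional part of the model; Conv2d defaults to stride=1, padding=0 (Keras 'valid'),
# and MaxPool2d's stride defaults to its kernel size.
features = nn.Sequential(
    nn.Conv2d(3, 8, 3), nn.MaxPool2d(2),
    nn.Conv2d(8, 16, 3), nn.Conv2d(16, 32, 3), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 3), nn.Conv2d(64, 128, 3), nn.MaxPool2d(2),
)


def infer_in_features(module: nn.Module, image_size: int, channels: int = 3) -> int:
    """Run one dummy image through `module` and return the flattened feature count."""
    with torch.no_grad():
        out = module(torch.zeros(1, channels, image_size, image_size))
    return out.flatten(start_dim=1).shape[1]


print(infer_in_features(features, 128))  # 18432 for a 128x128 input
```

The returned value is what goes into the first `nn.Linear(in_features, num_classes * 8 + 20)`; the later `Linear` layers simply take the previous layer's output size. Alternatively, `nn.LazyLinear(out_features)` infers `in_features` automatically on the first forward pass.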