Hi guys, I want to run the forward pass of a PyTorch model that is defined in Python from C++, using pybind. I followed the tutorial on CUDA/C++ extensions here. With that I was able to:

- Write ext.cpp, where the C++ function for the forward pass resides

ext.cpp

#include <torch/extension.h> // One-stop header.

at::Tensor extforward(torch::nn::Module *model, torch::Tensor x)
{
    // Loop through every submodule of the network and apply forward to the input
    for (const auto &submod : model->modules())
    {
        x = submod->as<torch::nn::AnyModule>()->forward(x);
    }
    return x;
}

PYBIND11_MODULE(TORCH_EXTENSION_NAME, m)
{
    m.def("forw", &extforward, "CPP forward");
}

- Write a setup.py that successfully compiles the C++ function and exposes it as a Python module

setup.py

from setuptools import setup, Extension
from torch.utils import cpp_extension

libname = 'ext'

setup(name=libname,
      ext_modules=[cpp_extension.CppExtension(libname, ['ext.cpp'])],
      cmdclass={'build_ext': cpp_extension.BuildExtension})

print(cpp_extension.BuildExtension)

- Write test.py, which builds a simple network and tries to run the forward pass with the C++ function

test.py

import torch
import torch.nn as nn
import torch.nn.functional as F
import ext as ext


class Net(nn.Module):

    def __init__(self):
        super(Net, self).__init__()
        # 1 input image channel, 6 output channels, 3x3 square convolution
        # kernel
        self.conv1 = nn.Conv2d(1, 6, 3)
        self.conv2 = nn.Conv2d(6, 16, 3)
        # an affine operation: y = Wx + b
        self.fc1 = nn.Linear(16 * 6 * 6, 120)  # 6*6 from image dimension
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

    def forward(self, x):
        # Max pooling over a (2, 2) window
        x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
        # If the size is a square you can only specify a single number
        x = F.max_pool2d(F.relu(self.conv2(x)), 2)
        x = x.view(-1, self.num_flat_features(x))
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

    def num_flat_features(self, x):
        size = x.size()[1:]  # all dimensions except the batch dimension
        num_features = 1
        for s in size:
            num_features *= s
        return num_features


if __name__ == "__main__":
    net = Net()
    x = torch.randn(1, 1, 32, 32)
    ext.forw(net, x)
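For reference, the `16 * 6 * 6` input size of `fc1` in test.py can be checked by hand. The sketch below assumes the 32x32 input used above and the default `nn.Conv2d` settings (stride 1, no padding) and default `F.max_pool2d` behaviour (stride equal to the kernel size, floor rounding). The helper names `conv_out` and `pool_out` are made up for illustration and are not part of PyTorch.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a square convolution (hypothetical helper)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output side length of max pooling with stride == kernel, floor rounding."""
    return (size - kernel) // kernel + 1


side = 32                  # input image is 32x32
side = conv_out(side, 3)   # conv1, 3x3 kernel
side = pool_out(side, 2)   # first 2x2 max pool
side = conv_out(side, 3)   # conv2, 3x3 kernel
side = pool_out(side, 2)   # second 2x2 max pool

# conv2 has 16 output channels, so the flattened feature count is:
flat_features = 16 * side * side
print(side, flat_features)
```

With these assumptions the spatial size shrinks 32, 30, 15, 13, 6, which is where the `6*6` in the `fc1` comment comes from.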

Running instructions:

- python setup.py install

- python test.py

Issue:

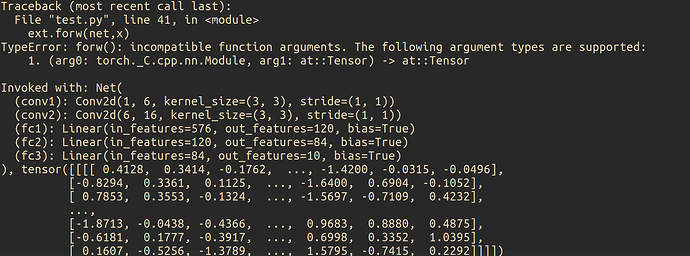

- The C++ API does not recognize python’s custom network’s type as seen below:

Other Details:

- System: Ubuntu 18.04

- Environment: virtualenv with Python 3.6

- PyTorch version: 1.5.1 (stable)

I know we can use TorchScript (torch.jit.script), but I feel like torch::nn::Module has many capabilities that torch::jit::script::Module does not have. Is there any way to extract all the information from a torch.nn.Module into a torch::nn::Module? Thanks in advance for all your feedback, guys!
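For reference, the TorchScript route mentioned above looks roughly like the following on the Python side. This is only a minimal sketch: the small `nn.Sequential` model is a hypothetical stand-in rather than the `Net` from test.py, and an in-memory buffer is used instead of a file so the example is self-contained.

```python
import io

import torch
import torch.nn as nn

# Hypothetical stand-in model; any scriptable nn.Module could be used instead.
model = nn.Sequential(nn.Linear(4, 3), nn.ReLU())
model.eval()

# Compile the Python module to TorchScript.
scripted = torch.jit.script(model)

# Serialize and reload it; a file path works in place of the buffer.
buffer = io.BytesIO()
torch.jit.save(scripted, buffer)
buffer.seek(0)
loaded = torch.jit.load(buffer)

# The reloaded module should reproduce the eager-mode output.
x = torch.randn(2, 4)
with torch.no_grad():
    expected = model(x)
    actual = loaded(x)
print(torch.allclose(expected, actual))
```

When the module is saved to a file instead, the same archive is what `torch::jit::load` reads on the C++ side, which yields a script module rather than a `torch::nn::Module`.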