Hello!

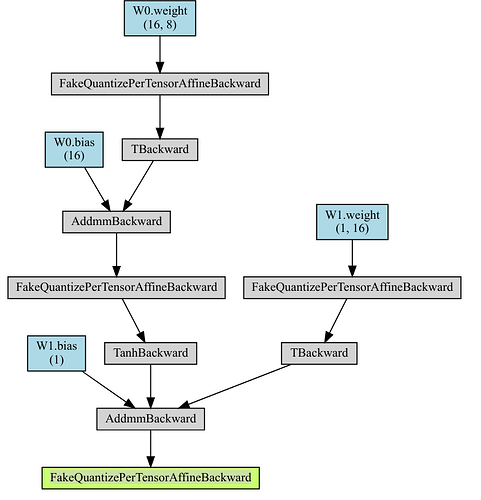

I can't figure out where my error is. In the configuration I specify `per_tensor_symmetric` fake quantization, but when I display the graph, it shows a `FakeQuantizePerTensorAffineBackward` node.

What am I doing wrong?

Thank you in advance!

```python
import torch
from torch import nn
from torchviz import make_dot

# Small MLP: 8 -> 16 -> tanh -> 1
model = nn.Sequential()
model.add_module('W0', nn.Linear(8, 16))
model.add_module('tanh', nn.Tanh())
model.add_module('W1', nn.Linear(16, 1))

# Symmetric per-tensor fake quantization:
# activations as quint8 in [0, 255], weights as qint8 in [-128, 127]
model.qconfig = torch.quantization.QConfig(
    activation=torch.quantization.FakeQuantize.with_args(
        observer=torch.quantization.MinMaxObserver,
        quant_min=0,
        quant_max=255,
        qscheme=torch.per_tensor_symmetric,
        dtype=torch.quint8,
        reduce_range=False,
    ),
    weight=torch.quantization.FakeQuantize.with_args(
        observer=torch.quantization.MinMaxObserver,
        quant_min=-128,
        quant_max=127,
        dtype=torch.qint8,
        qscheme=torch.per_tensor_symmetric,
        reduce_range=False,
    ),
)

# Swap in QAT modules and insert fake-quantize modules (model must be in training mode)
torch.quantization.prepare_qat(model, inplace=True)

x = torch.randn(1, 8)

# Draw the autograd graph of one forward pass
make_dot(model(x), params=dict(model.named_parameters()))
```
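For reference, symmetric per-tensor quantization uses the same quantize/dequantize formula as the affine scheme, q = clamp(round(x / scale) + zero_point, quant_min, quant_max) and x̂ = (q - zero_point) * scale; only the way `scale` and `zero_point` are chosen differs (symmetric fixes `zero_point` at the midpoint of the integer range and derives `scale` from max |x|). As far as I can tell, PyTorch's `FakeQuantize` calls the same per-tensor affine op for both qschemes, which would explain the node name, but treat that as an assumption. The plain-Python sketch below illustrates only the math; the helpers `affine_qparams`, `symmetric_qparams`, and `fake_quantize` are hypothetical and are not PyTorch's implementation.

```python
# Minimal pure-Python sketch of per-tensor fake quantization (quantize, then
# dequantize). Hypothetical helper names; NOT PyTorch's actual code.

def affine_qparams(values, qmin, qmax):
    """Affine scheme: map [min, max] (widened to include 0) onto [qmin, qmax]."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 if all zeros
    zero_point = qmin - round(lo / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def symmetric_qparams(values, qmin, qmax):
    """Symmetric scheme: range is [-m, m] with m = max |x|."""
    m = max(abs(v) for v in values)
    scale = m / ((qmax - qmin) / 2) or 1.0
    # Midpoint of the integer range (this sketch's choice):
    # 0 for [-128, 127], 128 for [0, 255]
    zero_point = (qmin + qmax + 1) // 2
    return scale, zero_point


def fake_quantize(values, scale, zero_point, qmin, qmax):
    """One formula for both schemes: q = clamp(round(x/s) + z), x_hat = (q - z) * s."""
    out = []
    for x in values:
        # Python's round() rounds half to even
        q = max(qmin, min(qmax, round(x / scale) + zero_point))
        out.append((q - zero_point) * scale)
    return out


if __name__ == "__main__":
    x = [-1.0, -0.25, 0.5, 2.0]
    s, z = symmetric_qparams(x, -128, 127)
    print(z)  # 0
    print(fake_quantize(x, s, z, -128, 127))
```

With these helpers, the symmetric qint8 and quint8 settings give identical fake-quantized values: shifting `zero_point` by 128 shifts `q` by the same amount, and the shift cancels when dequantizing.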