Hello.

My question is about image reconstruction, perceptual loss, and normalization.

It is a little long, but I think it is interesting if you work on image reconstruction, perceptual loss, or feature extraction.

I am building an image restoration model.

The model restores the original image from a damaged image.

[damaged image]

[original image]

To do this well, I decided to use a perceptual loss.

I use PyTorch’s vgg19 as the feature extractor.

I normalize the input images from [0:255] to [-1:1] for my model, because I am using the tanh() activation function.

PyTorch’s vgg19 model expects images in [0:1].

So I rescale from [-1:1] to [0:1] just before computing the perceptual loss.
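As a minimal sketch of the two rescaling steps described above (the function names `to_model_range` and `to_vgg_range` are hypothetical and not taken from the linked code), the arithmetic is roughly:

```python
def to_model_range(x):
    """Map pixel values from [0, 255] to [-1, 1], matching a tanh() output."""
    return x / 127.5 - 1.0


def to_vgg_range(x):
    """Map values from [-1, 1] to [0, 1] before the vgg19 feature extractor."""
    return (x + 1.0) / 2.0


# Plain floats are used here; the same elementwise arithmetic
# should also apply unchanged to a torch.Tensor.
print(to_model_range(0.0), to_model_range(255.0))  # range endpoints
print(to_vgg_range(-1.0), to_vgg_range(1.0))       # range endpoints
```

Both functions return a new value rather than modifying their input, so in this sketch it makes no difference whether they are called inside or outside an nn.Module.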

But I found something strange in this part.

I observed that the training results differed depending on where the normalization was performed.

The code is at the link below.

I’ve marked the positions (1) and (2) in it.

(1) and (2) are the two normalization positions.

I found that the model’s results differ depending on whether normalization is done at (1) or at (2).

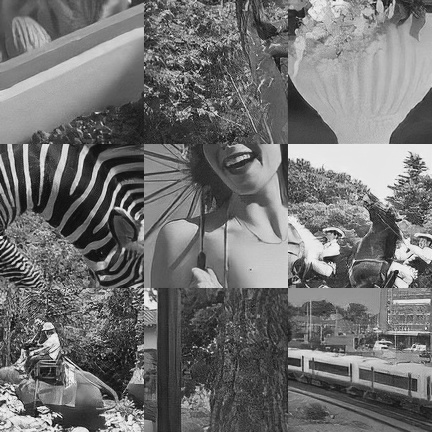

This is the result with normalization at position (1), after sufficient training.

The picture looks unusually bright…


This is the result with normalization at position (2), after sufficient training.

This result is closer to the original than the result from (1).

What is the difference between (1) and (2)?

In (1), normalization happens inside the nn.Module.

In (2), normalization happens outside the nn.Module.

Could this be the reason?

Thank you.