I have a basic classification model:

class BNN(nn.Sequential):

def __init__(self):

super().__init__()

self.add_module("Linear", nn.Linear(len(columns), 40))

self.add_module("ReLU", nn.ReLU(inplace=True))

self.add_module("Linear2", nn.Linear(40, 1))

self.add_module("Sigmoid", nn.Sigmoid())

and if i train and then evaluate the model with data that I have applied the sklearn preprocessor to:

scaler = preprocessing.StandardScaler()

train_scaled = scaler.fit_transform(train[columns])

test_scaled = scaler.transform(test[columns])

evaluate_scaled = scaler.transform(evaluate[columns])

the performance is fine / great.

However if I turn the model into:

class BNN(nn.Sequential):

def __init__(self):

super().__init__()

self.add_module("Norm", nn.BatchNorm1d(len(columns))) # Layer in question

self.add_module("Linear", nn.Linear(len(columns), 40))

self.add_module("ReLU", nn.ReLU(inplace=True))

self.add_module("Linear2", nn.Linear(40, 1))

self.add_module("Sigmoid", nn.Sigmoid())

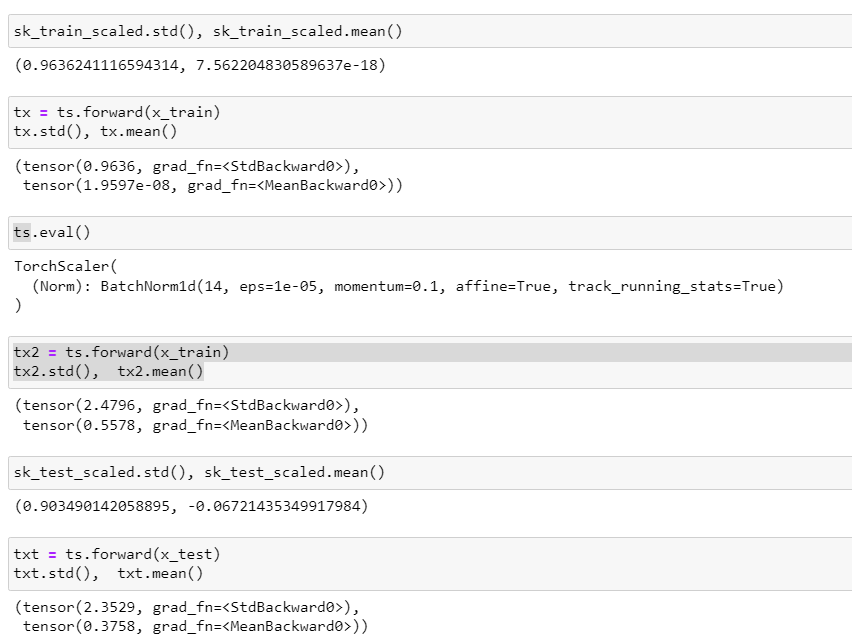

and don’t preprocess the data, the performance degrades very significantly.

Is this possible because the batchedNorm only sees batches and would be expected to under perform (a bit) or am I using it wrong? Do i need to do something special because I have balanced training data (upsampled) but imbalanced test and evaluation data (1% positive class)

How would a network that just learned the BatchNorm1d layer look?