I called `torch.set_num_threads(8)` to use all of my CPU cores.

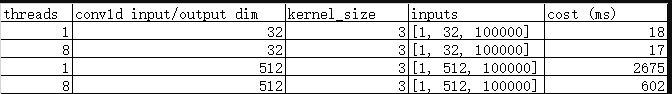

The results show that for a large conv, 8 threads are clearly faster than a single thread. For a small conv, however, there is no significant difference in speed between 8 threads and a single thread.

Please check this table
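For anyone who wants to reproduce the comparison, here is a minimal sketch of how the two thread counts could be timed side by side. The helper names `median_ms` and `sweep_threads`, the sequence lengths, and the use of `torch.no_grad()` are my own assumptions for illustration and are not taken from the measurements above.

```python
import statistics
import time


def median_ms(fn, warmup=3, iters=20):
    """Return the median wall-clock time of fn() in milliseconds."""
    for _ in range(warmup):  # warm-up calls are not timed
        fn()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(samples)


def sweep_threads(seq_lengths=(1_000, 100_000), thread_counts=(1, 8), dim=32):
    """Time one Conv1d forward pass for every (seq, threads) pair.

    The default sizes are hypothetical stand-ins for "small" and "big".
    """
    import torch  # imported here so median_ms stays usable without PyTorch

    conv = torch.nn.Conv1d(dim, dim, kernel_size=3).eval()
    results = {}
    for seq in seq_lengths:
        x = torch.rand(1, dim, seq)
        for n in thread_counts:
            torch.set_num_threads(n)
            with torch.no_grad():  # assumption: inference only
                results[(seq, n)] = median_ms(lambda: conv(x))
    return results
```

Calling `sweep_threads()` returns a dict keyed by `(seq, threads)` with the median time in milliseconds, so the small and big cases can be compared under the same conditions. Taking the median after a few warm-up calls is meant to reduce the influence of one-off setup costs.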

MKL-DNN is enabled. Below is the verbose output from a run:

    mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_nchw out:f32_nChw8c,num:1,1x32x1x107718,2.18091
    mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_oihw out:f32_OIhw8i8o,num:1,32x32x1x1,0.00195312
    mkldnn_verbose,exec,convolution,jit_1x1:avx2,forward_training,fsrc:nChw8c fwei:OIhw8i8o fbia:x fdst:nChw8c,alg:convolution_direct,mb1_ic32oc32_ih1oh1kh1sh1dh0ph0_iw107718ow107718kw1sw1dw0pw0,3.87793
    mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_nChw8c out:f32_nchw,num:1,1x32x1x107718,4.69116
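To make the log easier to read, here is a small sketch that sums the time per primitive type. It assumes the last comma-separated field of each line is the execution time in milliseconds; the function name `time_by_primitive` is made up for this example.

```python
from collections import defaultdict

# The four log lines quoted above, copied verbatim.
LOG = """\
mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_nchw out:f32_nChw8c,num:1,1x32x1x107718,2.18091
mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_oihw out:f32_OIhw8i8o,num:1,32x32x1x1,0.00195312
mkldnn_verbose,exec,convolution,jit_1x1:avx2,forward_training,fsrc:nChw8c fwei:OIhw8i8o fbia:x fdst:nChw8c,alg:convolution_direct,mb1_ic32oc32_ih1oh1kh1sh1dh0ph0_iw107718ow107718kw1sw1dw0pw0,3.87793
mkldnn_verbose,exec,reorder,jit:uni,undef,in:f32_nChw8c out:f32_nchw,num:1,1x32x1x107718,4.69116
"""


def time_by_primitive(log_text):
    """Sum the last field (assumed to be milliseconds) per primitive type."""
    totals = defaultdict(float)
    for line in log_text.splitlines():
        fields = line.strip().split(",")
        # Only "mkldnn_verbose,exec,<primitive>,...,<time>" lines are counted.
        if len(fields) < 4 or fields[0] != "mkldnn_verbose" or fields[1] != "exec":
            continue
        totals[fields[2]] += float(fields[-1])
    return dict(totals)


totals = time_by_primitive(LOG)
for kind, ms in sorted(totals.items()):
    print(f"{kind:12s} {ms:8.3f} ms")
```

For this run, the three `reorder` entries add up to about 6.87 ms, while the `convolution` entry itself is about 3.88 ms.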

So why are 8 threads not noticeably faster than 1 thread for the small conv? And how can I improve the speed of the small conv?

Thanks a lot!

My code:

    import time

    import torch
    import torch.nn as nn


    class MyConv(nn.Module):
        """Thin wrapper around nn.Conv1d with normally initialized weights."""

        def __init__(self, *args, **kwargs):
            super().__init__()
            self.cell = nn.Conv1d(*args, **kwargs)
            self.cell.weight.data.normal_(0.0, 0.02)

        def forward(self, x):
            return self.cell(x)


    def main():
        # print(*torch.__config__.show().split("\n"), sep="\n")
        torch.set_num_threads(1)  # set to 8 for the multi-threaded run

        dim = 32
        kernels = 3
        seq = 100000

        MyCell = MyConv(dim, dim, kernel_size=kernels, stride=1)
        MyCell.eval()

        # Pre-generate all inputs so random generation is not timed.
        inputs = []
        n_iter = 1000  # renamed from `iter` to avoid shadowing the builtin
        for i in range(n_iter):
            inputs.append(torch.rand(1, dim, seq))

        start = time.time() * 1000
        for i in range(n_iter):
            print(i)
            y = MyCell(inputs[i])
            # print(y)
        end = time.time() * 1000

        print('cost %d ms per iter\n' % ((end - start) / n_iter))


    if __name__ == "__main__":
        main()
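As a rough sanity check on the sizes in the script above, here is a back-of-the-envelope sketch of the work per forward pass and the memory used by the pre-generated inputs. It assumes float32 tensors (the default for `torch.rand`), ignores the bias add, and uses the output-length formula from the `nn.Conv1d` documentation. The helper name `conv1d_out_len` and the variable `n_inputs` (standing in for the iteration count) are introduced only for this example.

```python
def conv1d_out_len(seq, kernel, stride=1, padding=0, dilation=1):
    """Output length of a 1-D convolution (formula from the nn.Conv1d docs)."""
    return (seq + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# Values taken from the script above.
dim, kernels, seq, n_inputs = 32, 3, 100_000, 1000

out_len = conv1d_out_len(seq, kernels)

# Multiply-accumulates for batch size 1: in_channels * out_channels * kernel * out_len.
macs = dim * dim * kernels * out_len
flops = 2 * macs  # one multiply plus one add per MAC

# Assumption: float32, i.e. 4 bytes per element.
input_bytes = dim * seq * 4
total_input_bytes = n_inputs * input_bytes

print(f"output length      : {out_len}")
print(f"FLOPs per forward  : {flops / 1e9:.3f} GFLOP")
print(f"one input tensor   : {input_bytes / 1e6:.1f} MB")
print(f"all inputs in list : {total_input_bytes / 1e9:.1f} GB")
```

Under these assumptions each forward pass is roughly 0.6 GFLOP on a 12.8 MB input, and the list of 1000 pre-generated inputs takes about 12.8 GB of memory, which may be worth keeping in mind when interpreting the timings.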