I am trying to train a joint model for a crowd counting network. I am computing two losses: `loss_predict`, which depends on the counter output and the refiner output, and `loss_refine`, which depends on the refiner output and the ground truth. I want to train the model so that the Counter is updated using `loss_predict` only, while the Refiner is updated using a weighted sum of `loss_predict` and `loss_refine`. So I am trying to train them one by one, first the counter and then the refiner, but I get the following error message:

> Trying to backward through the graph a second time (or directly access saved tensors after they have already been freed). Saved intermediate values of the graph are freed when you call .backward() or autograd.grad(). Specify retain_graph=True if you need to backward through the graph a second time or if you need to access saved tensors after calling backward.

I also tried using `retain_graph=True`, but then I get a different error saying that one of the variables needed for gradient computation has been modified.
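For reference, here is a minimal hypothetical reproduction of this pattern. The tiny `nn.Linear` stand-ins for the counter and refiner, the MSE-style losses, and the 0.5 weight are assumptions, not the original code. `opt_counter.step()` updates the counter weights in place, so a second backward pass through the same graph, even with `retain_graph=True`, fails autograd's check that saved tensors have not been modified:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for the real counter and refiner networks.
counter = nn.Sequential(nn.Linear(4, 4), nn.ReLU(), nn.Linear(4, 4))
refiner = nn.Sequential(nn.Linear(4, 4), nn.ReLU(), nn.Linear(4, 1))
opt_counter = torch.optim.SGD(counter.parameters(), lr=0.1)
opt_refiner = torch.optim.SGD(refiner.parameters(), lr=0.1)

x = torch.randn(8, 4)   # hypothetical input batch
gt = torch.randn(8, 1)  # hypothetical ground-truth counts

density = counter(x)
refined = refiner(density)
# Hypothetical losses with the same dependencies as described above.
loss_predict = (density.sum(dim=1, keepdim=True) - refined).pow(2).mean()
loss_refine = (refined - gt).pow(2).mean()

# Step 1: update the counter with loss_predict only.
opt_counter.zero_grad()
loss_predict.backward(retain_graph=True)  # keep the graph for a second pass
opt_counter.step()  # in-place update of weights that the graph saved

# Step 2: backward through the SAME graph again for the refiner.
opt_refiner.zero_grad()
error = None
try:
    (loss_predict + 0.5 * loss_refine).backward()
except RuntimeError as e:
    error = str(e)  # "... modified by an inplace operation ..."
print(error)
```

The second backward has to pass through the counter's layers again, and those layers saved weights that the counter's optimizer step has since overwritten.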

Any suggestions on how to resolve this? Any help will be appreciated.
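One possible way to express the intended update rule is to compute both sets of gradients with `torch.autograd.grad` before either optimizer steps, assign them to `.grad`, and only then call `step()` on both optimizers. This is a sketch, not the original training code. The stand-in modules, the losses, and the weight `alpha` are all hypothetical:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-ins for the counter and refiner networks.
counter = nn.Sequential(nn.Linear(4, 4), nn.ReLU(), nn.Linear(4, 4))
refiner = nn.Sequential(nn.Linear(4, 4), nn.ReLU(), nn.Linear(4, 1))
opt_counter = torch.optim.SGD(counter.parameters(), lr=0.1)
opt_refiner = torch.optim.SGD(refiner.parameters(), lr=0.1)
alpha = 0.5  # hypothetical weight on loss_refine


def train_step(x, gt):
    density = counter(x)
    refined = refiner(density)
    # Hypothetical losses with the dependencies described in the question.
    loss_predict = (density.sum(dim=1, keepdim=True) - refined).pow(2).mean()
    loss_refine = (refined - gt).pow(2).mean()
    loss_total = loss_predict + alpha * loss_refine

    counter_params = list(counter.parameters())
    refiner_params = list(refiner.parameters())

    # Compute all gradients BEFORE any optimizer modifies the weights.
    # Counter: gradient of loss_predict only (graph kept for the next call).
    counter_grads = torch.autograd.grad(loss_predict, counter_params, retain_graph=True)
    # Refiner: gradient of the weighted sum (graph freed afterwards).
    refiner_grads = torch.autograd.grad(loss_total, refiner_params)

    for p, g in zip(counter_params, counter_grads):
        p.grad = g
    for p, g in zip(refiner_params, refiner_grads):
        p.grad = g

    # Both in-place updates happen only after backward is finished.
    opt_counter.step()
    opt_refiner.step()
    return loss_predict.item(), loss_refine.item()
```

No parameter is modified until every gradient has been computed, so the graph is never traversed after an in-place update. Each call builds a fresh graph from a new forward pass.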

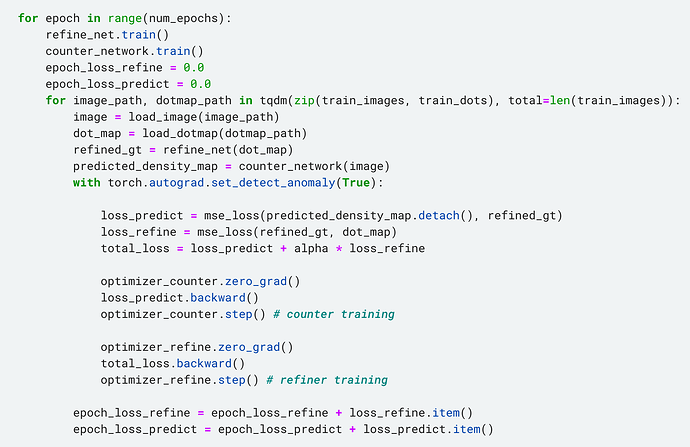

Training code: