Dear experts,

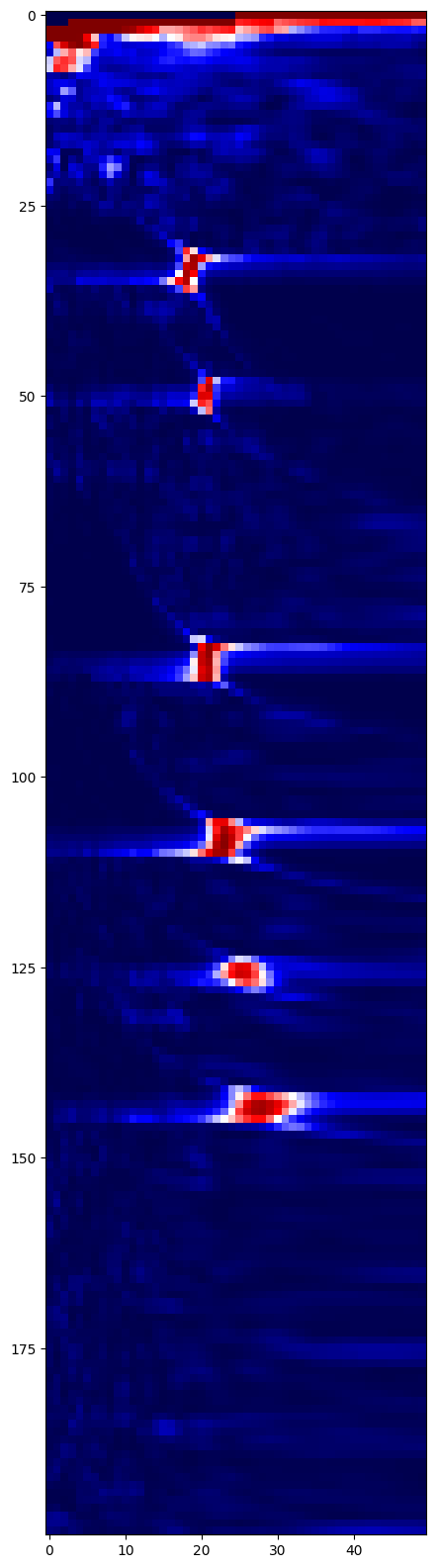

I’m trying to train a simple CNN in PyTorch that maps input data (velocity spectrum panels; sgy files) to coordinates (velocity structure profiles; csv file). To check whether the training works at all, I ran it on 10 samples with their labels.

But it yields a strange result:

    Epoch 1
    Test Error:
    Accuracy: 0.0%, Avg loss: nan

    Epoch 2
    loss: nan [ 1/ 10]
    Test Error:
    Accuracy: 0.0%, Avg loss: nan

    …

    Epoch 10
    loss: nan [ 1/ 10]
    Test Error:
    Accuracy: 0.0%, Avg loss: nan

    Done!

And if I change “batch_size=1” to “batch_size=3”, it will show the following error:

    Epoch 1
    loss: nan [ 3/ 10]

    RuntimeError Traceback (most recent call last)
    in <cell line: 144>()
    145 print(f"Epoch {t+1}\n-------------------------------")
    146 train_loop(train_dataloader, model, loss_fn, optimizer)
    → 147 test_loop(test_dataloader, model, loss_fn)
    148 print("Done!")

    in test_loop(test_dataloader, model, loss_fn)
    135 pred = model(data)
    136 test_loss += loss_fn(pred, label).item()
    → 137 correct += (pred.argmax(1) == label).type(torch.float).sum().item()
    138
    139 test_loss /= num_batches

    RuntimeError: The size of tensor a (3) must match the size of tensor b (40) at non-singleton dimension 1

How can I change my code to make the training work? And how can I make it work regardless of the value passed as “batch_size=”?
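The shape error seen with batch_size=3 can be reproduced in isolation. The sketch below assumes, based on the shapes printed in this post, that the model output is (batch, 40) and that a label batch is also (batch, 40); the random tensors are placeholders, not the real data.

```python
import torch

batch_size = 3
pred = torch.randn(batch_size, 40)   # stand-in for the model output, shape (3, 40)
label = torch.randn(batch_size, 40)  # stand-in for a label batch, shape (3, 40)

# argmax(1) collapses the 40 outputs to one index per sample: shape (3,)
idx = pred.argmax(1)

try:
    # (3,) against (3, 40): the trailing sizes 3 and 40 cannot be broadcast
    _ = idx == label
    mismatch = False
    message = ""
except RuntimeError as err:
    mismatch = True
    message = str(err)

# With batch_size=1 the shapes are (1,) and (1, 40), which do broadcast,
# so the comparison runs without an error even though it compares an
# index against 40 label values.
silent_shape = (torch.randn(1, 40).argmax(1) == torch.randn(1, 40)).shape
```

This suggests the comparison only appeared to work with batch_size=1 because of broadcasting. If the labels are continuous values rather than class indices, an argmax-based accuracy may not be a meaningful metric here; that reading is a guess from the label printout, not something confirmed in the post.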

The data and label look like this:

    print(data)
    print(data.shape)

    tensor([[[0.0000, 0.0000, 1.0000, …, 0.0184, 0.0348, 0.0492],
             [0.0000, 0.0000, 1.0000, …, 0.0442, 0.0363, 0.0250],
             [0.0000, 0.0000, 1.0000, …, 0.0564, 0.0388, 0.0295],
             …,
             [1.0000, 0.9606, 0.8394, …, 0.0093, 0.0152, 0.0153],
             [1.0000, 0.9524, 0.8419, …, 0.0091, 0.0151, 0.0160],
             [1.0000, 0.9305, 0.8363, …, 0.0093, 0.0146, 0.0157]]])
    torch.Size([1, 50, 200])

    print(label)
    print(label.shape)

    tensor([ 178., 1878.,  822., 1814., 1375., 2162., 1669., 2304., 2065., 2736.,
            2528., 2780., 2836., 3008., 3396., 3490., 4013., 3518.,   nan,   nan,
              nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan,
              nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan,   nan],
           dtype=torch.float64)
    torch.Size([40])
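Because the printed label contains nan padding, a loss computed over all 40 entries will itself be nan. The following is a minimal sketch of one way to avoid that, assuming the task is a regression and that the padded entries should simply be ignored. Both points are assumptions, and `masked_mse` is a hypothetical helper introduced here, not a PyTorch function.

```python
import torch
import torch.nn.functional as F

def masked_mse(pred: torch.Tensor, target: torch.Tensor) -> torch.Tensor:
    """Mean squared error over the entries of target that are not NaN."""
    mask = ~torch.isnan(target)
    # Replace NaN with 0 so that no NaN enters the computation graph;
    # those positions are excluded by the mask below. The cast also
    # aligns the float64 labels with the float32 predictions.
    safe_target = torch.nan_to_num(target, nan=0.0).to(pred.dtype)
    sq_err = (pred - safe_target) ** 2
    return sq_err[mask].mean()

# Toy stand-ins for a prediction batch and a NaN-padded label batch
pred = torch.zeros(2, 4, requires_grad=True)
target = torch.tensor(
    [[1.0, 2.0, float("nan"), float("nan")],
     [3.0, float("nan"), float("nan"), float("nan")]],
    dtype=torch.float64,
)

plain = F.mse_loss(pred, target.float())  # NaN, because the target has NaN
loss = masked_mse(pred, target)           # averages only the 3 valid entries
loss.backward()                           # gradients stay finite
```

Note that a sample whose label is entirely nan would still give a nan mean, so such samples would need to be filtered out beforehand.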

The full code is:

    # custom dataset <- revised from "https://pytorch.org/tutorials/beginner/data_loading_tutorial.html"
    import torch
    from torch.utils.data import Dataset
    import pandas as pd
    import os
    import segyio
    import numpy as np

    class cvspanel_dataset(Dataset):
        def __init__(self, csv_file, root_dir, transform=None):
            self.dv_label = pd.read_csv(csv_file)
            self.root_dir = root_dir
            self.transform = transform

        def __len__(self):
            return len(self.dv_label)

        def __getitem__(self, idx):
            if torch.is_tensor(idx):
                idx = idx.tolist()

            data_name = os.path.join(self.root_dir,
                                     self.dv_label.iloc[idx, 0])
            gth = segyio.open(data_name, ignore_geometry=True)
            data = gth.trace.raw[:]
            data = torch.tensor(data[:, :200])
            data = data.unsqueeze(0)

            arr = self.dv_label.iloc[idx, 1:]
            arr = np.asarray(arr)
            label = arr.astype('float').reshape(-1, 2)
            label = torch.tensor(label)
            label = label.view([-1, 1])
            label = label.squeeze()

            if self.transform:
                data = self.transform(data)
            if self.transform:
                label = self.transform(label)

            return data, label

    train_dataset = cvspanel_dataset(csv_file='/content/drive/MyDrive/Colab Notebooks/research_data/synthetic_1D/d-v_label.csv',
                                     root_dir='/content/drive/MyDrive/Colab Notebooks/research_data/synthetic_1D/sgy_cvs_panel',
                                     transform=None)
    test_dataset = cvspanel_dataset(csv_file='/content/drive/MyDrive/Colab Notebooks/research_data/synthetic_1D/d-v_label.csv',
                                    root_dir='/content/drive/MyDrive/Colab Notebooks/research_data/synthetic_1D/sgy_cvs_panel',
                                    transform=None)

    # dataloader
    batch_size = 1
    train_dataloader = torch.utils.data.DataLoader(train_dataset,
                                                   batch_size)
    test_dataloader = torch.utils.data.DataLoader(test_dataset,
                                                  batch_size)

    # model building
    import torch
    import torch.nn as nn

    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            self.layer1 = nn.Sequential(
                nn.Conv2d(in_channels=1, out_channels=32, kernel_size=17, stride=1, padding=3),
                nn.BatchNorm2d(32),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=10, stride=5, padding=0)
            )
            self.layer2 = nn.Sequential(
                nn.Conv2d(in_channels=32, out_channels=64, kernel_size=6, stride=1, padding=2),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(kernel_size=2, stride=3, padding=1)
            )
            self.fc1 = nn.Linear(in_features=64*3*13, out_features=512)
            self.drop = nn.Dropout(0.25)
            self.fc2 = nn.Linear(in_features=512, out_features=128)
            self.fc3 = nn.Linear(in_features=128, out_features=40)

        def forward(self, x):
            out = self.layer1(x)
            out = self.layer2(out)
            out1 = out.view(out.size(0), -1)
            out = self.fc1(out1)
            out = self.drop(out)
            out = self.fc2(out)
            out = self.fc3(out)
            return out

    # hyperparameter
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
    model = CNN()
    model.to(device)
    learning_rate = 0.001
    loss_fn = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=learning_rate)

    # Training <- revised from "https://pytorch.org/tutorials/beginner/basics/optimization_tutorial.html"
    def train_loop(train_dataloader, model, loss_fn, optimizer):
        size = len(train_dataloader.dataset)
        model.train()
        for batch, (data, label) in enumerate(train_dataloader):
            # Compute prediction and loss
            pred = model(data)
            loss = loss_fn(pred, label)

            # Backpropagation
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()

            if batch % 100 == 0:
                loss, current = loss.item(), (batch + 1) * len(data)
                print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

    def test_loop(test_dataloader, model, loss_fn):
        model.eval()
        size = len(test_dataloader.dataset)
        num_batches = len(test_dataloader)
        test_loss, correct = 0, 0

        with torch.no_grad():
            for data, label in test_dataloader:
                pred = model(data)
                test_loss += loss_fn(pred, label).item()
                correct += (pred.argmax(1) == label).type(torch.float).sum().item()

        test_loss /= num_batches
        correct /= size
        print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

    epochs = 10
    for t in range(epochs):
        print(f"Epoch {t+1}\n-------------------------------")
        train_loop(train_dataloader, model, loss_fn, optimizer)
        test_loop(test_dataloader, model, loss_fn)
    print("Done!")
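As a sanity check on the network itself, a dummy forward pass can confirm that the convolutional stack yields 64*3*13 features for a 1x50x200 panel and that the batch dimension passes through unchanged. The sketch copies only the two convolutional blocks from the model above; `features` and `shapes` are names introduced here for illustration.

```python
import torch
import torch.nn as nn

# Same layer settings as layer1 and layer2 in the CNN class
features = nn.Sequential(
    nn.Conv2d(1, 32, kernel_size=17, stride=1, padding=3),
    nn.BatchNorm2d(32),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=10, stride=5, padding=0),
    nn.Conv2d(32, 64, kernel_size=6, stride=1, padding=2),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2, stride=3, padding=1),
)
features.eval()  # use running statistics in BatchNorm for the check

shapes = {}
with torch.no_grad():
    for b in (1, 3, 7):
        # Zero-filled panels with the shape printed in the post, batched
        out = features(torch.zeros(b, 1, 50, 200))
        shapes[b] = tuple(out.shape)

# Number of inputs fc1 needs after flattening one sample
flat_features = shapes[1][1] * shapes[1][2] * shapes[1][3]
```

If this holds, the layers themselves should accept any batch size, which would point to the labels and the loss or metric computation rather than the architecture as the place to look.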

I’m looking forward to your help.

Thank you in advance.