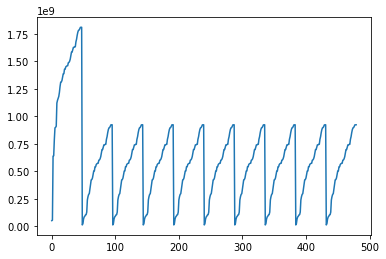

I am trying to plot loss against epoch to decide on a good number of epochs, but the graph I get looks like the one above and I don’t know how to fix it.

Here is my code:

import torch
import numpy as np
from torch import nn
from torch import optim
import random
from torch.utils.data import TensorDataset
from torch.utils.data import DataLoader
import pandas as pd
import matplotlib.pyplot as plt

# Creating Simple Data
def f(x):
    y = (x**2) + 2
    return y

X = []
for i in range(60):
    X.append(random.randint(0,500))

y = []
for x in X:
    y.append(f(x))

X = np.array(X)
y = np.array(y)

split = int(0.8*len(X))
X_train, X_test = X[:split], X[split:]
y_train, y_test = y[:split], y[split:]

X_train, X_test = torch.Tensor(X_train), torch.Tensor(X_test)
y_train, y_test = torch.Tensor(y_train), torch.Tensor(y_test)

train_dataset = TensorDataset(X_train, y_train)
train_dataloader = DataLoader(train_dataset, batch_size = 1)

test_dataset = TensorDataset(X_test, y_test)
test_dataloader = DataLoader(test_dataset, batch_size = 5)

device = 'cuda' if torch.cuda.is_available() else 'cpu'

input_dim = 1
hidden_dim = 1
output_dim = 1

class NeuralNetwork(nn.Module):
    def __init__(self, input_dim, hidden_dim, output_dim):
        super(NeuralNetwork, self).__init__()
        # Define and instantiate layers
        self.hidden1 = nn.Linear(input_dim, hidden_dim)
        self.hidden_activation = nn.ReLU()
        self.out = nn.Linear(hidden_dim, output_dim)

    # Defines the order in which inputs pass through the layers
    def forward(self, x):
        y = self.hidden1(x)
        y = self.hidden_activation(x)
        # no activation function for output of a regression problem
        y = self.out(x)
        return y

# Create the model and move it to the device
model = NeuralNetwork(input_dim, hidden_dim, output_dim).to(device)

learning_rate = 1.e-6
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

epochs = 10
loss_values = []

for epoch in range(epochs):
    ##### Training Data #####
    model.train()
    train_loss = 0

    for i, (X, y) in enumerate(train_dataloader):
        X, y = X.to(device), y.to(device)
        y_pred = model(X)
        loss = loss_fn(y_pred, y)
        train_loss += loss.item()

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

    # Average training loss for this epoch
    loss_values.append(train_loss / len(train_dataset))

plt.plot(loss_values)
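
Two things in the code above may be worth checking before reading much into the plot. First, `forward` passes `x` (not `y`) to both `hidden_activation` and `out`, so the hidden layer's output is discarded; this raises no shape error only because `input_dim` and `hidden_dim` are both 1. Second, the targets reach roughly 250,000 and are never scaled, so the MSE values are enormous. The sketch below is one possible variant, not a confirmed fix, since the cause of the plotted behaviour cannot be determined from the post alone. The class name `FixedNetwork`, the helper `train`, the standardization step, `hidden_dim=16`, full-batch updates, and the learning rate are all assumptions made for illustration.

```python
import torch
from torch import nn

torch.manual_seed(0)  # only to make this sketch reproducible

# Same toy target as the post: y = x^2 + 2 for integers x in [0, 500].
# Shape (60, 1) so inputs and targets match what nn.Linear returns.
x_raw = torch.randint(0, 501, (60, 1)).float()
y_raw = x_raw ** 2 + 2

# Assumption: standardize inputs and targets so the loss is of order 1.
x = (x_raw - x_raw.mean()) / x_raw.std()
y = (y_raw - y_raw.mean()) / y_raw.std()


class FixedNetwork(nn.Module):  # hypothetical name, not from the post
    def __init__(self, input_dim=1, hidden_dim=16, output_dim=1):
        super().__init__()
        self.hidden1 = nn.Linear(input_dim, hidden_dim)
        self.hidden_activation = nn.ReLU()
        self.out = nn.Linear(hidden_dim, output_dim)

    def forward(self, x):
        y = self.hidden1(x)
        y = self.hidden_activation(y)  # feed the previous layer's output
        return self.out(y)             # no activation on a regression output


def train(model, x, y, epochs=200, lr=1e-2):
    """Full-batch gradient descent; returns one loss value per epoch."""
    loss_fn = nn.MSELoss()
    optimizer = torch.optim.SGD(model.parameters(), lr=lr)
    loss_values = []
    for _ in range(epochs):
        optimizer.zero_grad()
        loss = loss_fn(model(x), y)
        loss.backward()
        optimizer.step()
        loss_values.append(loss.item())
    return loss_values


model = FixedNetwork()
loss_values = train(model, x, y)
```

The returned `loss_values` list can be passed to `plt.plot` exactly as in the original code. With standardized targets the curve is on a scale where a plateau is easier to spot, though the loss is then in standardized units rather than the original units of `y`.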