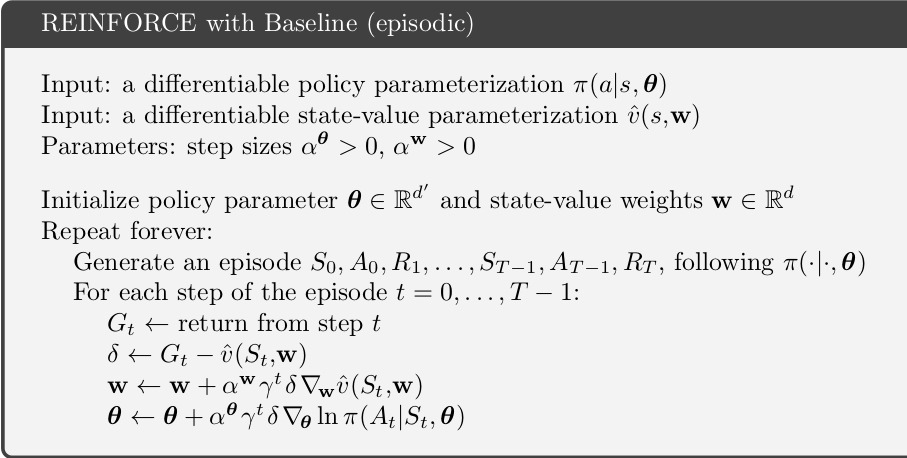

I am having trouble with the loss function corresponding to the REINFORCE with Baseline algorithm as described in Sutton and Barto book:

The last line is the update for the policy net.

Let gamma=1 for simplicity…

Now I want to construct loss function for the policy net output, so that I could backpropagate through it after playing one episode.

I am guessing it should be P_loss = - sum_over_episode(log_p*delta). The minus comes from the fact that in pytorch we minimize a loss function, but the update rule in the book tells us how to do gradient ascent for maximizing the objective.

I implemented training at each episode as follows:

# network

class MLP(nn.Module):

def __init__(self, input_dim, output_dim, hidden=256):

super().__init__()

self.fc1 = nn.Linear(input_dim, hidden)

self.fc2 = nn.Linear(hidden, hidden)

self.fc3 = nn.Linear(hidden, output_dim)

def forward(self, x):

x = F.relu(self.fc1(x))

x = F.relu(self.fc2(x))

q = F.relu(self.fc3(x))

q = F.log_softmax(q, dim=-1)

return q

def policy_loss(returns, baselines, log_p_taken):

loss = -torch.sum((returns - baselines)*log_p_taken)

return loss

when training:

for ep in range(N_ep):

...

self.optimizer_p.zero_grad()

log_P = P_net(ep_states) # P_net is an instance of MLP net declared above

log_P_taken = torch.gather(log_P, 1, ep_taken_actions.view(-1, 1)).squeeze()

P_loss = policy_loss(ep_returns, ep_baselines, log_P_taken)

P_loss.backward()

self.optimizer_p.step()

...

However, training seems to never converge with that loss, but trainin with P_loss_negative = - P_loss gives good results. Training with P_loss_negative passes cartpole_v0 and almost passes cartpole_v1, while training with P_loss always diverges.

Please help me find what is wrong. Thank you!!