I want to load the NSL-KDD dataset from this link using Python.

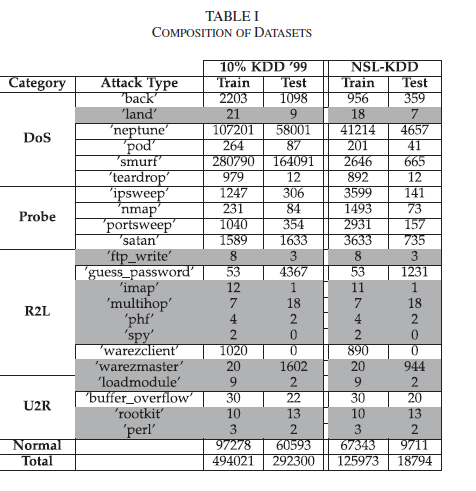

In this dataset, 22 features for the training and test data are classified into 5 separate classes (Normal, DOS, U2R, R2L, Probe).

But when I run `y_test = pd.get_dummies(y_test)`, the result is not encoded into 5 classes; instead it still shows the same 22 features. I did exactly the same thing for the training data (`target = pd.get_dummies(target)`) and got the correct result there, so I expected it to work for the test data too.
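As background, `pd.get_dummies` creates one indicator column per distinct value it finds, so the number of output columns equals the number of distinct labels in the Series. A minimal sketch with made-up toy labels (the lists below are illustrative, not taken from the dataset files):

```python
import pandas as pd

# Toy labels (hypothetical): the "test" Series still contains raw attack names
train_labels = pd.Series(["normal", "dos", "probe", "r2l", "u2r", "dos"])
test_labels = pd.Series(["normal", "dos", "apache2", "mscan"])

# get_dummies emits one column per distinct value, in sorted order
train_onehot = pd.get_dummies(train_labels)
test_onehot = pd.get_dummies(test_labels)

print(list(train_onehot.columns))  # ['dos', 'normal', 'probe', 'r2l', 'u2r']
print(list(test_onehot.columns))   # ['apache2', 'dos', 'mscan', 'normal']
```

Any label that is still a raw attack name therefore shows up as an extra column rather than being folded into one of the five classes.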

```python
import numpy as np
import pandas as pd

with open('G:/RUN_PYTHON/kddcup.names.txt', 'r') as infile:
    kdd_names = infile.readlines()
kdd_cols = [x.split(':')[0] for x in kdd_names[1:]]

# The Train+/Test+ datasets include sample difficulty rating and the attack class
kdd_cols += ['class', 'difficulty']

kdd = pd.read_csv('G:/RUN_PYTHON/KDDTrain+.txt', names=kdd_cols)
kdd_t = pd.read_csv('G:/RUN_PYTHON/KDDTest+.txt', names=kdd_cols)
#kdd = pd.read_csv('G:/RUN_PYTHON/kddcup.txt.data_10_percent_corrected', names=kdd_cols)
#kdd_t = pd.read_csv('G:/RUN_PYTHON/kddcup.testdata.unlabeled_10_percent', names=kdd_cols)

# Consult the linked references for attack categories:
# https://www.researchgate.net/post/What_are_the_attack_types_in_the_NSL-KDD_TEST_set_For_example_processtable_is_a_attack_type_in_test_set_Im_wondering_is_it_prob_DoS_R2L_U2R
# The traffic can be grouped into 5 categories: Normal, DOS, U2R, R2L, Probe
# or more coarsely into Normal vs Anomalous for the binary classification task

# Final column order: first column, one-hot protocol/service/flag values, remaining features
kdd_cols = ([kdd.columns[0]]
            + sorted(list(set(kdd.protocol_type.values)))
            + sorted(list(set(kdd.service.values)))
            + sorted(list(set(kdd.flag.values)))
            + kdd.columns[4:].tolist())

# Each non-empty line of the file is "<attack_name> <category>"
attack_map = [x.strip().split() for x in open('G:/RUN_PYTHON/training_attack_types.txt', 'r')]
attack_map = {x[0]: x[1] for x in attack_map if x}

# Here we opt for the 5-class problem
# Note: Series.replace leaves any label that is not a key of attack_map unchanged
kdd['class'] = kdd['class'].replace(attack_map)
kdd_t['class'] = kdd_t['class'].replace(attack_map)

def cat_encode(df, col):
    # Replace a categorical column with its one-hot indicator columns
    return pd.concat([df.drop(col, axis=1), pd.get_dummies(df[col].values)], axis=1)

def log_trns(df, col):
    # log(1 + x) scaling for heavy-tailed numeric columns
    return df[col].apply(np.log1p)

cat_lst = ['protocol_type', 'service', 'flag']
for col in cat_lst:
    kdd = cat_encode(kdd, col)
    kdd_t = cat_encode(kdd_t, col)

log_lst = ['duration', 'src_bytes', 'dst_bytes']
for col in log_lst:
    kdd[col] = log_trns(kdd, col)
    kdd_t[col] = log_trns(kdd_t, col)

# Give the test set exactly the training columns (missing indicators filled with 0)
kdd = kdd[kdd_cols]
for col in kdd_cols:
    if col not in kdd_t.columns:
        kdd_t[col] = 0
kdd_t = kdd_t[kdd_cols]

# Now we have used one-hot encoding and log scaling

difficulty = kdd.pop('difficulty')
target = kdd.pop('class')
y_diff = kdd_t.pop('difficulty')
y_test = kdd_t.pop('class')

target = pd.get_dummies(target)
print(target)
y_test = pd.get_dummies(y_test)
print(y_test)
```
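One way to check whether `replace(attack_map)` really reduced every label to one of the five classes is to list the values that are still outside that set. A minimal sketch; `TARGET_CLASSES` and `unmapped_labels` are hypothetical names, the lower-case class names are taken from the columns of `target`, and the toy map below is only a stand-in for the one read from `training_attack_types.txt`:

```python
import pandas as pd

# Hypothetical helper: the five lower-case classes used as the training target columns
TARGET_CLASSES = {"normal", "dos", "probe", "r2l", "u2r"}

def unmapped_labels(labels: pd.Series) -> list:
    """Return the distinct labels that are not one of the five classes."""
    return sorted(set(labels.unique()) - TARGET_CLASSES)

# Toy stand-in for attack_map; Series.replace leaves values that are not keys unchanged
toy_attack_map = {"neptune": "dos", "smurf": "dos", "satan": "probe", "guess_passwd": "r2l"}
toy_test = pd.Series(["neptune", "normal", "apache2", "satan", "mscan"])
mapped = toy_test.replace(toy_attack_map)

print(unmapped_labels(mapped))  # ['apache2', 'mscan']
```

Calling `unmapped_labels(kdd_t['class'])` after the `replace` step would list any attack names in the test file that the training map does not cover.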

The output of `target`:

```
Out[27]:
        dos  normal  probe  r2l  u2r
0         0       1      0    0    0
1         0       1      0    0    0
2         1       0      0    0    0
3         0       1      0    0    0
4         0       1      0    0    0
5         1       0      0    0    0
6         1       0      0    0    0
7         1       0      0    0    0
8         1       0      0    0    0
9         1       0      0    0    0
10        1       0      0    0    0
11        1       0      0    0    0
12        0       1      0    0    0
13        0       0      0    1    0
14        1       0      0    0    0
15        1       0      0    0    0
16        0       1      0    0    0
17        0       0      1    0    0
18        0       1      0    0    0
19        0       1      0    0    0
20        1       0      0    0    0
21        1       0      0    0    0
22        0       1      0    0    0
23        0       1      0    0    0
24        1       0      0    0    0
25        0       1      0    0    0
26        1       0      0    0    0
27        0       1      0    0    0
28        0       1      0    0    0
29        0       1      0    0    0
...     ...     ...    ...  ...  ...
125943    0       1      0    0    0
125944    0       1      0    0    0
125945    0       1      0    0    0
125946    1       0      0    0    0
125947    0       0      1    0    0
125948    1       0      0    0    0
125949    0       1      0    0    0
125950    1       0      0    0    0
125951    0       1      0    0    0
125952    0       1      0    0    0
125953    1       0      0    0    0
125954    0       1      0    0    0
125955    0       1      0    0    0
125956    0       1      0    0    0
125957    0       1      0    0    0
125958    1       0      0    0    0
125959    0       1      0    0    0
125960    0       1      0    0    0
125961    0       1      0    0    0
125962    0       1      0    0    0
125963    0       1      0    0    0
125964    1       0      0    0    0
125965    0       1      0    0    0
125966    1       0      0    0    0
125967    0       1      0    0    0
125968    1       0      0    0    0
125969    0       1      0    0    0
125970    0       1      0    0    0
125971    1       0      0    0    0
125972    0       1      0    0    0

[125973 rows x 5 columns]
```

The output of `print(y_test)`:

```
       apache2  dos  httptunnel  mailbomb  mscan  named  normal  probe
0            0    1           0         0      0      0       0      0
1            0    1           0         0      0      0       0      0
2            0    0           0         0      0      0       1      0
3            0    0           0         0      0      0       0      0
4            0    0           0         0      1      0       0      0
5            0    0           0         0      0      0       1      0
6            0    0           0         0      0      0       1      0
7            0    0           0         0      0      0       0      0
8            0    0           0         0      0      0       1      0
9            0    0           0         0      0      0       0      0
10           0    0           0         0      1      0       0      0
11           0    0           0         0      0      0       1      0
12           0    1           0         0      0      0       0      0
13           0    1           0         0      0      0       0      0
14           0    0           0         0      0      0       1      0
15           0    0           0         0      0      0       1      0
16           0    0           0         0      0      0       1      0
17           0    0           0         0      0      0       1      0
18           0    0           0         0      0      0       1      0
19           0    1           0         0      0      0       0      0
20           0    1           0         0      0      0       0      0
21           0    0           0         0      1      0       0      0
22           0    0           0         0      0      0       1      0
23           0    0           0         0      0      0       1      0
24           0    1           0         0      0      0       0      0
25           0    1           0         0      0      0       0      0
26           0    0           0         0      0      0       1      0
27           0    0           0         0      0      0       1      0
28           0    1           0         0      0      0       0      0
29           0    0           0         0      0      0       1      0
...        ...  ...         ...       ...    ...    ...     ...    ...
22514        0    0           0         0      0      0       1      0
22515        1    0           0         0      0      0       0      0
22516        0    0           0         0      0      0       1      0
22517        0    0           0         0      0      0       0      0
22518        0    0           0         0      0      0       1      0
22519        0    0           0         0      0      0       0      0
22520        0    0           0         0      0      0       0      1
22521        0    0           0         0      0      0       0      1
22522        0    1           0         0      0      0       0      0
22523        0    0           0         0      0      0       1      0
22524        0    0           0         0      0      0       0      0
22525        1    0           0         0      0      0       0      0
22526        0    0           0         0      0      0       1      0
22527        0    0           0         0      0      0       1      0
22528        0    1           0         0      0      0       0      0
22529        0    0           0         0      0      0       1      0
22530        0    1           0         0      0      0       0      0
22531        0    1           0         0      0      0       0      0
22532        0    0           0         0      0      0       1      0
22533        0    0           0         0      0      0       1      0
22534        0    1           0         0      0      0       0      0
22535        0    0           0         0      0      0       1      0
22536        0    1           0         0      0      0       0      0
22537        0    0           0         1      0      0       0      0
22538        0    1           0         0      0      0       0      0
22539        0    0           0         0      0      0       1      0
22540        0    0           0         0      0      0       1      0
22541        0    1           0         0      0      0       0      0
22542        0    0           0         0      0      0       1      0
22543        0    0           0         0      1      0       0      0

       processtable  ps  ...  sendmail  snmpgetattack  snmpguess  sqlattack  \
0                 0   0  ...         0              0          0          0
1                 0   0  ...         0              0          0          0
2                 0   0  ...         0              0          0          0
3                 0   0  ...         0              0          0          0
4                 0   0  ...         0              0          0          0
5                 0   0  ...         0              0          0          0
6                 0   0  ...         0              0          0          0
7                 0   0  ...         0              0          0          0
8                 0   0  ...         0              0          0          0
9                 0   0  ...         0              0          0          0
10                0   0  ...         0              0          0          0
11                0   0  ...         0              0          0          0
12                0   0  ...         0              0          0          0
13                0   0  ...         0              0          0          0
14                0   0  ...         0              0          0          0
15                0   0  ...         0              0          0          0
16                0   0  ...         0              0          0          0
17                0   0  ...         0              0          0          0
18                0   0  ...         0              0          0          0
19                0   0  ...         0              0          0          0
20                0   0  ...         0              0          0          0
21                0   0  ...         0              0          0          0
22                0   0  ...         0              0          0          0
23                0   0  ...         0              0          0          0
24                0   0  ...         0              0          0          0
25                0   0  ...         0              0          0          0
26                0   0  ...         0              0          0          0
27                0   0  ...         0              0          0          0
28                0   0  ...         0              0          0          0
29                0   0  ...         0              0          0          0
...             ... ...  ...       ...            ...        ...        ...
22514             0   0  ...         0              0          0          0
22515             0   0  ...         0              0          0          0
22516             0   0  ...         0              0          0          0
22517             1   0  ...         0              0          0          0
22518             0   0  ...         0              0          0          0
22519             1   0  ...         0              0          0          0
22520             0   0  ...         0              0          0          0
22521             0   0  ...         0              0          0          0
22522             0   0  ...         0              0          0          0
22523             0   0  ...         0              0          0          0
22524             0   0  ...         0              0          0          0
22525             0   0  ...         0              0          0          0
22526             0   0  ...         0              0          0          0
22527             0   0  ...         0              0          0          0
22528             0   0  ...         0              0          0          0
22529             0   0  ...         0              0          0          0
22530             0   0  ...         0              0          0          0
22531             0   0  ...         0              0          0          0
22532             0   0  ...         0              0          0          0
22533             0   0  ...         0              0          0          0
22534             0   0  ...         0              0          0          0
22535             0   0  ...         0              0          0          0
22536             0   0  ...         0              0          0          0
22537             0   0  ...         0              0          0          0
22538             0   0  ...         0              0          0          0
22539             0   0  ...         0              0          0          0
22540             0   0  ...         0              0          0          0
22541             0   0  ...         0              0          0          0
22542             0   0  ...         0              0          0          0
22543             0   0  ...         0              0          0          0

       u2r  udpstorm  worm  xlock  xsnoop  xterm
0        0         0     0      0       0      0
1        0         0     0      0       0      0
2        0         0     0      0       0      0
3        0         0     0      0       0      0
4        0         0     0      0       0      0
5        0         0     0      0       0      0
6        0         0     0      0       0      0
7        0         0     0      0       0      0
8        0         0     0      0       0      0
9        0         0     0      0       0      0
10       0         0     0      0       0      0
11       0         0     0      0       0      0
12       0         0     0      0       0      0
13       0         0     0      0       0      0
14       0         0     0      0       0      0
15       0         0     0      0       0      0
16       0         0     0      0       0      0
17       0         0     0      0       0      0
18       0         0     0      0       0      0
19       0         0     0      0       0      0
20       0         0     0      0       0      0
21       0         0     0      0       0      0
22       0         0     0      0       0      0
23       0         0     0      0       0      0
24       0         0     0      0       0      0
25       0         0     0      0       0      0
26       0         0     0      0       0      0
27       0         0     0      0       0      0
28       0         0     0      0       0      0
29       0         0     0      0       0      0
...    ...       ...   ...    ...     ...    ...
22514    0         0     0      0       0      0
22515    0         0     0      0       0      0
22516    0         0     0      0       0      0
22517    0         0     0      0       0      0
22518    0         0     0      0       0      0
22519    0         0     0      0       0      0
22520    0         0     0      0       0      0
22521    0         0     0      0       0      0
22522    0         0     0      0       0      0
22523    0         0     0      0       0      0
22524    1         0     0      0       0      0
22525    0         0     0      0       0      0
22526    0         0     0      0       0      0
22527    0         0     0      0       0      0
22528    0         0     0      0       0      0
22529    0         0     0      0       0      0
22530    0         0     0      0       0      0
22531    0         0     0      0       0      0
22532    0         0     0      0       0      0
22533    0         0     0      0       0      0
22534    0         0     0      0       0      0
22535    0         0     0      0       0      0
22536    0         0     0      0       0      0
22537    0         0     0      0       0      0
22538    0         0     0      0       0      0
22539    0         0     0      0       0      0
22540    0         0     0      0       0      0
22541    0         0     0      0       0      0
22542    0         0     0      0       0      0
22543    0         0     0      0       0      0

[22544 rows x 22 columns]
```
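If the goal is a test target with exactly the same five columns as `target`, one option is to cast the labels to a categorical dtype with a fixed category list before calling `pd.get_dummies`. This is a sketch, not a complete fix: labels outside the list become missing values and produce all-zero rows, so test-only attack names (such as `apache2` or `mscan` in the output) would still need to be assigned to a class first, for example using the ResearchGate reference linked in the code comments. `FIVE_CLASSES` and `encode_five_classes` are hypothetical names:

```python
import pandas as pd

# Assumed column order, chosen to match the one-hot training target
FIVE_CLASSES = ["dos", "normal", "probe", "r2l", "u2r"]

def encode_five_classes(labels: pd.Series) -> pd.DataFrame:
    """One-hot encode labels against a fixed set of five classes.

    Values outside FIVE_CLASSES become NaN in the categorical and end up
    as rows of all zeros instead of creating extra columns.
    """
    fixed = labels.astype(pd.CategoricalDtype(categories=FIVE_CLASSES))
    return pd.get_dummies(fixed).astype(int)

toy = pd.Series(["dos", "normal", "apache2", "u2r"])
onehot = encode_five_classes(toy)
print(list(onehot.columns))                          # ['dos', 'normal', 'probe', 'r2l', 'u2r']
print(onehot[onehot.sum(axis=1) == 0].index.tolist())  # [2]
```

Counting the all-zero rows this way also gives a quick measure of how many test records the current `attack_map` leaves unassigned.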

Best regards