Hi,

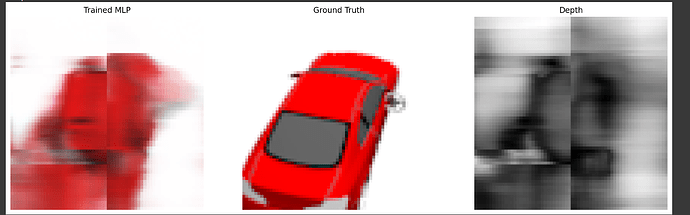

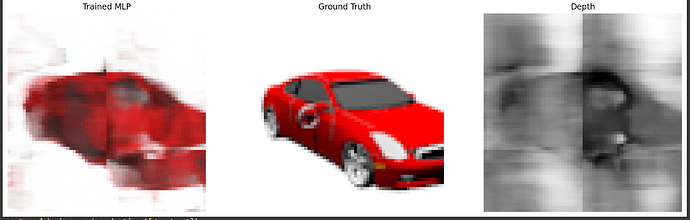

I know this question is pretty vague, but I couldn't think of a better way to ask it. I'm working on 3D reconstruction. Basically, I have latent codes, and I train two models: one that generates a latent code representing a 3D scene, and one that renders an image from that latent code.

But my output image comes out split in two (see the images above). Can you guess why, or in what situation this would happen? I wanted to post my code, but since I don't know which part is wrong and the code is very long, I thought it would be better to ask for some guesses and track down the cause myself.

EDIT

OMG. Unbelievably, I somehow fixed it. lol

`values.shape = [4, 128, 1, 1, 409600]`

`feature_size = 128`

`bs` (batch size) `= 4`

I need `values` in the shape `[bs, -1, feature_size]`.
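For reference, here is what `squeeze()` does to a tensor of that layout. This is a minimal sketch using small stand-in sizes (assumed for illustration, not the real 128 × 409600) so it runs instantly. It also shows one pitfall worth knowing: with a batch size of 1, a bare `squeeze()` drops the batch dimension too, while `flatten(start_dim=2)` keeps it.

```python
import torch

# Small stand-in sizes (assumed for illustration); the post uses
# bs = 4, feature_size = 128 and 409600 values per sample.
bs, feature_size, num_points = 4, 8, 10

values = torch.randn(bs, feature_size, 1, 1, num_points)

# squeeze() removes every dimension of size 1 -> [bs, feature_size, num_points]
squeezed = values.squeeze()
print(squeezed.shape)  # torch.Size([4, 8, 10])

# Caveat: with bs == 1, squeeze() would also drop the batch dimension.
# flatten(start_dim=2) merges the trailing 1 x 1 x num_points dims instead,
# so the batch dimension survives regardless of its size.
single = torch.randn(1, feature_size, 1, 1, num_points)
print(single.squeeze().shape)             # torch.Size([8, 10])
print(single.flatten(start_dim=2).shape)  # torch.Size([1, 8, 10])
```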

Before:

`values = values.squeeze().reshape(bs, -1, feature_size)`

After:

`values = values.squeeze().permute(0, 2, 1)`
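One reason this kind of bug is easy to miss: both lines produce a tensor of exactly the same shape, so nothing raises an error. A minimal sketch with small stand-in sizes (assumed for illustration) and `arange` values so the comparison is deterministic:

```python
import torch

bs, feature_size, num_points = 4, 8, 10  # assumed small sizes
values = torch.arange(bs * feature_size * num_points, dtype=torch.float32)
values = values.reshape(bs, feature_size, 1, 1, num_points)

before = values.squeeze().reshape(bs, -1, feature_size)
after = values.squeeze().permute(0, 2, 1)

# Same shape, so downstream code accepts either one without complaint...
print(before.shape, after.shape)  # torch.Size([4, 10, 8]) torch.Size([4, 10, 8])

# ...but the elements end up in different places.
print(torch.equal(before, after))  # False
```

Note that `permute` returns a non-contiguous view, so if later code calls `.view(...)` on it you would need `.contiguous()` first (or use `.reshape(...)`, which copies when needed).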

I think the "before" version was causing the issue. I thought those two versions did the same thing?
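They are not the same. `reshape` keeps the elements in their flat row-major order and just refills the new shape, while `permute` actually swaps which axis is which. Below is a tiny sketch (one sample, 2 channels, 3 points; sizes chosen only for illustration) showing the difference. With `reshape`, each "point" row is built from consecutive values of the *same* channel; with `permute`, row `p` holds all channels of point `p`, i.e. `out[b, p, c] == x[b, c, p]`.

```python
import torch

# One sample, 2 channels, 3 points: x[0, c, p] = 3 * c + p
x = torch.arange(6).reshape(1, 2, 3)
# tensor([[[0, 1, 2],
#          [3, 4, 5]]])

# reshape walks memory in row-major order and refills the new shape,
# so each row mixes neighboring points of one channel.
print(x.reshape(1, -1, 2).tolist())  # [[[0, 1], [2, 3], [4, 5]]]

# permute swaps the channel and point axes: row p holds every channel of point p.
print(x.permute(0, 2, 1).tolist())   # [[[0, 3], [1, 4], [2, 5]]]
```

In the full-size case this means the "before" version fed the renderer feature vectors assembled from 128 consecutive positions of a single channel rather than the 128 channels of one position.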