Hello!

I'm new to PyTorch and I'm trying to do transfer learning with a Faster R-CNN model from torchvision.

I've read several tutorials and tried for days, but I always get the same result and I can't find what's wrong. I'm sure someone more experienced will spot the error easily.

I have two classes (cat, dog): cat is class 1 and dog is class 2, so, following the docs, I create my model as follows:

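```python
from torchvision.models.detection import fasterrcnn_resnet50_fpn
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

num_classes = 3  # two classes (cat, dog) plus background

# Faster R-CNN pre-trained on COCO
model = fasterrcnn_resnet50_fpn(pretrained=True)

# Replace the box predictor so it outputs scores for num_classes classes
in_features = model.roi_heads.box_predictor.cls_score.in_features
model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
```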

I created my dataset, then a DataLoader, etc., and I also printed some random dataset and DataLoader entries as a sanity check; everything looks OK.
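For reference, torchvision's detection models take one target dict per image with `boxes` (float32, shape [N, 4], x1, y1, x2, y2) and `labels` (int64, shape [N]). A minimal sketch of building such targets, assuming background-only images (no cat or dog) are added as negatives with empty boxes, which I believe torchvision has accepted since version 0.6. `make_target` and `collate_fn` are hypothetical helper names, not my actual dataset code:

```python
import torch


def make_target(boxes, labels):
    """Build one target dict in the format torchvision detection models take.

    boxes: list of [x1, y1, x2, y2] pixel coordinates; labels: list of ints
    (1 = cat, 2 = dog). Empty lists give a background-only (negative) sample.
    """
    return {
        "boxes": torch.as_tensor(boxes, dtype=torch.float32).reshape(-1, 4),
        "labels": torch.as_tensor(labels, dtype=torch.int64),
    }


def collate_fn(batch):
    # Images in a detection batch can differ in size, so keep them as tuples
    # instead of stacking them into a single tensor
    return tuple(zip(*batch))


# Hypothetical samples: one cat image and one image with no cat or dog
cat_target = make_target([[10, 20, 200, 220]], [1])
cow_target = make_target([], [])
batch = [(torch.rand(3, 240, 320), cat_target), (torch.rand(3, 300, 300), cow_target)]
images, targets = collate_fn(batch)
print(len(images), targets[1]["boxes"].shape)  # 2 torch.Size([0, 4])
```

`collate_fn` would be passed as `DataLoader(dataset, batch_size=..., collate_fn=collate_fn)`.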

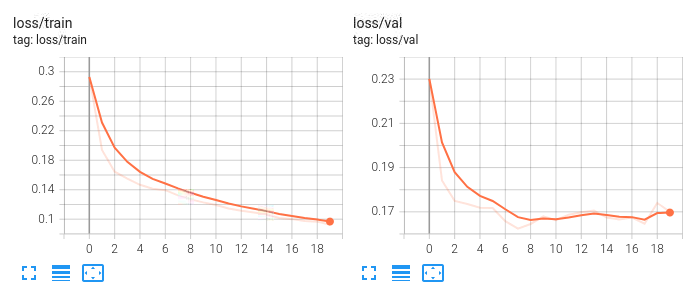

I also plotted the train and test losses in TensorBoard and trained for 20 epochs. It can surely be improved.

Then I save the model:

```python
import torch
torch.save(model.state_dict(), f"models/{MODEL_NAME}/{MODEL_NAME}_final.pth")  # weights only
```

When I run inference, every detected object is either a cat or a dog: if I use an image of a cow, for example, the model says it's a cat or a dog. It works perfectly on every image of the test set, but as soon as I use an image that contains no dog or cat, the problem appears and every object is labeled as a cat or a dog.
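In eval mode the model returns, per image, a dict with `boxes`, `labels` and `scores`, and as far as I know it keeps every detection above a low default threshold (`box_score_thresh=0.05`), so weak cat/dog boxes on a cow image may be expected rather than a bug. A minimal sketch of filtering by a higher score at inference time; `filter_detections` is a hypothetical helper, and the 0.5 threshold and example tensors are made up:

```python
import torch


def filter_detections(output, score_thresh=0.5):
    """Keep only the detections whose score is above score_thresh.

    output: one element of the list the model returns in eval mode,
    a dict with "boxes" [N, 4], "labels" [N] and "scores" [N].
    """
    keep = output["scores"] > score_thresh
    return {key: value[keep] for key, value in output.items()}


# Made-up output: one confident cat plus two weak guesses
example = {
    "boxes": torch.tensor([[10.0, 10.0, 90.0, 90.0],
                           [0.0, 0.0, 50.0, 50.0],
                           [5.0, 5.0, 60.0, 60.0]]),
    "labels": torch.tensor([1, 1, 2]),
    "scores": torch.tensor([0.81, 0.12, 0.08]),
}
filtered = filter_detections(example)
print(filtered["labels"], filtered["scores"])  # tensor([1]) tensor([0.8100])
```

With a real model this would be applied as `[filter_detections(o) for o in model(images)]` inside `torch.no_grad()`. Alternatively, the threshold inside the model can be raised, e.g. `model.roi_heads.score_thresh = 0.5`. A threshold will not help when the model is confidently wrong (a cow scored high as a dog); background-only training images are the usual remedy for that.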

It's as if every box coming from the backbone gets assigned one of the two classes, but from what I've read there should be a class 0 that collects all the background (anything that is not a cat or a dog).
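My understanding of torchvision's `RoIHeads.postprocess_detections` is that class 0 is never returned as a label: it takes a softmax over all classes, drops the background column, and keeps each foreground class whose probability exceeds the score threshold. So a proposal on a cow can still come out as a low-scoring cat or dog even when background is its most likely class. The sketch below is a simplified pure-Python model of that step (not torchvision code), with made-up logits; box regression, NMS and the per-image detection limit are omitted:

```python
import math


def softmax(logits):
    # Subtract the max for numerical stability
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def postprocess_one_proposal(logits, score_thresh=0.05):
    """Simplified view of how one proposal's class logits become detections.

    Softmax over all classes (0 = background), ignore the background column,
    then keep every foreground class whose probability exceeds score_thresh.
    """
    probs = softmax(logits)
    return [(label, p) for label, p in enumerate(probs) if label > 0 and p > score_thresh]


# Made-up logits for a proposal on a cow: background is the most likely class
cow_logits = [2.0, 1.0, 0.5]
print(postprocess_one_proposal(cow_logits))  # cat (~0.23) and dog (~0.14) both survive
print(postprocess_one_proposal(cow_logits, score_thresh=0.5))  # []
```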

I'm sure it's something dumb, but I can't find the cause.

Any help will be welcome!

Thanks a lot