The printed out the data.size it comes out to be [1,3,128,128],

I printed the training input(x_train) ,that too comes out to be [1,3,128,128]

The model def is

class double_conv(nn.Module):

'''(conv => BN => ReLU) * 2'''

def __init__(self, in_ch, out_ch):

super(double_conv, self).__init__()

self.conv = nn.Sequential(

nn.Conv2d(in_ch, out_ch, 3, padding=1),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch, out_ch, 3, padding=1),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True)

)

def forward(self, x):

x = self.conv(x)

return x

class inconv(nn.Module):

def __init__(self, in_ch, out_ch):

super(inconv, self).__init__()

self.conv = double_conv(in_ch, out_ch)

def forward(self, x):

x = self.conv(x)

return x

class down(nn.Module):

def __init__(self, in_ch, out_ch):

super(down, self).__init__()

self.mpconv = nn.Sequential(

nn.MaxPool2d(2),

double_conv(in_ch, out_ch)

)

def forward(self, x):

x = self.mpconv(x)

return x

class up(nn.Module):

def __init__(self, in_ch, out_ch, bilinear=True):

super(up, self).__init__()

if bilinear:

self.up = nn.Upsample(scale_factor=2)

else:

self.up = nn.ConvTranspose2d(in_ch, out_ch, 2, stride=2)

self.conv = double_conv(in_ch, out_ch)

def forward(self, x1, x2):

x1 = self.up(x1)

diffX = x1.size()[2] - x2.size()[2]

diffY = x1.size()[3] - x2.size()[3]

x2 = F.pad(x2, (diffX // 2, int(diffX / 2),

diffY // 2, int(diffY / 2)))

x = t.cat([x2, x1], dim=1)

x = self.conv(x)

return x

class outconv(nn.Module):

def __init__(self, in_ch, out_ch):

super(outconv, self).__init__()

self.conv = nn.Conv2d(in_ch, out_ch, 1)

def forward(self, x):

x = self.conv(x)

return x

class UNet(nn.Module):

def __init__(self, n_channels, n_classes):

super(UNet, self).__init__()

self.inc = inconv(n_channels, 64)

self.down1 = down(64, 128)

self.down2 = down(128, 256)

self.down3 = down(256, 512)

self.down4 = down(512, 512)

self.up1 = up(1024, 256)

self.up2 = up(512, 128)

self.up3 = up(256, 64)

self.up4 = up(128, 64)

self.outc = outconv(64, n_classes)

def forward(self, x):

x1 = self.inc(x)

x2 = self.down1(x1)

x3 = self.down2(x2)

x4 = self.down3(x3)

x5 = self.down4(x4)

x = self.up1(x5, x4)

x = self.up2(x, x3)

x = self.up3(x, x2)

x = self.up4(x, x1)

x = self.outc(x)

x = t.nn.functional.sigmoid(x)

#x = t.nn.functional.softmax(x)

return x

def soft_dice_loss(inputs, targets):

num = targets.size(0)

m1 = inputs.view(num,-1)

m2 = targets.view(num,-1)

intersection = (m1 * m2)

score = 2. * (intersection.sum(1)+1) / (m1.sum(1) + m2.sum(1)+1)

score = 1 - score.sum()/num

return score

model = UNet(3,1).cuda()

optimizer = t.optim.Adam(model.parameters(),lr = 1e-3)

#configure("runs/run-1", flush_secs=2)

for epoch in range(1):

for x_train, y_train in tqdm(dataloader):

x_train = t.autograd.Variable(x_train).cuda()

y_train = t.autograd.Variable(y_train).cuda()

optimizer.zero_grad()

o = model.forward(x_train)

loss = soft_dice_loss(o, y_train)

loss.backward()

optimizer.step()

log_value('TrainLoss', loss, epoch)

print(o.size())

the testing part is

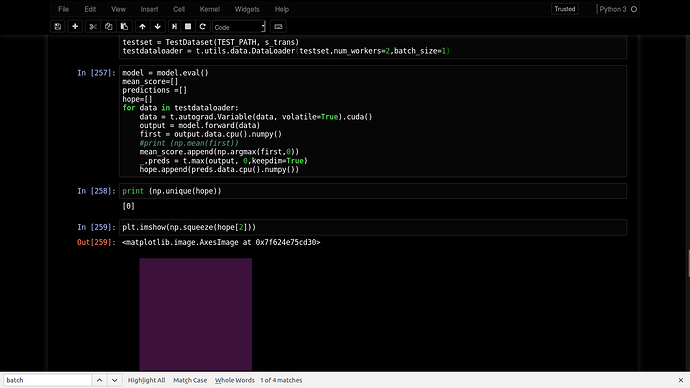

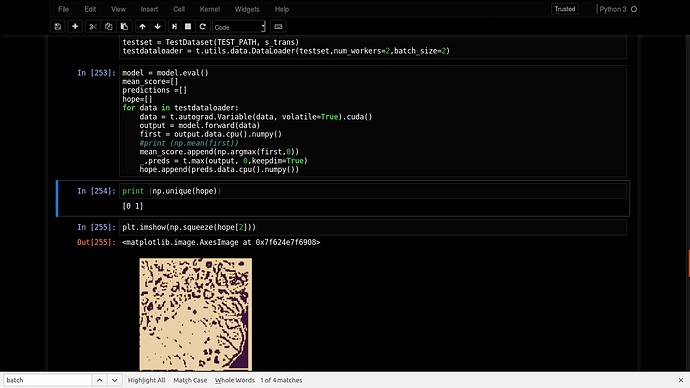

model = model.eval()

mean_score=[]

predictions =[]

hope=[]

for data in testdataloader:

data = t.autograd.Variable(data, volatile=True).cuda()

output = model(data)

first = output.data.cpu().numpy()

#print (np.mean(first))

mean_score.append(np.argmax(first,0))

_,preds = t.max(output, 0,keepdim=True)

hope.append(preds.data.cpu().numpy())

Let me know if you have any suggestions,Thanks