I am training a model on the cats vs dogs dataset. The images are 50x50x3; I view them as 3x50x50 and train the model. The loss function is MSE, the optimizer is Adam, and the activation function is ReLU. The model also has convolutional layers. It returns a 2-element vector computed by the softmax function (from torch.nn.functional). It is not learning at all: on the test set it outputs the same values every time. What might the problem be?

Hi,

If you could post code to reproduce your problem, it would be easier for us to find the problem.

Also, with images, when you want to change the order of the dimensions, you should use the permute function instead of the view function. This small example shows the difference between the two:

```python
import torch

x = torch.rand(size=(4, 4, 3), dtype=torch.float32)  # (H, W, C) layout
print(x)
# Example output (values are random):
# tensor([[[0.0136, 0.6666, 0.5414],
#          [0.8499, 0.6427, 0.8142],
#          [0.9565, 0.4752, 0.0079],
#          [0.5476, 0.2150, 0.9677]],
#
#         [[0.9483, 0.6250, 0.1009],
#          [0.8543, 0.1606, 0.0530],
#          [0.7296, 0.6276, 0.1459],
#          [0.0894, 0.5672, 0.2201]],
#
#         [[0.1019, 0.4461, 0.1278],
#          [0.6479, 0.5163, 0.2692],
#          [0.3704, 0.4845, 0.1759],
#          [0.2597, 0.6056, 0.2702]],
#
#         [[0.4689, 0.0948, 0.3193],
#          [0.0574, 0.1467, 0.2993],
#          [0.8013, 0.7025, 0.2048],
#          [0.0281, 0.9036, 0.0024]]])

y = x.view(3, 4, 4)            # reinterprets the same memory: values get mixed across "channels"
z = x.permute(dims=(2, 0, 1))  # reorders the axes: (H, W, C) -> (C, H, W)

print(y)
# tensor([[[0.0136, 0.6666, 0.5414, 0.8499],
#          [0.6427, 0.8142, 0.9565, 0.4752],
#          [0.0079, 0.5476, 0.2150, 0.9677],
#          [0.9483, 0.6250, 0.1009, 0.8543]],
#
#         [[0.1606, 0.0530, 0.7296, 0.6276],
#          [0.1459, 0.0894, 0.5672, 0.2201],
#          [0.1019, 0.4461, 0.1278, 0.6479],
#          [0.5163, 0.2692, 0.3704, 0.4845]],
#
#         [[0.1759, 0.2597, 0.6056, 0.2702],
#          [0.4689, 0.0948, 0.3193, 0.0574],
#          [0.1467, 0.2993, 0.8013, 0.7025],
#          [0.2048, 0.0281, 0.9036, 0.0024]]])

print(z)
# tensor([[[0.0136, 0.8499, 0.9565, 0.5476],
#          [0.9483, 0.8543, 0.7296, 0.0894],
#          [0.1019, 0.6479, 0.3704, 0.2597],
#          [0.4689, 0.0574, 0.8013, 0.0281]],
#
#         [[0.6666, 0.6427, 0.4752, 0.2150],
#          [0.6250, 0.1606, 0.6276, 0.5672],
#          [0.4461, 0.5163, 0.4845, 0.6056],
#          [0.0948, 0.1467, 0.7025, 0.9036]],
#
#         [[0.5414, 0.8142, 0.0079, 0.9677],
#          [0.1009, 0.0530, 0.1459, 0.2201],
#          [0.1278, 0.2692, 0.1759, 0.2702],
#          [0.3193, 0.2993, 0.2048, 0.0024]]])
```

Thanks, here is the code:

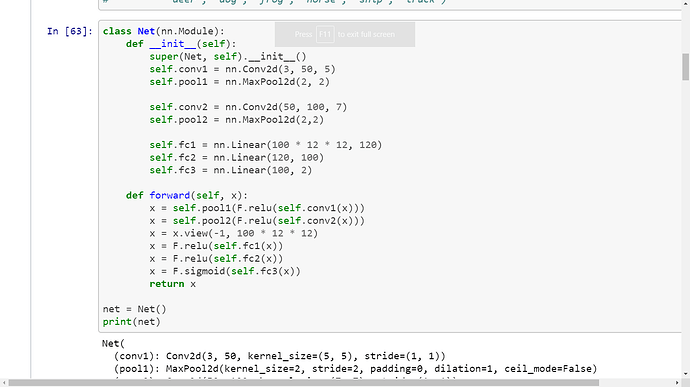

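```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Net(nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = nn.Conv2d(3, 32, 5)
        self.conv2 = nn.Conv2d(32, 64, 5)
        self.conv3 = nn.Conv2d(64, 128, 5)
        # Each 5x5 conv without padding shrinks the image by 4: 50 -> 46 -> 42 -> 38
        self.fc1 = nn.Linear(128 * 38 * 38, 100)
        self.fc2 = nn.Linear(100, 2)

    def forward(self, x):
        x = F.relu(self.conv1(x))
        x = F.relu(self.conv2(x))
        x = F.relu(self.conv3(x))
        x = x.view(-1, 128 * 38 * 38)  # flatten the feature maps for fc1
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return torch.sigmoid(x)
```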
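The `128*38*38` in `fc1` follows from the `nn.Conv2d` output-size formula (stride 1, no padding, so each 5x5 kernel removes 4 pixels per spatial dimension). A small sketch to double-check it, both with the formula and with a dummy forward pass; `conv_out_size` is a hypothetical helper, not a PyTorch function:

```python
import torch
import torch.nn as nn


def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    # Per-dimension output size, as given in the nn.Conv2d documentation
    return (size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# Three 5x5 convolutions with default stride/padding, as in Net
size = 50
for _ in range(3):
    size = conv_out_size(size, kernel_size=5)
print(size)  # 38

# Cross-check with a dummy forward pass through the same conv stack
convs = nn.Sequential(
    nn.Conv2d(3, 32, 5), nn.ReLU(),
    nn.Conv2d(32, 64, 5), nn.ReLU(),
    nn.Conv2d(64, 128, 5), nn.ReLU(),
)
with torch.no_grad():
    out = convs(torch.zeros(1, 3, 50, 50))
print(out.shape)                   # torch.Size([1, 128, 38, 38])
print(out.flatten(1).shape[1])     # 184832 == 128 * 38 * 38
```

The dummy-forward approach is handy whenever the input size or layers change, since it avoids hand-computing the flattened size.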

This is my training loop:

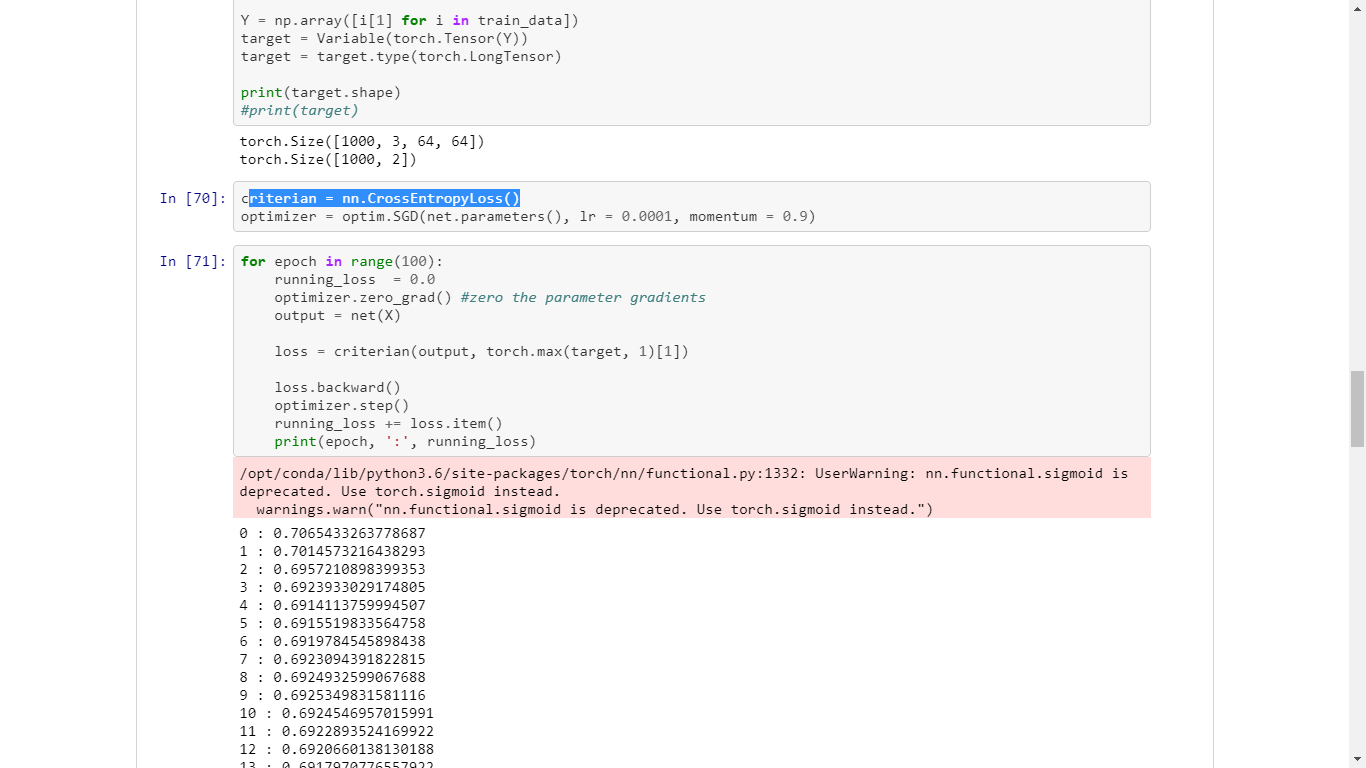

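```python
import torch
import torch.nn as nn
import torch.optim as optim
from tqdm import tqdm

# net, train_X, train_y and device are defined elsewhere
BATCH_SIZE = 1
EPOCHS = 5

optimizer = optim.Adam(net.parameters(), lr=0.001)
criterion = nn.CrossEntropyLoss()

for epoch in range(EPOCHS):
    for i in tqdm(range(0, len(train_X), BATCH_SIZE)):
        # (N, 50, 50, 3) -> (N, 3, 50, 50)
        batch_X = train_X[i:i+BATCH_SIZE].permute(dims=(0, 3, 1, 2))
        batch_y = train_y[i:i+BATCH_SIZE]
        # use the first component of each label vector (0 or 1) as the class index
        batch_y = [x[0] for x in batch_y]
        batch_y = (torch.Tensor(batch_y).type(torch.LongTensor)).to(device)

        net.zero_grad()
        outputs = net(batch_X)
        loss = criterion(outputs, batch_y)
        loss.backward()
        optimizer.step()
```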

Additional information: the X and y used above are created like this:

```python
import torch

X = torch.Tensor([i[0] for i in train_data])
X = X / 255.0  # scale pixel values to [0, 1]
y = torch.Tensor([i[1] for i in train_data])

X = X.to(device)
y = y.to(device)
```
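A possible alternative for building these tensors, sketched under the assumption (not confirmed in the thread) that `train_data` is a list of `(image, one_hot_label)` pairs with 50x50x3 `uint8` images. Stacking into one NumPy array first is typically faster than calling `torch.Tensor` on a Python list of arrays, and `argmax` yields the integer class indices `nn.CrossEntropyLoss` expects. The random `train_data` below is a hypothetical stand-in:

```python
import numpy as np
import torch

# Hypothetical stand-in for train_data: (50x50x3 uint8 image, one-hot label) pairs
rng = np.random.default_rng(0)
train_data = [
    (rng.integers(0, 256, size=(50, 50, 3)).astype(np.uint8), np.eye(2)[i % 2])
    for i in range(8)
]

# Stack into single arrays before converting to tensors
images = np.stack([img for img, _ in train_data])   # (N, 50, 50, 3)
labels = np.stack([lbl for _, lbl in train_data])   # (N, 2)

X = torch.from_numpy(images).float() / 255.0        # scale to [0, 1]
y = torch.from_numpy(labels).float()

# Integer class indices (dtype int64) for nn.CrossEntropyLoss
y_idx = y.argmax(dim=1)

print(X.shape, y_idx.dtype)  # torch.Size([8, 50, 50, 3]) torch.int64
```

Note that `argmax` maps `[1, 0]` to class 0, whereas `x[0]` in the training loop maps it to class 1; either mapping works as long as it is used consistently.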

I checked, and sigmoid is compatible with cross entropy. I also corrected the code following your suggestion to use permute: I use permute to pass the whole batch to the net, keeping the first (batch) dimension in place and moving only the last one, because the network expects 3x50x50 inputs.
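For a whole batch the same idea applies with a leading batch axis: `permute(0, 3, 1, 2)` turns `(N, H, W, C)` into `(N, C, H, W)`. A small sketch with a random dummy batch (an assumption standing in for `train_X`) that checks the channels really end up where expected, and that `view` to the same shape does not do this:

```python
import torch

torch.manual_seed(0)
# Dummy batch in (N, H, W, C) layout, like train_X
batch = torch.rand(4, 50, 50, 3)

# permute reorders the axes and keeps the batch axis first
nchw = batch.permute(0, 3, 1, 2)
print(nchw.shape)  # torch.Size([4, 3, 50, 50])

# Channel c of each image equals the last-axis slice c of the original
print(torch.equal(nchw[:, 0], batch[..., 0]))  # True

# view gives the same shape but reinterprets memory, mixing values across channels
viewed = batch.view(4, 3, 50, 50)
print(torch.equal(viewed, nchw))  # False
```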

nn.CrossEntropyLoss expects raw logits (it applies log_softmax internally), not sigmoid outputs, so you should remove this activation.
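To illustrate, here is a small sketch with made-up logit values: `nn.CrossEntropyLoss` on raw logits matches `F.nll_loss` applied to `F.log_softmax`. Putting a sigmoid in front squeezes both outputs into (0, 1), so for two classes the loss can never drop below log(1 + e^-1), even for a "perfect" prediction:

```python
import math

import torch
import torch.nn as nn
import torch.nn.functional as F

criterion = nn.CrossEntropyLoss()
logits = torch.tensor([[2.0, -1.0], [0.5, 3.0]])  # made-up raw outputs
target = torch.tensor([0, 1])

# CrossEntropyLoss == NLLLoss on log_softmax of the raw logits
manual = F.nll_loss(F.log_softmax(logits, dim=1), target)
print(torch.allclose(criterion(logits, target), manual))  # True

# Best case after sigmoid: outputs ~[1.0, 0.0], so the class gap is at most 1
best_case = torch.sigmoid(torch.tensor([[50.0, -50.0]]))
loss = criterion(best_case, torch.tensor([0]))
print(loss.item(), math.log(1 + math.exp(-1)))  # both ~0.3133
```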

OK, thanks, that was my fault. After many failures I decided to copy the entire network from this guy:

But I get the same result after removing the sigmoid. Thanks anyway.


Or maybe that code uses nn.BCELoss instead? Could you check that?
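For reference, a sigmoid output is the natural match for `nn.BCELoss`, which expects probabilities. A minimal sketch, assuming a hypothetical single-output head (one score per image, float labels) rather than the two-output `fc2` used above, showing that sigmoid + `nn.BCELoss` and raw scores + `nn.BCEWithLogitsLoss` compute the same loss:

```python
import torch
import torch.nn as nn

# Hypothetical single-output head: one raw score per image
raw = torch.tensor([[1.5], [-0.7], [0.2]])
target = torch.tensor([[1.0], [0.0], [1.0]])  # float labels, 1 = positive class

# Option 1: sigmoid in the model + nn.BCELoss (expects values in [0, 1])
loss_bce = nn.BCELoss()(torch.sigmoid(raw), target)

# Option 2: no sigmoid in the model + nn.BCEWithLogitsLoss
# (applies the sigmoid internally in a numerically more stable way)
loss_logits = nn.BCEWithLogitsLoss()(raw, target)

print(torch.allclose(loss_bce, loss_logits))  # True
```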

Thanks for the clarification.

In that case the model might still train somehow, but its outputs are not in the expected range, so you might see side effects such as vanishing gradients.
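A small sketch of the vanishing-gradient effect, using made-up, confidently wrong `fc2` outputs: with a sigmoid before `nn.CrossEntropyLoss` the sigmoid saturates and the gradient reaching the raw outputs is tiny, while passing the raw logits directly gives a large loss and a strong gradient:

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
target = torch.tensor([1])  # true class is 1

# Made-up raw fc2 outputs that are confidently wrong
raw = torch.tensor([[10.0, -10.0]], requires_grad=True)

# Case 1: sigmoid before CrossEntropyLoss, as in the original Net.forward
loss_sigmoid = criterion(torch.sigmoid(raw), target)
loss_sigmoid.backward()
grad_sigmoid = raw.grad.clone()

# Case 2: raw logits passed straight to CrossEntropyLoss
raw.grad = None
loss_logits = criterion(raw, target)
loss_logits.backward()
grad_logits = raw.grad.clone()

print(loss_sigmoid.item(), grad_sigmoid)  # loss ~1.31, gradient on the order of 1e-5
print(loss_logits.item(), grad_logits)    # loss ~20, gradient ~[[1, -1]]
```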