The PyTorch version is 1.12.

I use `for xxx in f:` to load data in my code, and N GPUs are used to train the model, so PyTorch starts N processes to load data. However, the file I/O shares memory, so `f` is iterated over and over again by these data-loading processes. All the data-loading processes get stuck in endless loops and cannot read the data correctly.

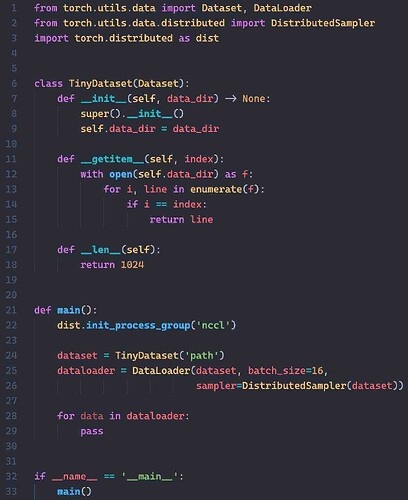
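A minimal sketch of one common way to avoid sharing a single file iterator across processes, assuming line-based data: each process opens its own file handle and keeps only the lines assigned to it. `iter_shard` is a hypothetical helper, not a PyTorch API. In a multi-GPU setup, `rank` and `world_size` would typically come from `torch.distributed.get_rank()` and `torch.distributed.get_world_size()`; the sketch uses only the standard library so it runs on its own.

```python
import os
import tempfile
from typing import Iterator


def iter_shard(path: str, rank: int, world_size: int) -> Iterator[str]:
    """Yield only the lines of `path` assigned to this process (hypothetical helper).

    Each call opens its *own* file handle, so no file object (and no file
    position) is shared between processes. Lines are assigned round-robin:
    line i goes to the process whose rank equals i % world_size.
    """
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    with open(path, "r", encoding="utf-8") as f:  # private handle per process
        for i, line in enumerate(f):
            if i % world_size == rank:
                yield line.rstrip("\n")


if __name__ == "__main__":
    # Demo with made-up data: 5 sample lines split across 2 "processes".
    with tempfile.NamedTemporaryFile("w", delete=False, suffix=".txt") as tmp:
        tmp.write("\n".join(f"sample-{i}" for i in range(5)) + "\n")
        path = tmp.name
    try:
        for rank in range(2):
            print(rank, list(iter_shard(path, rank, world_size=2)))
    finally:
        os.remove(path)
```

Because every process reads the file independently and skips lines that belong to other ranks, the shards are disjoint and together cover the whole file; the trade-off is that each process still scans every line.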

Here is a minimal version of my code: