I have been working on a classification problem using a model similar to DETR. Recently I found that my model is unstable, even though the random seed has been set. Across training runs with the same hyperparameters and the same number of epochs, the model behaves so differently that I think there must be a problem in my code. The main problems are as follows:

-

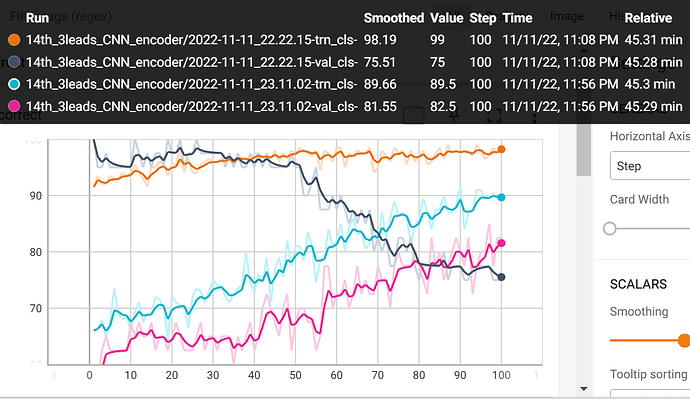

bad reproducibility (totally different trend regarding the accuracy just like the picture below, the y axis represents the times of training epochs and the x axis represents accuracy.)

Orange: training accuracy in the 1st training run (100 epochs)

Dark blue: validation accuracy in the 1st training run (100 epochs)

Light blue: training accuracy in the 2nd training run (100 epochs)

Pink: validation accuracy in the 2nd training run (100 epochs)

-

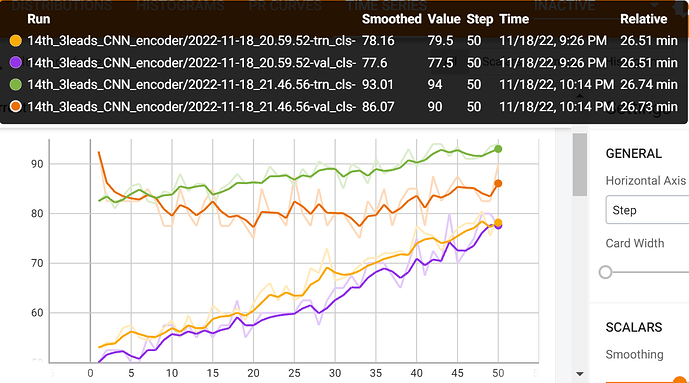

high accuracy at the second or more time of training (using the same parameters, and I always restart the kernel before starting a new round of training)
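Calling `torch.manual_seed` alone often does not make PyTorch runs repeatable. Python's `random`, NumPy, cuDNN autotuning, and `DataLoader` worker processes each carry their own randomness. Below is a minimal sketch of a fuller seeding setup for a standard PyTorch training script with a `DataLoader`. `seed_everything`, `seed_worker`, and `param_checksum` are hypothetical helper names, and `nn.Linear` stands in for the DETR-like model. The script also prints a checksum of the freshly initialised weights. If that number differs between two runs, or if the first epoch of the second run already shows high accuracy, the runs are not starting from the same state. `warn_only=True` needs PyTorch 1.11 or later. Some CUDA kernels have no deterministic implementation, so GPU runs may still differ slightly.

```python
import os
import random

import numpy as np
import torch
from torch.utils.data import DataLoader, TensorDataset


def seed_everything(seed: int = 42) -> None:
    """Seed every RNG a typical PyTorch training script draws from (sketch)."""
    random.seed(seed)
    np.random.seed(seed)
    torch.manual_seed(seed)           # seeds the CPU and all CUDA devices
    torch.cuda.manual_seed_all(seed)  # explicit; a no-op if CUDA is absent
    # Required by some cuBLAS ops once deterministic algorithms are enforced;
    # ideally set before any CUDA work starts.
    os.environ["CUBLAS_WORKSPACE_CONFIG"] = ":4096:8"
    torch.backends.cudnn.benchmark = False      # no nondeterministic autotuning
    torch.backends.cudnn.deterministic = True
    # Warn instead of raising for ops that lack a deterministic version.
    torch.use_deterministic_algorithms(True, warn_only=True)


def seed_worker(worker_id: int) -> None:
    """Re-seed NumPy and `random` inside each DataLoader worker process."""
    worker_seed = torch.initial_seed() % 2**32
    np.random.seed(worker_seed)
    random.seed(worker_seed)


def param_checksum(model: torch.nn.Module) -> float:
    """Sum of all parameters; should be identical across runs right after init."""
    with torch.no_grad():
        return float(sum(p.double().sum() for p in model.parameters()))


if __name__ == "__main__":
    seed_everything(0)
    model = torch.nn.Linear(4, 2)  # hypothetical stand-in for the real model
    print("initial weight checksum:", param_checksum(model))

    # Toy dataset; the seeded generator fixes the shuffling order.
    dataset = TensorDataset(torch.randn(16, 4), torch.randint(0, 2, (16,)))
    g = torch.Generator()
    g.manual_seed(0)
    loader = DataLoader(dataset, batch_size=4, shuffle=True, num_workers=2,
                        worker_init_fn=seed_worker, generator=g)
    xb, yb = next(iter(loader))
    print("first batch labels:", yb.tolist())
```

Run the script twice in fresh processes. Both the checksum and the first batch should match.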

Does anyone have similar experiences or know what might be causing this? I’m quite new to machine learning and deep learning, so I think I might have missed some important tips when building a model in PyTorch. Thanks!