Hello,

I’m new to this field and have run into some problems.

Description:

I have a dataset consisting of 9 classes. The folder structure is train/images and train/labels.

Each image contains several different objects (e.g. the first image contains 2 Cars and 3 Pedestrians).

Problems:

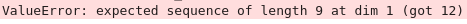

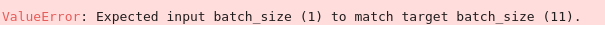

- When `batch_size` is 1, the DataLoader works fine. But when I set `batch_size` greater than 1 and call `torch.tensor(labels)`, I get this error:

I think this is because the number of labels differs from image to image, for example:

[[1.0, 1.0, 3.0, 5.0], [0.0, 2.0]]

How can I solve it?
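One common workaround for ragged label lists is to pad every list in the batch to the same length before building a tensor. The sketch below is only an illustration under assumptions: `pad_labels` is a hypothetical helper name, and `-1.0` is an arbitrary padding value chosen because it is not a valid class index. It uses plain Python lists so the idea is visible without the rest of the pipeline.

```python
def pad_labels(batch_labels, pad_value=-1.0):
    """Pad each label list to the length of the longest list in the batch.

    pad_value is an assumed sentinel (-1.0) that is not a valid class index.
    """
    max_len = max((len(labels) for labels in batch_labels), default=0)
    return [
        list(labels) + [pad_value] * (max_len - len(labels))
        for labels in batch_labels
    ]


# The ragged example from above: 4 labels for the first image, 2 for the second.
ragged = [[1.0, 1.0, 3.0, 5.0], [0.0, 2.0]]
padded = pad_labels(ragged)
print(padded)  # [[1.0, 1.0, 3.0, 5.0], [0.0, 2.0, -1.0, -1.0]]
```

Because every row now has the same length, a call such as `torch.tensor(padded)` no longer hits the ragged-list problem. A function like this could be called from a custom `collate_fn` passed to the DataLoader, and the padded entries would then need to be ignored downstream.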


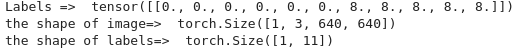

I will continue with `batch_size = 1` to describe my other problems. When I pass the targets to `cross_entropy_loss`, I get this error:


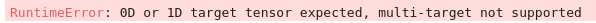

When I apply `squeeze(0)` to the targets before passing them to my loss function, I get this error instead:

Update 1:

My outputs have shape [1, 9].

I found that the output shape and the target shape must be the same. But my labels contain different class indices and have different lengths for each image!

How can I solve this problem?

Update 2:

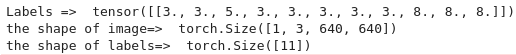

I implemented code that turns the labels into a vector like this, e.g. [0., 1., 1., 0., 1., 0., 1., …], with shape [1, 9].

But this time my loss had the same value at the end of every epoch!
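For reference, a conversion of this kind can be sketched as follows. This is not the code from the screenshot, only a minimal illustration assuming 9 classes and labels given as class indices; `to_multi_hot` and `NUM_CLASSES` are hypothetical names.

```python
NUM_CLASSES = 9  # assumed from the 9 classes in the dataset


def to_multi_hot(labels, num_classes=NUM_CLASSES):
    """Turn a list of class indices into a fixed-length 0/1 vector.

    Repeated indices (e.g. two Cars) collapse to a single 1.0, so object
    counts are lost; only the presence of each class is kept.
    """
    target = [0.0] * num_classes
    for class_index in labels:
        target[int(class_index)] = 1.0
    return target


multi_hot = to_multi_hot([1.0, 1.0, 3.0, 5.0])
print(multi_hot)  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```

Every image then yields a target of length 9 regardless of how many objects it contains. Targets of this form are commonly paired with a multi-label loss such as `torch.nn.BCEWithLogitsLoss`, which expects float targets with the same shape as the outputs.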

Is this implementation wrong?

Thank you for your help and answers.