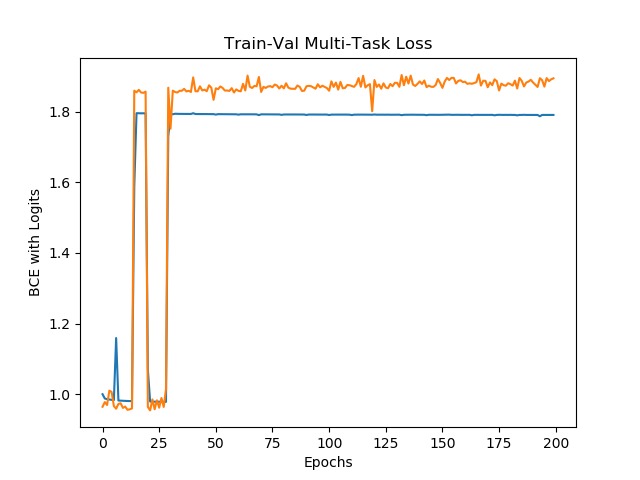

Here, I’ve declared a custom multi-task loss in PyTorch: `BCEWithLogitsLoss` for the (binary) mask segmentation loss, and `BCELoss` for the classification loss (the classification head is a stack of fully-connected layers followed by a sigmoid). I weight `BCEWithLogitsLoss` by 0.9 and `BCELoss` by 0.1, sum them, and backpropagate the summed loss. I’m using the Adam optimiser for minimisation. Please note that the y-axis label of the plot is wrong: it should read multi-task loss, not just BCEWithLogits.
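For reference, the weighted loss described above could look roughly like the sketch below. It is a minimal sketch, not the poster’s actual code: the function name `multitask_loss`, the tensor shapes, and the random stand-in outputs are all hypothetical. Note that the mask term is meant to receive raw logits, while the classification term receives sigmoid probabilities.

```python
import torch
import torch.nn as nn

# Loss weights taken from the post: 0.9 for the mask, 0.1 for classification.
MASK_WEIGHT = 0.9
CLS_WEIGHT = 0.1

mask_criterion = nn.BCEWithLogitsLoss()  # expects raw logits
cls_criterion = nn.BCELoss()             # expects probabilities in [0, 1]

def multitask_loss(mask_logits, mask_target, cls_probs, cls_target):
    """Hypothetical helper: weighted sum of segmentation and classification losses."""
    mask_loss = mask_criterion(mask_logits, mask_target)
    cls_loss = cls_criterion(cls_probs, cls_target)
    return MASK_WEIGHT * mask_loss + CLS_WEIGHT * cls_loss

torch.manual_seed(0)
# Hypothetical stand-in network outputs: one 8x8 mask and 4 binary class labels.
mask_logits = torch.randn(1, 1, 8, 8, requires_grad=True)
cls_logits = torch.randn(1, 4, requires_grad=True)
mask_target = torch.randint(0, 2, (1, 1, 8, 8)).float()
cls_target = torch.randint(0, 2, (1, 4)).float()

optimizer = torch.optim.Adam([mask_logits, cls_logits], lr=1e-2)
optimizer.zero_grad()
# The classification head ends in a sigmoid, so BCELoss gets probabilities.
loss = multitask_loss(mask_logits, mask_target, torch.sigmoid(cls_logits), cls_target)
loss.backward()   # backpropagate the summed loss
optimizer.step()
print(loss.item())
```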

However, as the graph shows, the loss is not being minimised well. Why might this be, and how can I fix it? Thanks!

Are you applying a sigmoid to both output layers?

Note that `nn.BCEWithLogitsLoss` expects raw logits, not probabilities: it applies the sigmoid internally, so passing sigmoid outputs into it applies the sigmoid twice.
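A small sketch of why this can make the loss plateau (the tensors are made up for illustration). If the mask head already ends in a sigmoid, its output lies in (0, 1), and the second sigmoid inside `BCEWithLogitsLoss` squashes that into roughly (0.5, 0.731). The effective probability can then never approach 0 or 1, so a positive pixel’s loss cannot drop below about -log(sigmoid(1)) ≈ 0.313 and a negative pixel’s below log 2 ≈ 0.693, no matter how long you train.

```python
import torch
import torch.nn as nn

criterion = nn.BCEWithLogitsLoss()

# Raw logits a network might produce, from very negative to very positive.
logits = torch.tensor([-10.0, -2.0, 0.0, 2.0, 10.0])
target = torch.ones_like(logits)

# Correct use: BCEWithLogitsLoss on raw logits matches BCELoss on sigmoid outputs.
stable = criterion(logits, target)
naive = nn.BCELoss()(torch.sigmoid(logits), target)
print(stable.item(), naive.item())

# Mistake: applying a sigmoid first means the effective probability is
# sigmoid(sigmoid(x)), which is confined to (0.5, ~0.731).
double = torch.sigmoid(torch.sigmoid(logits))
print(double)

# Even extremely confident predictions cannot push the loss below these floors.
best_pos = criterion(torch.sigmoid(torch.tensor([100.0])), torch.tensor([1.0]))
best_neg = criterion(torch.sigmoid(torch.tensor([-100.0])), torch.tensor([0.0]))
print(best_pos.item(), best_neg.item())
```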

Could you post the training code, so that we can have a look?

Ah yes, you are completely right! I’ll fix that!
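A minimal sketch of what the fix might look like, assuming a hypothetical toy model (`TwoHeadNet`) and random data rather than the poster’s real network. The mask head returns raw logits with no sigmoid. As an extra suggestion beyond the fix discussed above, the classification head also drops its sigmoid and uses `BCEWithLogitsLoss`, which the PyTorch docs describe as more numerically stable than a sigmoid followed by `BCELoss`. The 0.9/0.1 weights are from the post.

```python
import torch
import torch.nn as nn

class TwoHeadNet(nn.Module):
    """Hypothetical toy model: shared conv features, a mask head and a classification head.
    Both heads return raw logits (no sigmoid)."""
    def __init__(self, num_classes=1):
        super().__init__()
        self.backbone = nn.Sequential(nn.Conv2d(1, 8, 3, padding=1), nn.ReLU())
        self.mask_head = nn.Conv2d(8, 1, 1)  # per-pixel mask logits
        self.cls_head = nn.Sequential(
            nn.AdaptiveAvgPool2d(1), nn.Flatten(), nn.Linear(8, num_classes)
        )

    def forward(self, x):
        feats = self.backbone(x)
        return self.mask_head(feats), self.cls_head(feats)

torch.manual_seed(0)
model = TwoHeadNet()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
criterion = nn.BCEWithLogitsLoss()  # applies the sigmoid internally

# Hypothetical random batch: 4 single-channel 16x16 images.
images = torch.randn(4, 1, 16, 16)
mask_target = (images > 0).float()  # toy mask target the model can learn
cls_target = torch.randint(0, 2, (4, 1)).float()

losses = []
for step in range(200):
    optimizer.zero_grad()
    mask_logits, cls_logits = model(images)
    loss = 0.9 * criterion(mask_logits, mask_target) + 0.1 * criterion(cls_logits, cls_target)
    loss.backward()
    optimizer.step()
    losses.append(loss.item())

print(losses[0], losses[-1])
```

With raw logits going into `BCEWithLogitsLoss`, the sigmoid is applied exactly once, so the predicted probabilities are free to approach 0 and 1 and the loss is not held above an artificial floor.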