Hi, I recently started learning PyTorch and want to train a model on multiple GPUs, so I tried an example that uses DataParallel.

But after the model is assigned to the GPUs, training does not proceed.

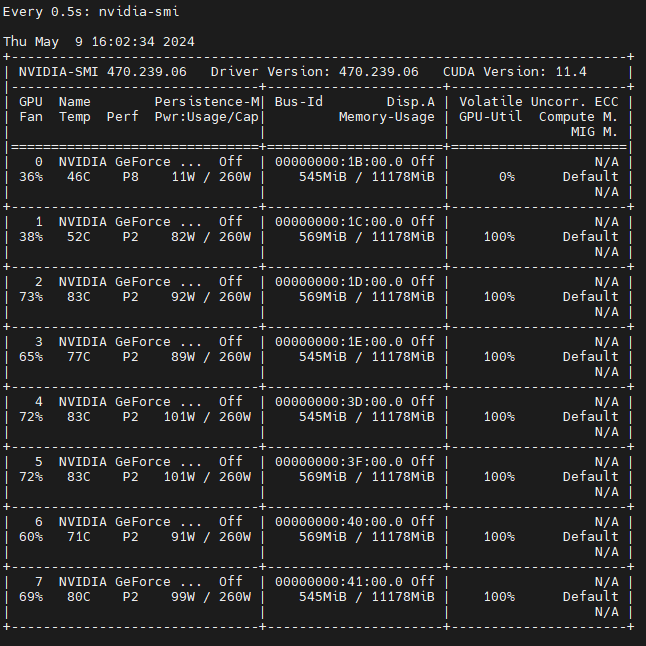

I have 8 GTX 1080Ti GPUs.

This is the example I tried.

Example

I got the "Let's use 8 GPUs!" message, but no further output.

python test.py

Let's use 8 GPUs!

This is my torch version.

pip show torch

Name: torch

Version: 2.2.2+cu118

Is there anything else I need to configure to use DataParallel?
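
For reference, a minimal sketch of the DataParallel pattern in question. This is not the exact script from the example: the `run_once` helper, the single `nn.Linear` layer, and the tensor sizes are placeholders assumed for illustration. The flushed prints after each step are only there to show whether a stall happens while moving the model or during the first forward pass.

```python
import torch
import torch.nn as nn


def run_once(batch_size=30, input_size=5, output_size=2):
    """Wrap a tiny model in DataParallel and run a single forward pass.

    All names and sizes here are hypothetical placeholders.
    """
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

    model = nn.Linear(input_size, output_size)
    if torch.cuda.device_count() > 1:
        print("Let's use", torch.cuda.device_count(), "GPUs!", flush=True)
        # DataParallel splits each input batch across the visible GPUs.
        model = nn.DataParallel(model)
    model.to(device)
    print("model moved to", device, flush=True)

    x = torch.randn(batch_size, input_size, device=device)
    out = model(x)  # outputs are gathered back onto the first device
    print("forward done, output size", tuple(out.shape), flush=True)
    return out.shape


if __name__ == "__main__":
    run_once()
```

If the last line printed is the "Let's use ... GPUs!" message, the stall is in `model.to(device)`; if "model moved to" also appears, it is in the first forward pass.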