Hi Guys,

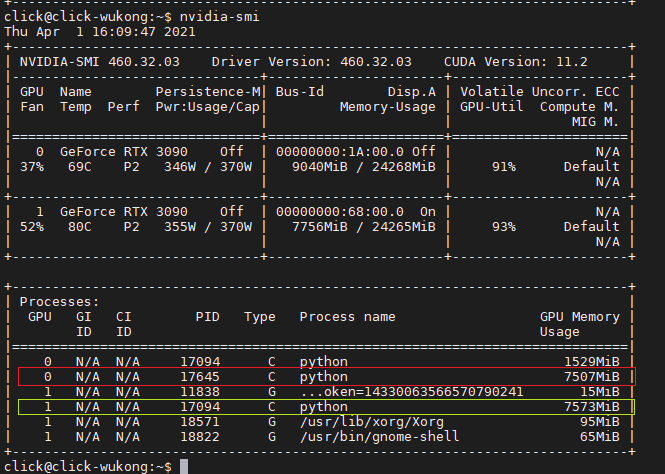

When I train my model in multi-GPU mode, the process always hangs in `backward()`. I traced it into `torch/autograd/__init__.py`, line 145, `Variable._execution_engine.run_backward`: the call blocks and never returns. This happens with both `DataParallel` and `DistributedDataParallel`, and nothing is printed to the console. I suspect something is stuck in a loop (or deadlocked) inside `torch/_C.cpython-38-x86_64-linux-gnu.so` or deeper. Does anyone know about this issue or a workaround?
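If the hang is reproducible, Python's built-in `faulthandler` module can dump the stack of every thread after a timeout, which shows where each process is blocked without attaching a debugger. This is a minimal sketch; the helper name `hang_watchdog` and the timeouts are my own choices. Note that it only prints Python frames, so a thread stuck in native code in `torch._C` shows up at the Python line that called into it (such as `run_backward`).

```python
import faulthandler
import sys
from contextlib import contextmanager


@contextmanager
def hang_watchdog(timeout_s: float, repeat: bool = True):
    """Dump every thread's Python stack to stderr if the block runs longer
    than timeout_s seconds (and again every timeout_s seconds if repeat)."""
    # sys.__stderr__ is the real process stderr; faulthandler needs a stream
    # backed by a file descriptor, which captured/wrapped streams may lack.
    faulthandler.dump_traceback_later(timeout_s, repeat=repeat, file=sys.__stderr__)
    try:
        yield
    finally:
        # Disarm the timer once the block finishes (or raises).
        faulthandler.cancel_dump_traceback_later()


if __name__ == "__main__":
    # Hypothetical usage around the step that hangs:
    #     with hang_watchdog(300):
    #         loss.backward()
    with hang_watchdog(5):
        print(sum(range(10)))
```

Running one watchdog per training process (for example, inside each `DistributedDataParallel` worker) lets you compare where each rank is waiting.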

I compared the two training modes (a single GPU vs. both GPUs): training succeeds with one GPU but hangs with two. I also captured the arguments passed to `Variable._execution_engine.run_backward` in both cases, and they are the same:

```
rt_frames.T = {NameError}name 'rt_frames' is not defined
create_graph = {bool} False
grad_tensors = {NoneType} None
grad_tensors_ = {tuple: 1} tensor(1., device='cuda:0')
grad_variables = {NoneType} None
inputs = {tuple: 0} ()
retain_graph = {bool} False
tensors = {tuple: 1} tensor(0.6899, device='cuda:0', grad_fn=)
```

I also tested the GPUs and CUDA with `p2pBandwidthLatencyTest`, and the results are OK.

Could anyone take a look at this issue?

My hardware environment:

- CPU: Intel® Core™ i9-7900X CPU @ 3.30GHz
- Memory: 8 × 8 GB DDR3
- GPU: RTX 3090 (two cards)

Software environment: Ubuntu 18.04 LTS, RTX 3090 driver 460.56, CUDA 11.1 (cuda_11.1.TC455_06.29069683_0), TensorFlow 2.4.1, PyTorch 1.8.1 (cu111).

You could check `dmesg` for Xid errors to see whether a GPU might have been dropped due to an issue such as the PSU being too weak to drive two 3090s.
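A small helper can scan the kernel log for Xid lines instead of reading it by eye. This is a sketch: the regular expression assumes the usual driver log shape `NVRM: Xid (PCI:<bus id>): <code>, ...`, and the example line in the comment is illustrative, not taken from this thread. Reading `dmesg` may require root on some systems.

```python
import re
import subprocess
from typing import List, Tuple

# Assumed shape of an NVIDIA Xid line in the kernel log, e.g.
# "NVRM: Xid (PCI:0000:65:00): 79, pid=1234, GPU has fallen off the bus."
XID_RE = re.compile(r"NVRM: Xid \(PCI:([0-9A-Fa-f:.]+)\): (\d+)")


def find_xid_errors(log_text: str) -> List[Tuple[str, int]]:
    """Return (PCI bus id, Xid code) pairs found in dmesg-style text."""
    return [(m.group(1), int(m.group(2))) for m in XID_RE.finditer(log_text)]


def read_dmesg() -> str:
    # May fail with a permission error if the kernel log is restricted.
    return subprocess.run(["dmesg"], capture_output=True, text=True, check=True).stdout


if __name__ == "__main__":
    errors = find_xid_errors(read_dmesg())
    if not errors:
        print("no Xid errors found")
    for bus_id, code in errors:
        print(f"GPU {bus_id}: Xid {code}")
```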

Hi Ptrblck,

`dmesg` shows no Xid errors. I also checked the PSU with `dmidecode`, but its fields are either unknown or unfilled:

```
dmidecode 3.1
Getting SMBIOS data from sysfs.
SMBIOS 3.2.0 present.
SMBIOS implementations newer than version 3.1.1 are not
fully supported by this version of dmidecode.

Handle 0x0048, DMI type 39, 22 bytes
System Power Supply
	Power Unit Group: 1
	Location: To Be Filled By O.E.M.
	Name: To Be Filled By O.E.M.
	Manufacturer: To Be Filled By O.E.M.
	Serial Number: To Be Filled By O.E.M.
	Asset Tag: To Be Filled By O.E.M.
	Model Part Number: To Be Filled By O.E.M.
	Revision: To Be Filled By O.E.M.
	Max Power Capacity: Unknown
	Status: Present, OK
	Type: Switching
	Input Voltage Range Switching: Auto-switch
	Plugged: Yes
	Hot Replaceable: No
	Input Voltage Probe Handle: 0x0044
	Cooling Device Handle: 0x0046
	Input Current Probe Handle: 0x0047
```

I just ran a test: I copied the AI training script into two files, one using `cuda:0` and the other using `cuda:1`, and launched them separately. Each ran successfully on its own GPU, so I don't think the PSU is the key problem.
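Instead of keeping two copies of the script that hard-code `cuda:0` and `cuda:1`, the same single-GPU test can be run by setting `CUDA_VISIBLE_DEVICES` per process, so each process sees only one card (as `cuda:0`). Launching both at once also loads both GPUs simultaneously, which stresses power and cooling more like the multi-GPU run does. This is a minimal sketch; `train.py` is a hypothetical script name that always uses `cuda:0`.

```python
import os
import subprocess
import sys
from typing import Dict, List, Mapping


def env_for_gpu(gpu_index: int, base_env: Mapping[str, str]) -> Dict[str, str]:
    """Copy base_env and expose only one physical GPU to the child process.

    Inside the child, that GPU is then addressed as cuda:0."""
    env = dict(base_env)
    env["CUDA_VISIBLE_DEVICES"] = str(gpu_index)
    return env


def launch_per_gpu(script: str, gpu_indices: List[int]) -> List[subprocess.Popen]:
    """Start one copy of `script` per GPU; all copies run concurrently."""
    return [
        subprocess.Popen([sys.executable, script], env=env_for_gpu(i, os.environ))
        for i in gpu_indices
    ]


if __name__ == "__main__":
    # "train.py" is hypothetical: a single-GPU script that uses cuda:0.
    procs = launch_per_gpu("train.py", [0, 1])
    exit_codes = [p.wait() for p in procs]
    print("exit codes:", exit_codes)
```

Copying the environment rather than mutating `os.environ` keeps the parent process's own GPU visibility unchanged.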

Finally, I found the root cause: my PC was getting too hot when running both GPUs. After I took off the chassis cover, it worked!
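Since the fix turned out to be cooling, it can help to log GPU temperatures during training to confirm whether a card overheats before a hang. This sketch parses `nvidia-smi`'s CSV query output. The 85 °C threshold is an arbitrary assumption for illustration, not a documented limit for the RTX 3090.

```python
import subprocess
from typing import List, Tuple

# Hypothetical alert threshold; choose one based on your cards and cooling.
TEMP_LIMIT_C = 85

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_temps(csv_text: str) -> List[Tuple[int, int]]:
    """Parse 'index, temperature' lines into (gpu_index, temp_C) pairs."""
    temps = []
    for line in csv_text.strip().splitlines():
        index, temp = (field.strip() for field in line.split(","))
        temps.append((int(index), int(temp)))
    return temps


def hot_gpus(temps: List[Tuple[int, int]], limit: int = TEMP_LIMIT_C) -> List[int]:
    """Return the indices of GPUs at or above the temperature limit."""
    return [index for index, temp in temps if temp >= limit]


if __name__ == "__main__":
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    temps = parse_temps(out)
    for index, temp in temps:
        print(f"GPU {index}: {temp} C")
    if hot_gpus(temps):
        print("warning: GPUs at or above limit:", hot_gpus(temps))
```

Running this periodically (or adding `-l 5` to the `nvidia-smi` command to repeat the query every 5 seconds) while both GPUs train shows whether temperatures climb before the process stalls.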