Hi all,

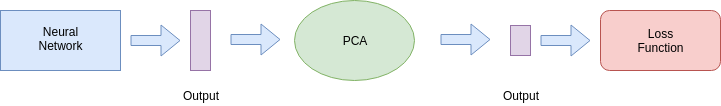

I have an architecture where I put the outputs of a neural network through a PCA (Incremental PCA from scikit-learn) and then compute the loss function:

Of course, when I compute the PCA, the PyTorch tensors have to be converted to NumPy arrays, so they lose their autograd history (the gradient information).
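A minimal sketch of the problem, assuming a toy `nn.Linear` in place of the real network. A plain NumPy SVD stands in for `IncrementalPCA` here. Any NumPy-side computation has the same effect: the tensor must be detached first, so the projected result has no `grad_fn` and `backward()` cannot reach the network.

```python
import numpy as np
import torch
import torch.nn as nn

torch.manual_seed(0)

net = nn.Linear(8, 4)          # hypothetical stand-in for the real network
x = torch.randn(32, 8)
out = net(x)                   # out is part of the autograd graph

# Converting to NumPy requires .detach(), which cuts the autograd graph.
out_np = out.detach().numpy()
centered = out_np - out_np.mean(axis=0)
_, _, vt = np.linalg.svd(centered, full_matrices=False)
projected_np = centered @ vt[:2].T   # NumPy-side projection onto 2 components

projected = torch.from_numpy(projected_np)
print(projected.requires_grad)  # False
print(projected.grad_fn)        # None
```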

My goal is to update the weights of the neural network using the gradient of this loss. Is there a straightforward way to achieve this?
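One possible approach, sketched under assumptions: treat the PCA basis as a constant and do only the projection in PyTorch. With `whiten=False`, applying a fitted PCA amounts to subtracting the mean and multiplying by the transposed components. Because that is a linear map, autograd can propagate the loss back to the network through it. In the sketch, the basis comes from `torch.linalg.svd` under `torch.no_grad()`, and `nn.Linear` and the squared-projection loss are hypothetical placeholders. If `IncrementalPCA` is kept for fitting, its `mean_` and `components_` could be converted with `torch.as_tensor` and used in the same way.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

net = nn.Linear(8, 4)          # hypothetical stand-in for the real network
x = torch.randn(32, 8)
n_components = 2

out = net(x)

# "Fit" step: estimate the PCA basis without tracking gradients, so it is
# treated as a constant (analogous to reading ipca.mean_ / ipca.components_).
with torch.no_grad():
    mean = out.mean(dim=0)
    _, _, vh = torch.linalg.svd(out - mean, full_matrices=False)
    components = vh[:n_components]          # shape (n_components, 4)

# "Transform" step in PyTorch: a linear map, so gradients flow through it.
projected = (out - mean) @ components.T     # shape (32, n_components)

loss = projected.pow(2).mean()              # placeholder loss (assumption)
loss.backward()

print(projected.grad_fn is not None)        # True
print(net.weight.grad is not None)          # True
```

Here the gradient reaches the network only through `out`, not through the basis itself. `torch.linalg.svd` is also differentiable, so the basis could be computed with gradients enabled instead. The PyTorch documentation warns, however, that its gradient can be numerically unstable when singular values are close together.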

Thanks!