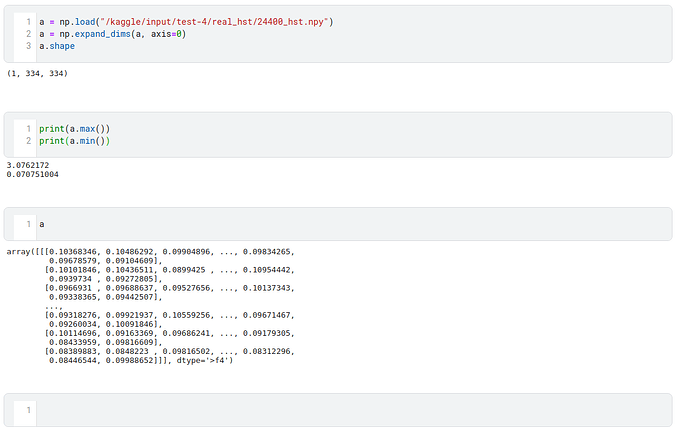

I am working on a classification task, and my images are single-channel arrays stored in .npy format. After loading them with NumPy, I found that the values range from 0 to some maximum that is nowhere near 255, and that they are stored as floats. As I understand it, PyTorch image classification models usually expect images that start out as uint8 values in [0, 255] to be rescaled to floats in [0, 1], and the rescaling can be skipped if the images are already in that range and type.
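To make the two cases concrete, here is a minimal sketch using NumPy only. The synthetic array stands in for one loaded .npy image; its shape, its maximum value, and the helper names `uint8_to_unit_float` and `min_max_scale` are assumptions for illustration, not part of any library. Per-image min-max scaling is shown as one possible option for float data, not as the required method.

```python
import numpy as np

# Hypothetical stand-in for one loaded image, e.g. np.load("image.npy").
# The shape (224, 224) and the maximum of 37.5 are assumptions.
rng = np.random.default_rng(0)
img = rng.uniform(0.0, 37.5, size=(224, 224)).astype(np.float32)


def uint8_to_unit_float(x):
    """Usual convention: uint8 values in [0, 255] -> float32 in [0, 1]."""
    return x.astype(np.float32) / 255.0


def min_max_scale(x, eps=1e-8):
    """One possible option for float data with an arbitrary maximum:
    rescale to [0, 1] using the array's own min and max."""
    x = x.astype(np.float32)
    lo, hi = x.min(), x.max()
    # eps guards against division by zero for a constant image
    return ((x - lo) / (hi - lo + eps)).astype(np.float32)


# Case 1: ordinary uint8 image data
u8 = np.array([[0, 128, 255]], dtype=np.uint8)
u8_scaled = uint8_to_unit_float(u8)

# Case 2: float data whose maximum is far from 255
scaled = min_max_scale(img)

# Add a leading channel axis: (H, W) -> (1, H, W) for a single-channel image
chw = scaled[None, ...]
```

Whether to scale each image by its own min and max or by statistics computed over the whole dataset depends on whether absolute intensity carries meaning in the data, so that choice is left open here.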

In my case, how do I properly scale the images for use with, say, a ResNet model? I have provided an image for reference.