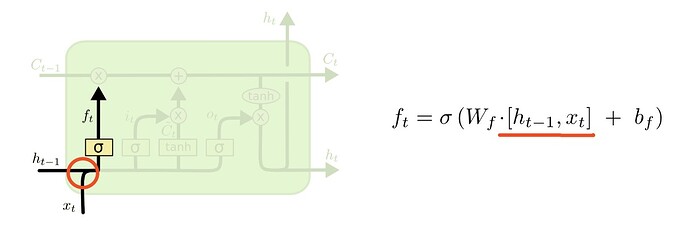

In many papers and blog posts (LSTM), the input of the forget gate is often drawn as in the picture above.

The input looks like the result of concatenating xt and ht-1. But the documentation for nn.LSTM (nn.LSTM) has an example like this:

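```python
import torch
import torch.nn as nn

rnn = nn.LSTM(10, 20, 2)       # input_size=10, hidden_size=20, num_layers=2
x1 = torch.randn(5, 3, 10)     # (seq_len, batch, input_size)
h0 = torch.randn(2, 3, 20)     # (num_layers, batch, hidden_size)
c0 = torch.randn(2, 3, 20)     # (num_layers, batch, hidden_size)
output, (hn, cn) = rnn(x1, (h0, c0))
```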

I notice that the shapes of x1 and h0 do not match, so they cannot be concatenated directly. Yet the code runs fine. I want to know why.
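To make the mismatch concrete, here is a minimal sketch of the shape check. It assumes the concatenation would be attempted with `torch.cat`; the helper `try_cat` is a hypothetical name introduced only for this illustration, and nothing here is claimed to be what `nn.LSTM` does internally.

```python
import torch

x1 = torch.randn(5, 3, 10)   # (seq_len, batch, input_size)
h0 = torch.randn(2, 3, 20)   # (num_layers, batch, hidden_size)

def try_cat(a, b, dim):
    """Hypothetical helper: return the concatenated shape, or None if torch.cat rejects it."""
    try:
        return tuple(torch.cat([a, b], dim=dim).shape)
    except RuntimeError:
        return None

# Whole tensors: the first dimension differs (5 vs 2), so this fails.
full_shape = try_cat(x1, h0, dim=2)

# One time step and one layer's hidden state: (3, 10) and (3, 20) share the batch size.
step_shape = try_cat(x1[0], h0[0], dim=1)

print(full_shape)
print(step_shape)
```

The whole tensors cannot be concatenated, but a single time step `x1[0]` and a single layer's state `h0[0]` can be joined along the feature dimension. This suggests that the concatenation in the picture may be meant per time step and per layer rather than on the full tensors.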