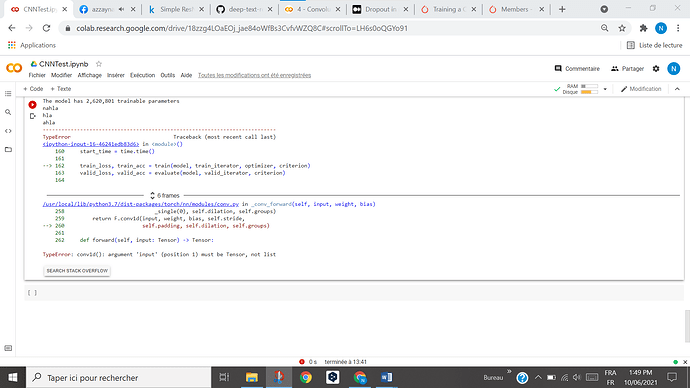

hello i am trying to do CNN with different layers conv and pool but i got this error (on the image) i have to put to the second conv layer result of of first conv pool activation that helps me to do ResNet

here is my code

import torch

from torchtext.legacy import data

from torchtext.legacy import datasets

import random

import numpy as np

SEED = 1234

random.seed(SEED)

np.random.seed(SEED)

torch.manual_seed(SEED)

torch.backends.cudnn.deterministic = True

TEXT = data.Field(tokenize = ‘spacy’,

tokenizer_language = 'en_core_web_sm',

batch_first = True)

LABEL = data.LabelField(dtype = torch.float)

train_data, test_data = datasets.IMDB.splits(TEXT, LABEL)

train_data, valid_data = train_data.split(random_state = random.seed(SEED))

MAX_VOCAB_SIZE = 25_000

TEXT.build_vocab(train_data,

max_size = MAX_VOCAB_SIZE,

vectors = "glove.6B.100d",

unk_init = torch.Tensor.normal_)

LABEL.build_vocab(train_data)

BATCH_SIZE = 64

device = torch.device(‘cuda’ if torch.cuda.is_available() else ‘cpu’)

train_iterator, valid_iterator, test_iterator = data.BucketIterator.splits(

(train_data, valid_data, test_data),

batch_size = BATCH_SIZE,

device = device)

import torch.nn as nn

import torch.nn.functional as F

class CNN1d(nn.Module):

def __init__(self, vocab_size, embedding_dim, n_filters, filter_sizes, output_dim,

dropout, pad_idx):

super().__init__()

self.embedding = nn.Embedding(vocab_size, embedding_dim, padding_idx = pad_idx)

self.convs = nn.ModuleList([

nn.Conv1d(in_channels = embedding_dim,

out_channels = n_filters,

kernel_size = fs)

for fs in filter_sizes

])

self.fc = nn.Linear(len(filter_sizes) * n_filters, output_dim)

self.dropout = nn.Dropout(dropout)

def forward(self, text):

#text = [batch size, sent len]

embedded = self.embedding(text)

#embedded = [batch size, sent len, emb dim]

embedded = embedded.permute(0, 2, 1)

print("nahla")

#embedded = [batch size, emb dim, sent len]

conved1 = [F.relu(conv(embedded)) for conv in self.convs]

print("hla")

#conved_n = [batch size, n_filters, sent len - filter_sizes[n] + 1]

pooled1 = [F.max_pool1d(conv, conv.shape[2]).squeeze(2) for conv in conved1]

print("ahla")

#pooled_n = [batch size, n_filters]

conved2 = [F.relu(conv(pooled1)) for conv in self.convs]

print("nnnnnnahla")

#conved_n = [batch size, n_filters, sent len - filter_sizes[n] + 1]

pooled2 = [F.max_pool1d(conv, conv.shape[2]).squeeze(2) for conv in conved2]

print("nnnahla")

#pooled_n = [batch size, n_filters]

cat = self.dropout(torch.cat((pooled1,pooled2)), dim = 1)

print("nnahla")

#cat = [batch size, n_filters * len(filter_sizes)]

return self.fc(cat)

INPUT_DIM = len(TEXT.vocab)

EMBEDDING_DIM = 100

N_FILTERS = 100

FILTER_SIZES = [3,4,5]

OUTPUT_DIM = 1

DROPOUT = 0.5

PAD_IDX = TEXT.vocab.stoi[TEXT.pad_token]

model = CNN1d(INPUT_DIM, EMBEDDING_DIM, N_FILTERS, FILTER_SIZES, OUTPUT_DIM, DROPOUT, PAD_IDX)

def count_parameters(model):

return sum(p.numel() for p in model.parameters() if p.requires_grad)

print(f’The model has {count_parameters(model):,} trainable parameters’)

pretrained_embeddings = TEXT.vocab.vectors

model.embedding.weight.data.copy_(pretrained_embeddings)

UNK_IDX = TEXT.vocab.stoi[TEXT.unk_token]

model.embedding.weight.data[UNK_IDX] = torch.zeros(EMBEDDING_DIM)

model.embedding.weight.data[PAD_IDX] = torch.zeros(EMBEDDING_DIM)

import torch.optim as optim

optimizer = optim.Adam(model.parameters())

criterion = nn.BCEWithLogitsLoss()

model = model.to(device)

criterion = criterion.to(device)

def binary_accuracy(preds, y):

"""

Returns accuracy per batch, i.e. if you get 8/10 right, this returns 0.8, NOT 8

"""

#round predictions to the closest integer

rounded_preds = torch.round(torch.sigmoid(preds))

correct = (rounded_preds == y).float() #convert into float for division

acc = correct.sum() / len(correct)

return acc

def train(model, iterator, optimizer, criterion):

epoch_loss = 0

epoch_acc = 0

model.train()

for batch in iterator:

optimizer.zero_grad()

predictions = model(batch.text).squeeze(1)

loss = criterion(predictions, batch.label)

acc = binary_accuracy(predictions, batch.label)

loss.backward()

optimizer.step()

epoch_loss += loss.item()

epoch_acc += acc.item()

return epoch_loss / len(iterator), epoch_acc / len(iterator)

import time

def epoch_time(start_time, end_time):

elapsed_time = end_time - start_time

elapsed_mins = int(elapsed_time / 60)

elapsed_secs = int(elapsed_time - (elapsed_mins * 60))

return elapsed_mins, elapsed_secs

def evaluate(model, iterator, criterion):

epoch_loss = 0

epoch_acc = 0

model.eval()

with torch.no_grad():

for batch in iterator:

predictions = model(batch.text).squeeze(1)

loss = criterion(predictions, batch.label)

acc = binary_accuracy(predictions, batch.label)

epoch_loss += loss.item()

epoch_acc += acc.item()

return epoch_loss / len(iterator), epoch_acc / len(iterator)

N_EPOCHS = 5

best_valid_loss = float(‘inf’)

for epoch in range(N_EPOCHS):

start_time = time.time()

train_loss, train_acc = train(model, train_iterator, optimizer, criterion)

valid_loss, valid_acc = evaluate(model, valid_iterator, criterion)

end_time = time.time()

epoch_mins, epoch_secs = epoch_time(start_time, end_time)

if valid_loss < best_valid_loss:

best_valid_loss = valid_loss

torch.save(model.state_dict(), 'tut4-model.pt')

print(f'Epoch: {epoch+1:02} | Epoch Time: {epoch_mins}m {epoch_secs}s')

print(f'\tTrain Loss: {train_loss:.3f} | Train Acc: {train_acc*100:.2f}%')

print(f'\t Val. Loss: {valid_loss:.3f} | Val. Acc: {valid_acc*100:.2f}%')