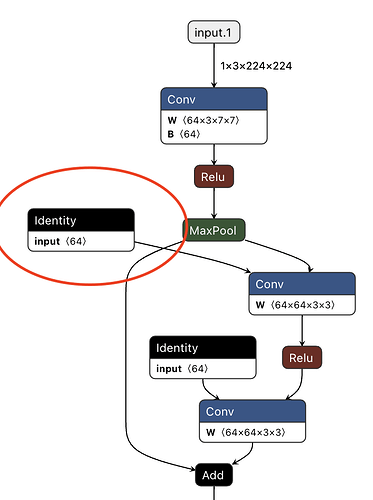

The ONNX export API in PyTorch 1.13 (`torch.onnx.export`) appears to generate ONNX graphs that are incompatible with the TensorRT conversion tools we use internally. In the exported graph, the bias tensor of each Conv node is supplied as a regular (non-initializer) input instead of being stored as an initializer. For example, exporting torchvision's resnet18 with `torch.onnx.export` produces the following graph:

PyTorch 1.13:

In PyTorch 1.11, by contrast, the bias tensors are stored as initializers of the Conv operator.

Converting the PyTorch 1.13 ONNX graph with TensorRT fails with the following error:

```text
[TRT] ModelImporter.cpp:779: ERROR: builtin_op_importers.cpp:647 In function importConv: [8] Assertion failed: inputs.at(2).is_weights() && "The bias tensor is required to be an initializer for the Conv operator."
```

Code to generate the ONNX graph:

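```python
import torch
import torch.onnx as onnx
import torchvision.models as models

# Randomly initialized ResNet-18 from torchvision (no pretrained weights)
resnet18 = models.resnet18()

# Dummy input: a batch of one 3-channel 224x224 image
x = torch.randn(1, 3, 224, 224, requires_grad=True)

onnx_file = "./resnet18.onnx"

# Export using the default torch.onnx.export settings
onnx.export(resnet18, x, onnx_file)
```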
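To confirm which Conv nodes in the exported file would hit the TensorRT assertion, here is a minimal sketch that lists Conv nodes whose bias input (input index 2) is not a graph initializer. It assumes the `onnx` Python package is installed; the helper names `conv_nodes_with_non_initializer_bias` and `check_onnx_file` are hypothetical. Because it flags anything that is not an initializer, it may over-report, for example for biases produced by `Constant` nodes, which TensorRT may still accept as weights.

```python
from typing import Iterable, List, Sequence, Set, Tuple


def conv_nodes_with_non_initializer_bias(
    nodes: Iterable[Tuple[str, str, Sequence[str]]],
    initializer_names: Set[str],
) -> List[str]:
    """Return names of Conv nodes whose bias input is not a graph initializer.

    Each entry in `nodes` is (node_name, op_type, input_names). Assumption
    (based on the TensorRT error above): the Conv importer expects the bias
    to be an initializer, so the names returned here are likely offenders.
    """
    offenders = []
    for name, op_type, inputs in nodes:
        # ONNX Conv inputs are (X, W, B); B is optional and may be absent or "".
        if op_type == "Conv" and len(inputs) > 2 and inputs[2]:
            if inputs[2] not in initializer_names:
                offenders.append(name)
    return offenders


def check_onnx_file(path: str) -> List[str]:
    """Load an exported model with the `onnx` package and run the check above."""
    import onnx  # imported lazily so the pure helper above has no dependency

    graph = onnx.load(path).graph
    nodes = [(n.name, n.op_type, list(n.input)) for n in graph.node]
    initializers = {init.name for init in graph.initializer}
    return conv_nodes_with_non_initializer_bias(nodes, initializers)
```

Usage: `print(check_onnx_file("./resnet18.onnx"))`. An empty list would mean every Conv bias is an initializer, which is the layout the PyTorch 1.11 export reportedly produces.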

Is this a known regression?