I am trying to upgrade torch 1.8.1 to use CUDA 11.1, but I found two issues compared with the CUDA 10.2 build:

-

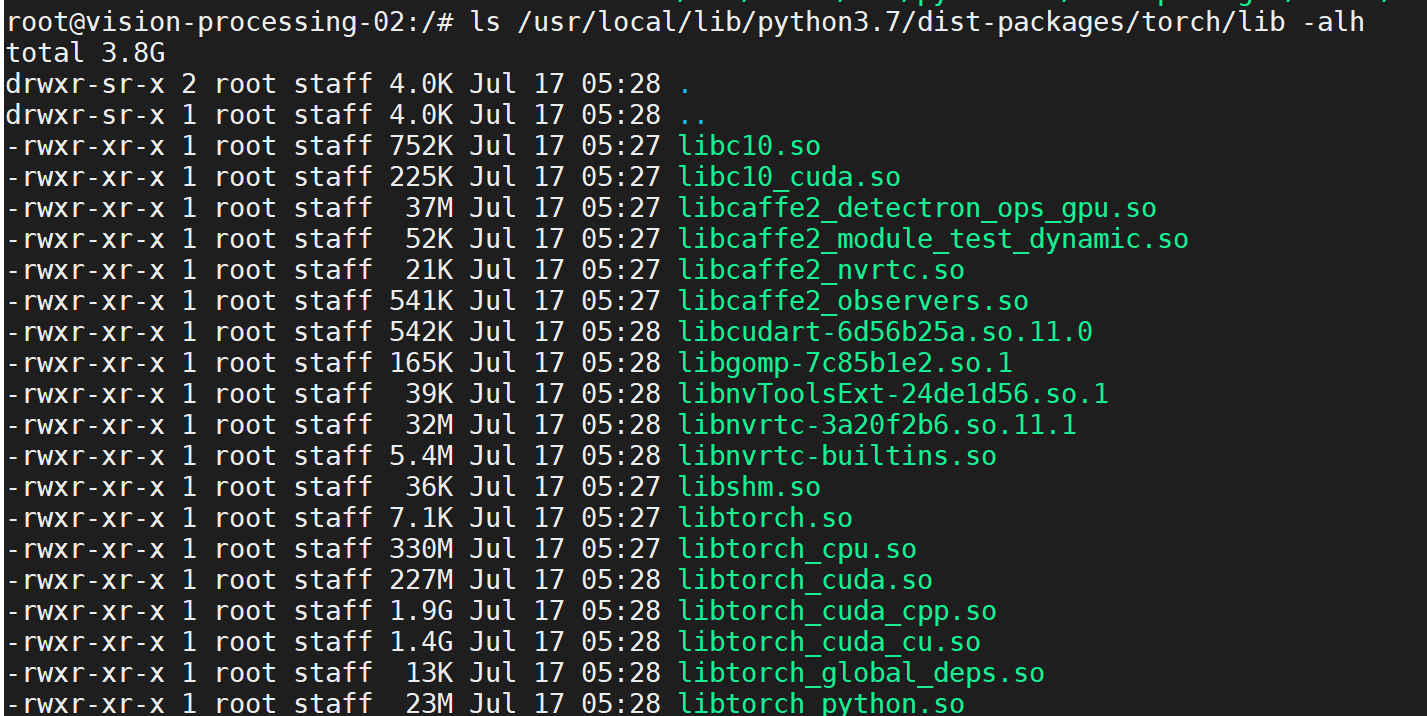

The package Size

- The cuda10.2 one is about 900M, while the cuda11.1 one is about 1900M. Is this expected?

-

Runtime memory usage when no CUDA related function is called

-

I tested by running

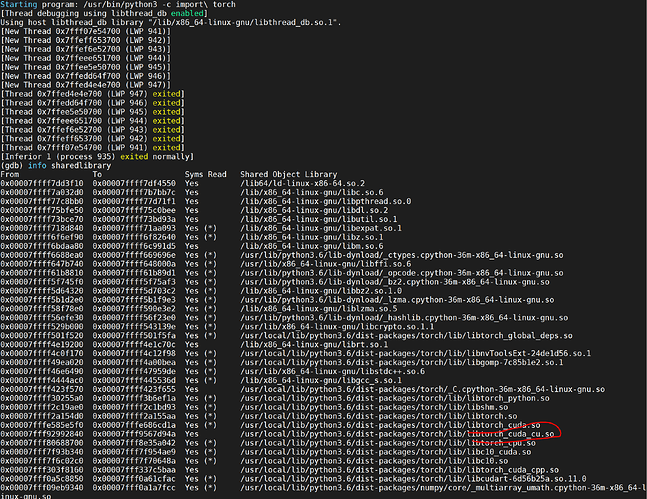

python3 -c 'import torch'for both version. The memory usage had big difference. For cuda10.2 version, it only uses about 180M, but for cuda11.1, it uses about 1.0G. Below is the loaded library for each version in gdb, looks like for cuda11.1 one, even no cuda related function is called, it will still load those cuda kernels like libtorch_cuda_cu.so. Is this an issue?For cuda11.1 one:

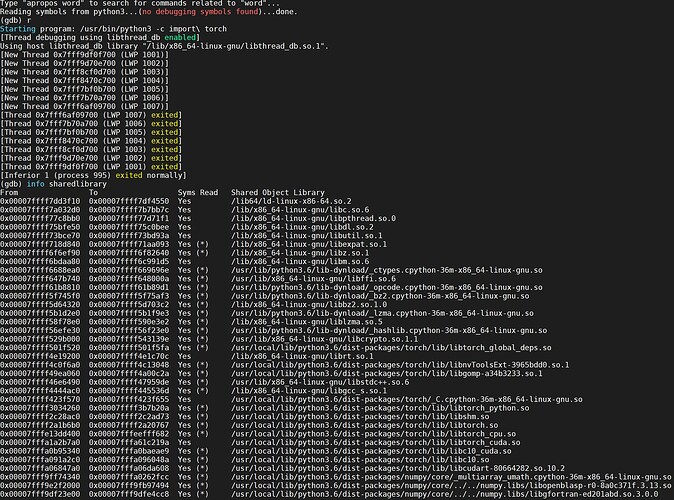

For the cuda10.2 one:
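For anyone who wants to reproduce the memory comparison without gdb, here is a minimal sketch. It assumes Linux (it reads `/proc/self/maps`, and `ru_maxrss` is reported in KiB there). The names `profile_import` and `_CHILD_SCRIPT` are made up for this example and are not part of torch.

```python
import json
import subprocess
import sys

# Runs in a fresh interpreter so that earlier imports do not skew the numbers.
_CHILD_SCRIPT = r"""
import importlib, json, resource, sys

importlib.import_module(sys.argv[1])

libs = set()
try:
    with open("/proc/self/maps") as maps:  # Linux only
        for line in maps:
            fields = line.split(None, 5)   # the sixth field is the mapped path
            if len(fields) == 6 and ".so" in fields[5]:
                libs.add(fields[5].strip())
except OSError:
    pass  # no /proc available: report an empty library list

peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss  # KiB on Linux
print(json.dumps({"peak_rss_kib": peak, "libs": sorted(libs)}))
"""


def profile_import(module_name):
    """Import `module_name` in a child process and report its peak RSS
    and the shared libraries mapped into the process afterwards."""
    result = subprocess.run(
        [sys.executable, "-c", _CHILD_SCRIPT, module_name],
        check=True,
        capture_output=True,
        text=True,
    )
    # The module may print during import, so only parse the last line.
    return json.loads(result.stdout.strip().splitlines()[-1])
```

Calling `profile_import("torch")` in each environment and comparing `peak_rss_kib` and the `libs` entries should show whether libraries such as libtorch_cuda_cu.so are mapped on a plain import.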

BTW, the cuda10.2 one was installed with `pip install torch==1.8.1`, while the cuda11.1 one was installed with `pip install torch==1.8.1+cu111 -f https://download.pytorch.org/whl/lts/1.8/torch_lts.html`.
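To see which files account for the size difference between the two installs, something like the sketch below could be run in each environment. The helper name `largest_files` is hypothetical, and it measures the installed (unpacked) size on disk, which is not the same number as the wheel download size quoted above.

```python
import os


def largest_files(root, top=10):
    """Return the `top` largest regular files under `root`
    as (size_in_bytes, path) tuples, biggest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.islink(path):
                continue  # skip symlinks so a target is not counted twice
            sizes.append((os.path.getsize(path), path))
    sizes.sort(reverse=True)
    return sizes[:top]
```

With torch installed, passing `os.path.dirname(torch.__file__)` as `root` lists the biggest files in the package, which is usually enough to spot which shared libraries grew between the two builds.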