Hi, I am getting an out-of-memory error while training ResNet18, so I tried to use PyTorch automatic mixed precision, but I get errors saying that amp.GradScalar/amp.autocast is not an attribute. Importing amp doesn't raise any error, though. I have googled the error but could not resolve it. Could someone please help?

You have a typo in GradScalar as it should be torch.cuda.amp.GradScaler.

In case you are trying to use it from the torch.amp namespace, note that it might not be available since the CPU uses bfloat16 as the lower precision dtype and thus does not use gradient scaling.
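A minimal sketch of a mixed-precision training step, assuming PyTorch 1.10 or newer (for `torch.autocast`). The small `nn.Sequential` model is a hypothetical stand-in for ResNet18, and the random tensors stand in for a real batch. On a GPU it autocasts to float16 and uses `torch.cuda.amp.GradScaler`. On the CPU it autocasts to bfloat16 and disables the scaler, since bfloat16 does not need gradient scaling.

```python
import torch
import torch.nn as nn

device = "cuda" if torch.cuda.is_available() else "cpu"
use_cuda = device == "cuda"
# CUDA autocast uses float16 (needs loss scaling); CPU autocast uses bfloat16 (does not)
amp_dtype = torch.float16 if use_cuda else torch.bfloat16

# Hypothetical stand-in for ResNet18, sized for 3x8x8 inputs
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(8 * 8 * 8, 10),
).to(device)
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
criterion = nn.CrossEntropyLoss()

# Note the spelling: GradScaler. With enabled=False it becomes a no-op pass-through.
scaler = torch.cuda.amp.GradScaler(enabled=use_cuda)

def train_step(inputs, targets):
    optimizer.zero_grad(set_to_none=True)
    # Forward pass and loss run in reduced precision where autocast deems it safe
    with torch.autocast(device_type=device, dtype=amp_dtype):
        outputs = model(inputs)
        loss = criterion(outputs, targets)
    # Backward and optimizer step happen outside the autocast region
    scaler.scale(loss).backward()
    scaler.step(optimizer)
    scaler.update()
    return loss.item()

# Random stand-in batch
inputs = torch.randn(4, 3, 8, 8, device=device)
targets = torch.randint(0, 10, (4,), device=device)
loss = train_step(inputs, targets)
print(f"loss: {loss:.4f}")
```

In recent releases the `torch.cuda.amp.GradScaler(...)` spelling may emit a deprecation warning pointing to `torch.amp.GradScaler("cuda", ...)`; on older versions only the `torch.cuda.amp` spelling exists.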

Sorry, the typo is only in my post. Now I am not even sure whether this can resolve the memory error I am getting. The error message reads "Memory cgroup out of memory". I am not familiar with cgroups, and I can't find the cgconfig.conf file. I am working on a remote server through a bash terminal in Visual Studio Code.

I would guess your cgroup is running out of host RAM and it’s unrelated to any GPU memory usage.

By host RAM, do you mean the server's RAM? Or something else? Sorry, I am very new to this kind of thing. I should also mention that reducing the batch size doesn't make any difference: the error is raised at exactly the same iteration.

Yes, I meant the CPU RAM. I don't know which setup you are using, but you would have to check whether a cgroup was created that limits the RAM usage. Also, check your RAM usage via e.g. htop and see at which point your script crashes.
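As a complement to watching htop, a hedged sketch of logging the process's own memory from inside the training loop, so the value just before the crash ends up in the script's output. It assumes a Unix-like system (the `resource` module is not available on Windows); current RSS is read from `/proc/self/statm`, which exists only on Linux. The `bytearray` allocation in the demo loop is a hypothetical stand-in for one training iteration.

```python
import os
import resource
import sys

def current_rss_bytes():
    """Current resident set size from /proc/self/statm (Linux only), else None."""
    try:
        with open("/proc/self/statm") as f:
            resident_pages = int(f.read().split()[1])
    except (OSError, IndexError, ValueError):
        return None
    return resident_pages * os.sysconf("SC_PAGE_SIZE")

def peak_rss_bytes():
    """Peak RSS of this process; ru_maxrss is in KiB on Linux but bytes on macOS."""
    peak = resource.getrusage(resource.RUSAGE_SELF).ru_maxrss
    return peak if sys.platform == "darwin" else peak * 1024

def log_memory(step):
    """Print and return (current_rss, peak_rss) in bytes for the given step."""
    mib = 1024 ** 2
    rss = current_rss_bytes()
    peak = peak_rss_bytes()
    rss_str = "n/a" if rss is None else f"{rss / mib:.0f} MiB"
    print(f"step {step}: rss={rss_str} peak={peak / mib:.0f} MiB", flush=True)
    return rss, peak

# Demo: memory should climb while this list keeps growing
buffers = []
for step in range(3):
    buffers.append(bytearray(10 * 1024 ** 2))  # stand-in for one training iteration
    log_memory(step)
```

Calling `log_memory(i)` once per iteration in the real loop shows whether host memory grows steadily and at which step it approaches the cgroup limit; `flush=True` keeps the last lines from being lost when the process is killed.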

Okay let me look at these things. Thank you.

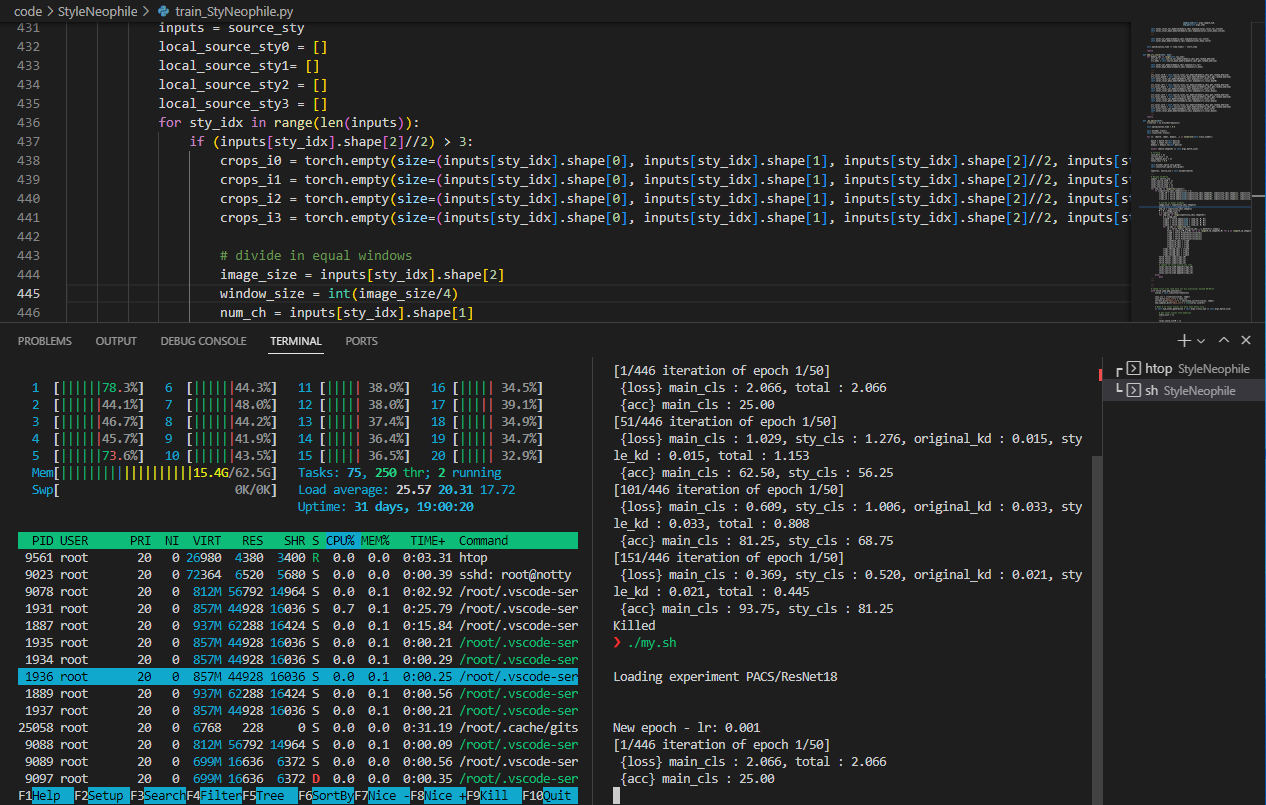

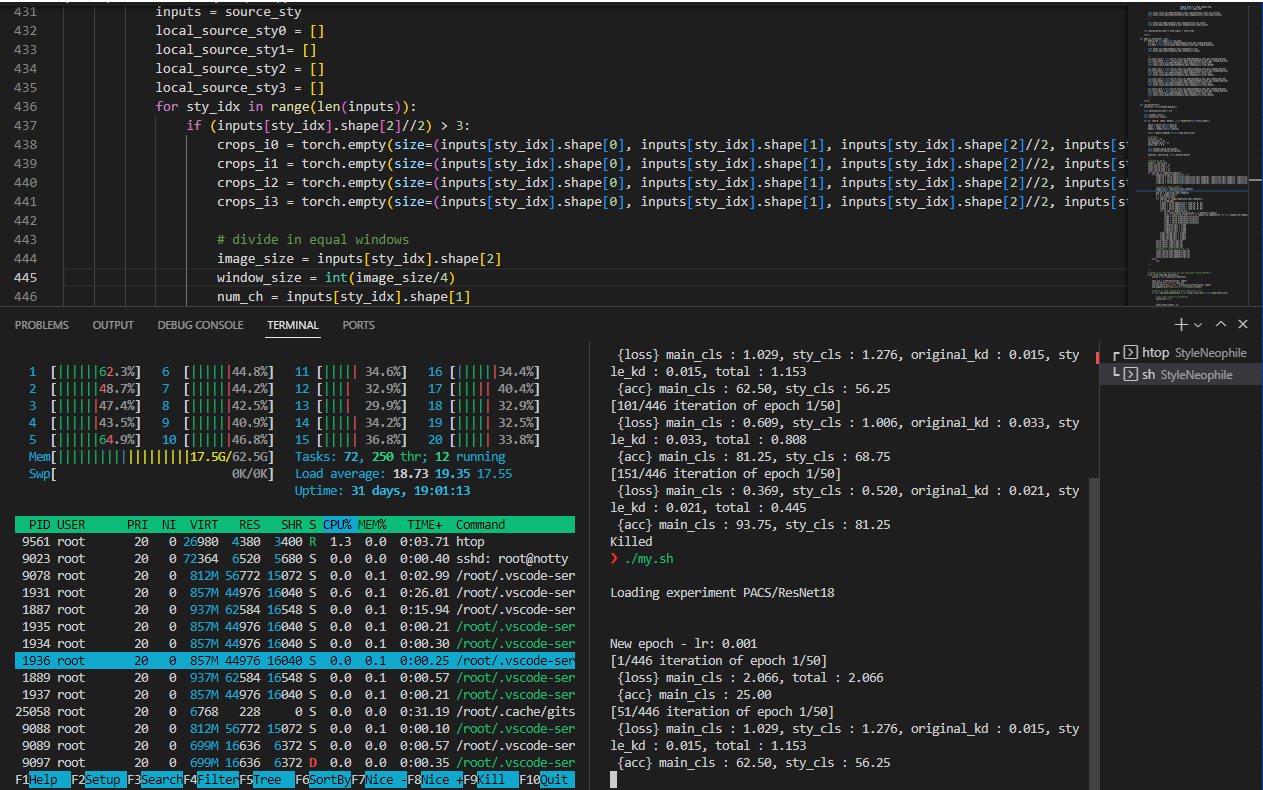

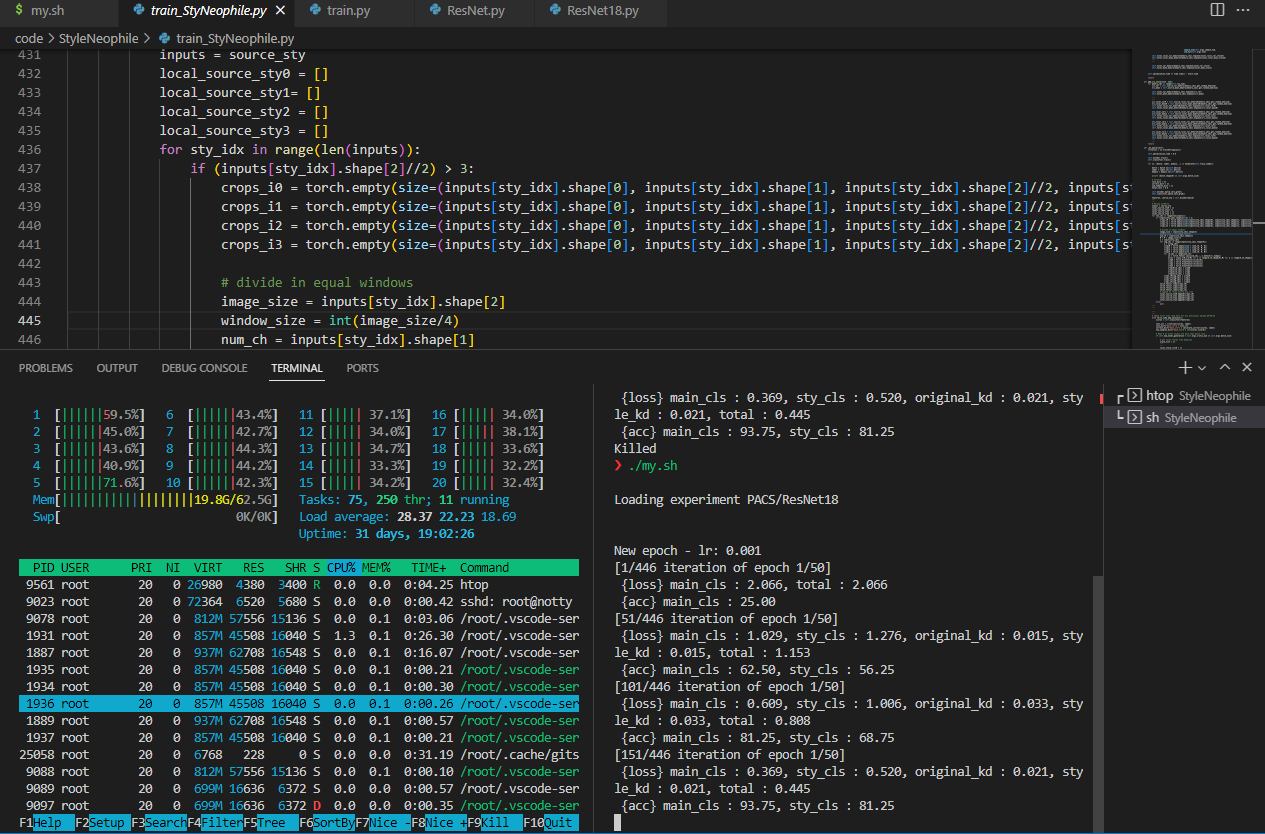

So I tried htop. I am attaching the screenshots in chronological order (this happens even with batch size 1).