I found an earlier discussion here: https://discuss.pytorch.org/t/conda-pytorch-cuda-12-for-arm64-and-grace-hopper/203285. When will official ARM+CUDA PyTorch support go live? At the moment I still can’t get it via conda install.

ARM+CUDA torch pip wheels are supported as nightly binaries (including vision and audio).
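A quick way to confirm that pip picked up one of these ARM+CUDA nightlies (and not a CPU-only wheel) is to check the machine type and the local version tag. The sketch below assumes the nightly index keeps encoding the CUDA variant as a `+cuXYZ` suffix, as in `2.6.0.dev20241022+cu124`. The helpers `cuda_tag` and `is_arm_cuda_wheel` are hypothetical names, not part of PyTorch.

```python
import platform
from typing import Optional


def cuda_tag(version: str) -> Optional[str]:
    """Return "12.4" for a version like "2.6.0.dev20241022+cu124", else None."""
    _, _, local = version.partition("+")  # local version label, e.g. "cu124" or "cpu"
    digits = local[2:]
    if local.startswith("cu") and digits.isdigit() and len(digits) >= 2:
        # Assumption: the last digit is the CUDA minor version ("124" -> "12.4").
        return f"{digits[:-1]}.{digits[-1]}"
    return None


def is_arm_cuda_wheel(version: str, machine: str) -> bool:
    """True for an aarch64/arm64 machine running a wheel tagged with a CUDA variant."""
    return machine.lower() in ("aarch64", "arm64") and cuda_tag(version) is not None


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("torch is not installed")
    else:
        machine = platform.machine()  # "aarch64" on Linux ARM64 hosts
        print(machine, torch.__version__, torch.version.cuda)
        print("ARM+CUDA wheel:", is_arm_cuda_wheel(torch.__version__, machine))
```

This only inspects the wheel's metadata; whether the wheel actually contains kernels for your GPU is a separate question.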

Hi ptrblck,

Is the nightly version unable to use CUDA on certain GPUs?

`python3 -c "import torch; print(torch.__version__); print(torch.version.cuda); print(torch.randn(1).cuda())"`

2.6.0.dev20241022+cu124

12.4

/usr/local/lib/python3.10/dist-packages/torch/cuda/__init__.py:235: UserWarning:

Tesla T4 with CUDA capability sm_75 is not compatible with the current PyTorch installation.

The current PyTorch install supports CUDA capabilities sm_50 sm_80 sm_86 sm_89 sm_90 sm_90a.

If you want to use the Tesla T4 GPU with PyTorch, please check the instructions at https://pytorch.org/get-started/locally/

warnings.warn(

Traceback (most recent call last):

File "<string>", line 1, in <module>

File "/usr/local/lib/python3.10/dist-packages/torch/_tensor.py", line 566, in __repr__

return torch._tensor_str._str(self, tensor_contents=tensor_contents)

File "/usr/local/lib/python3.10/dist-packages/torch/_tensor_str.py", line 708, in _str

return _str_intern(self, tensor_contents=tensor_contents)

File "/usr/local/lib/python3.10/dist-packages/torch/_tensor_str.py", line 625, in _str_intern

tensor_str = _tensor_str(self, indent)

File "/usr/local/lib/python3.10/dist-packages/torch/_tensor_str.py", line 357, in _tensor_str

formatter = _Formatter(get_summarized_data(self) if summarize else self)

File "/usr/local/lib/python3.10/dist-packages/torch/_tensor_str.py", line 146, in __init__

tensor_view, torch.isfinite(tensor_view) & tensor_view.ne(0)

RuntimeError: CUDA error: no kernel image is available for execution on the device

CUDA kernel errors might be asynchronously reported at some other API call, so the stacktrace below might be incorrect.

For debugging consider passing CUDA_LAUNCH_BLOCKING=1

Compile with `TORCH_USE_CUDA_DSA` to enable device-side assertions.

uname -r

4.19.0-arm64-server

Yes, the nightly ARM+CUDA binary currently supports only Grace Hopper systems.
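The `no kernel image is available` error follows from the arch list in the warning: the T4 has compute capability 7.5, and the wheel ships no `sm_7x` kernels. A minimal sketch of that compatibility check, assuming the usual CUDA rules: SASS built for `sm_XY` runs on the same major version with an equal or newer minor version, `sm_XYa` runs only on exactly X.Y, and PTX (`compute_XY`) can be JIT-compiled for X.Y or newer. `arch_runs_on` and `is_supported` are illustrative helpers, not PyTorch APIs, and PyTorch's own warning logic may differ in detail.

```python
import re

# Matches entries such as "sm_75", "sm_90a" or "compute_90".
_ARCH_RE = re.compile(r"(sm|compute)_(\d+)(\d)(a?)")


def arch_runs_on(arch: str, capability: tuple) -> bool:
    """Return True if code built for `arch` can run on a device with `capability`."""
    m = _ARCH_RE.fullmatch(arch)
    if m is None:
        return False
    kind, major, minor, suffix = m.group(1), int(m.group(2)), int(m.group(3)), m.group(4)
    if suffix == "a":
        # Architecture-specific targets (e.g. sm_90a) only run on that exact capability.
        return tuple(capability) == (major, minor)
    if kind == "sm":
        # SASS: same major version, equal or newer minor version.
        return capability[0] == major and capability[1] >= minor
    # PTX: can be JIT-compiled for any equal or newer capability.
    return tuple(capability) >= (major, minor)


def is_supported(capability: tuple, arch_list) -> bool:
    """True if at least one compiled target in `arch_list` can run on the device."""
    return any(arch_runs_on(arch, capability) for arch in arch_list)


if __name__ == "__main__":
    # Arch list reported in the warning for the 2.6.0.dev20241022+cu124 ARM wheel.
    nightly_archs = ["sm_50", "sm_80", "sm_86", "sm_89", "sm_90", "sm_90a"]
    print(is_supported((7, 5), nightly_archs))  # Tesla T4 -> False
    print(is_supported((9, 0), nightly_archs))  # Hopper (H100 / GH200) -> True
```

On a live system, pass `torch.cuda.get_device_capability()` and `torch.cuda.get_arch_list()` instead of the hard-coded values.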

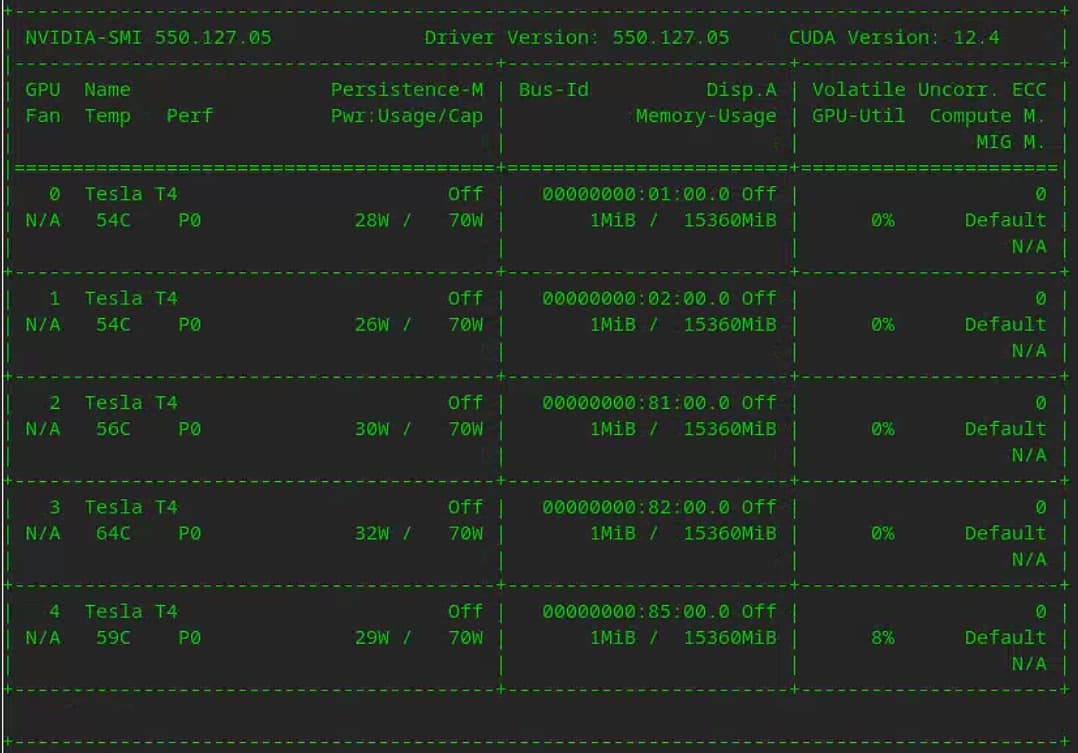

It seems you are using T4 GPUs. Is this a custom-built system, or which nodes are you using?

Sorry, I’m not very familiar with Grace Hopper systems. Is this what you mean: the NVIDIA Grace Hopper Superchip?

It seems to be a complete server architecture, which means that a separately configured ARM CPU + GPU system cannot use the current ARM+CUDA binaries, right?

That’s correct. Right now we do not support other compute capabilities, since the builder nodes were already quite slow when building for the Hopper architecture alone. However, we could add more architectures if we see demand for them.

@ptrblck, is it possible to build from source with T4 support? I tried setting `TORCH_CUDA_ARCH_LIST` to `7.5` on an ARM instance, but the resulting wheel is missing CUDA support.

Yes, that’s possible. Did you see the CUDA architectures in your build log?
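Besides reading the build log, the installed wheel can be checked directly. A sketch, assuming `TORCH_CUDA_ARCH_LIST` uses numeric entries of the form `7.5`, `9.0a` or `8.6+PTX` (named entries are not handled) and that PTX targets show up as `compute_XY` in `torch.cuda.get_arch_list()`. `expected_archs`, `missing_archs` and `check_installed_build` are hypothetical helper names.

```python
import re


def expected_archs(torch_cuda_arch_list: str) -> set:
    """Map a value like "7.5;9.0a" or "8.6+PTX" to names like "sm_75" / "compute_86"."""
    archs = set()
    for entry in re.split(r"[;\s]+", torch_cuda_arch_list.strip()):
        if not entry:
            continue
        ptx = entry.endswith("+PTX")
        m = re.fullmatch(r"(\d+)\.(\d)(a?)", entry.removesuffix("+PTX"))
        if m is None:
            raise ValueError(f"unsupported TORCH_CUDA_ARCH_LIST entry: {entry!r}")
        code = m.group(1) + m.group(2) + m.group(3)  # "7.5" -> "75", "9.0a" -> "90a"
        archs.add(f"sm_{code}")
        if ptx:
            archs.add(f"compute_{code}")  # assumption: PTX is reported as compute_XY
    return archs


def missing_archs(torch_cuda_arch_list: str, built_arch_list) -> set:
    """Requested targets that do not appear in the built wheel's arch list."""
    return expected_archs(torch_cuda_arch_list) - set(built_arch_list)


def check_installed_build(requested: str) -> None:
    """Run on the target (e.g. T4) node after installing the wheel you built."""
    import torch  # imported lazily so the helpers above work without torch

    if torch.version.cuda is None:
        print("CPU-only build: CUDA was not enabled at compile time")
        return
    # get_arch_list() returns [] when no CUDA device is visible.
    print("missing archs:", missing_archs(requested, torch.cuda.get_arch_list()) or "none")
```

Run `check_installed_build("7.5")` on the T4 node. An empty arch list on a GPU-less build machine does not by itself mean CUDA is missing; `torch.version.cuda` being `None` does.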

Thanks for responding to my question. I think a dependency was missing. I got it to build successfully for T4 on a new node.