Asking for help: I am using PyTorch to train a GAN-based model. During training I need a sparse tensor that is computed from the learnable parameters of the generator. This means that when I update the generator, I have to pass a sparse tensor that carries gradients (i.e. requires_grad=True) to the discriminator.
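To make the setup concrete, here is a minimal sketch of this kind of pipeline. It is not my actual model: `weights`, `indices`, and `dense` are hypothetical stand-ins, and it assumes the sparse tensor is built with `torch.sparse_coo_tensor` and consumed by `torch.sparse.mm`.

```python
import torch

# Hypothetical stand-in for the generator's learnable parameters.
weights = torch.nn.Parameter(torch.tensor([1.0, 2.0, 3.0]))

# Fixed sparsity pattern: entries (0, 2), (1, 0) and (1, 2) of a 2 x 3 matrix.
indices = torch.tensor([[0, 1, 1],
                        [2, 0, 2]])

# The values come from the parameters, so the sparse tensor is part of the
# autograd graph and reports requires_grad=True.
sparse = torch.sparse_coo_tensor(indices, weights, size=(2, 3))

# Hypothetical stand-in for the discriminator: a single sparse-dense product.
dense = torch.ones(3, 4)
out = torch.sparse.mm(sparse, dense)

# Backpropagate a scalar loss through the sparse tensor to the parameters.
loss = out.sum()
loss.backward()
print(weights.grad)
```

In this small sketch the gradient reaches `weights` through the sparse tensor, so a sparse tensor with gradients is not a problem in itself. My real model applies more operations to the sparse tensor than this single product.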

However, when doing backward() on the loss of the generator:

loss_g = generator_loss(pred_label, pred_real, pseudo_label, pseudo_train, is_real)

loss_g.backward()

I get the following error:

Traceback (most recent call last):

File "C:\Program Files\JetBrains\PyCharm 2021.2\plugins\python\helpers\pydev\pydevd.py", line 1483, in _exec

pydev_imports.execfile(file, globals, locals) # execute the script

File "C:\Program Files\JetBrains\PyCharm 2021.2\plugins\python\helpers\pydev\_pydev_imps\_pydev_execfile.py", line 18, in execfile

exec(compile(contents+"\n", file, 'exec'), glob, loc)

File "D:/myprojects/Mywork/experiment_logs/2022_11_5/gan_demo.py", line 185, in <module>

loss_g.backward()

File "D:\anaconda3\envs\myenv\lib\site-packages\torch\_tensor.py", line 255, in backward

torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)

File "D:\anaconda3\envs\myenv\lib\site-packages\torch\autograd\__init__.py", line 149, in backward

allow_unreachable=True, accumulate_grad=True) # allow_unreachable flag

RuntimeError: sparse tensors do not have strides

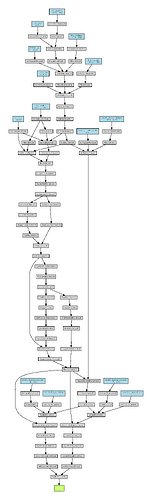

I used the torchviz tool to view the computation graph of the generator loss, and the result is as follows: