Hi,

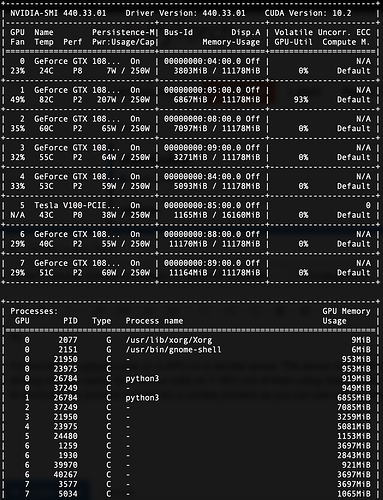

I’m running my PyTorch code on a GPU on a remote server. The server has 8 GPUs and they are shared among multiple users. So I run my code on 1 GPU out of them using DataParallel class. Whenever I try to terminate the process it turns into a zombie process as you can see in the nvidia-smi output here:

Every entry with a dash as its process name is a killed process that is still occupying the GPU. These entries can’t be removed unless the system is rebooted, which affects all users of the system.
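
For context on the single-GPU setup mentioned above: one common way to restrict a process to one GPU on a shared machine is the `CUDA_VISIBLE_DEVICES` environment variable. The sketch below is illustrative only and is not the actual training script. The helper name `restrict_to_gpu` and the GPU index `3` are hypothetical, and it assumes the variable is set before anything initializes CUDA.

```python
import os


def restrict_to_gpu(index: int) -> str:
    """Expose only one physical GPU to this process (hypothetical helper)."""
    if index < 0:
        raise ValueError("GPU index must be non-negative")
    # Order devices by PCI bus ID so the index matches nvidia-smi's numbering.
    os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
    # Assumption: this runs before CUDA is initialized in this process,
    # otherwise the setting has no effect.
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)
    return os.environ["CUDA_VISIBLE_DEVICES"]


if __name__ == "__main__":
    restrict_to_gpu(3)  # hypothetical index of the GPU assigned to me
    print(os.environ["CUDA_VISIBLE_DEVICES"])
```

With this in place, the single visible GPU shows up as device 0 inside the process, so a `DataParallel` wrapper with `device_ids=[0]` would only ever touch that one card.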

I can’t reproduce this problem on my own machine, which has only 1 GPU: there the code runs normally and terminates properly. The problem first appeared on the multi-GPU system just last week, and I’m not sure whether it was caused by a change in the PyTorch library or by something on my side.

If you could suggest a fix or help me check what the problem might be, that would be great.

Kind regards,

Ahmed