I tried to run this PyTorch code, but it doesn't print any epoch output, and it doesn't show any errors or warnings either. Can someone please help?

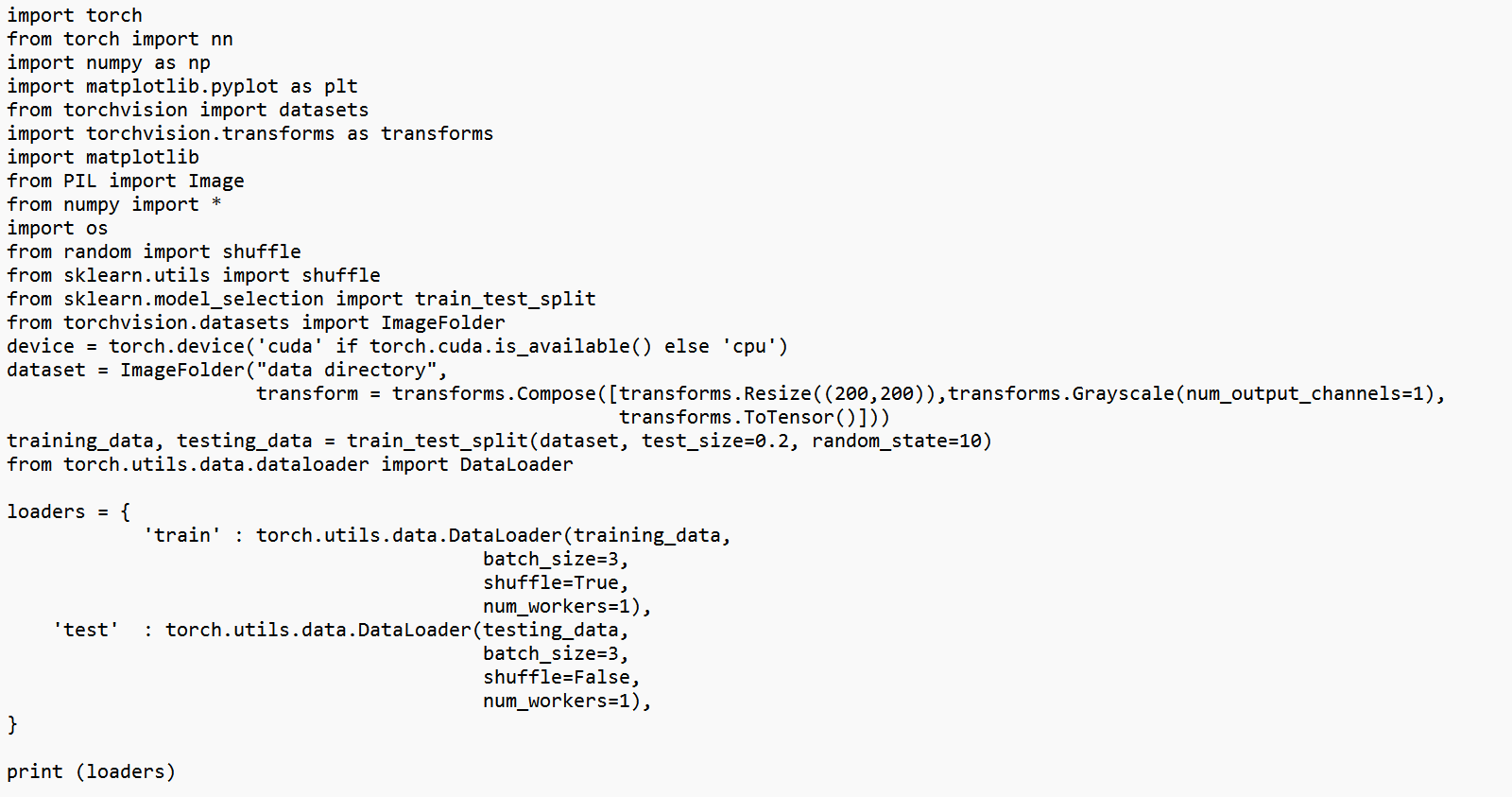

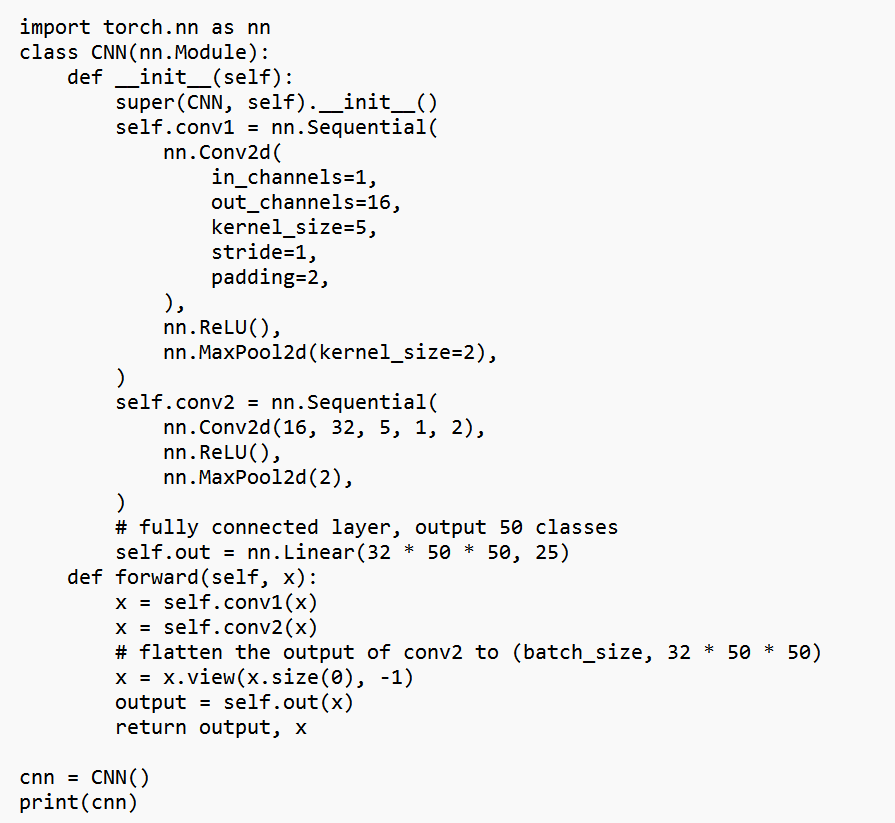

```python
import torch.nn as nn
from torch import optim
from torch.autograd import Variable  # deprecated since PyTorch 0.4; plain tensors behave the same

# `cnn` (the model) and `loaders` (a dict of DataLoaders) are assumed to be
# defined earlier in the notebook (see the screenshots above)

loss_func = nn.CrossEntropyLoss()
loss_func

optimizer = optim.Adam(cnn.parameters(), lr=0.01)
optimizer

num_epochs = 20

def train(num_epochs, cnn, loaders):
    cnn.train()

    # Train the model
    total_step = len(loaders['train'])  # number of batches per epoch

    for epoch in range(num_epochs):
        for i, (images, labels) in enumerate(loaders['train']):
            # gives batch data, normalize x when iterate train_loader
            b_x = Variable(images)  # batch x
            b_y = Variable(labels)  # batch y

            # [0] assumes forward() returns a tuple whose first element is the logits
            output = cnn(b_x)[0]
            loss = loss_func(output, b_y)

            # clear gradients for this training step
            optimizer.zero_grad()
            # backpropagation, compute gradients
            loss.backward()
            # apply gradients
            optimizer.step()

            # log only every 100th batch
            if (i + 1) % 100 == 0:
                print('Epoch [{}/{}], Step [{}/{}], Loss: {:.4f}'
                      .format(epoch + 1, num_epochs, i + 1, total_step, loss.item()))

train(num_epochs, cnn, loaders)
```
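The print statement only fires when `(i + 1) % 100 == 0`, so it can only produce output if an epoch has at least 100 batches. A minimal sketch for checking this, assuming a DataLoader with a fixed `batch_size`. The helpers `batches_per_epoch` and `logged_steps` are hypothetical names used for illustration, not PyTorch APIs; in the real code, `len(loaders['train'])` gives the batch count directly.

```python
def batches_per_epoch(num_samples, batch_size, drop_last=False):
    """Hypothetical helper: how many batches a DataLoader yields per epoch."""
    if drop_last:
        return num_samples // batch_size
    return -(-num_samples // batch_size)  # ceiling division keeps the last partial batch


def logged_steps(total_step, log_every=100):
    """Hypothetical helper: 1-based steps at which `if (i + 1) % log_every == 0` is true."""
    return [i + 1 for i in range(total_step) if (i + 1) % log_every == 0]


if __name__ == "__main__":
    # Hypothetical sizes, for illustration only
    for n, bs in [(6000, 100), (60000, 100)]:
        steps = batches_per_epoch(n, bs)
        print(n, bs, steps, logged_steps(steps))
```

For example, a hypothetical 6,000-sample dataset with `batch_size=100` gives 60 batches per epoch, so `logged_steps(60)` is empty and the loop trains silently without ever printing.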