I’m trying to set up the environment for the fast.ai course on my local Mac, which has an NVIDIA GeForce GT 750M with 384 CUDA cores that I would like to take advantage of… I already have everything set up: the driver, the CUDA Toolkit, and the cuDNN libraries. Everything is detailed at this link:

The last comment is mine… I detail every step I went through…

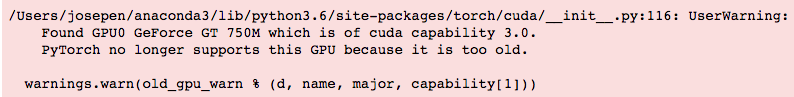

Compilation finishes with no errors and everything seems resolved… however, when I execute a line of the fast.ai library I see the following error:

What does it mean? Am I out of luck?

The video card has 384 CUDA cores, and I only want it for testing and for the course. If I need something heavy I will probably look into another setup for the heavy computations, but for this course I’d like my laptop to run the Jupyter notebooks. I cannot believe that, in spite of meeting all of NVIDIA’s requirements (CUDA compute capability 3.0, etc.), I’m stuck with PyTorch being unable to use my GPU. Can anyone give me some insight into what the problem is? On the NVIDIA side I’ve met all the requirements: the CUDA Toolkit, the drivers, and cuDNN are installed, and PyTorch compiled, although the build did print this one warning:

/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu:222:134: warning: self-comparison always evaluates to true [-Wtautological-compare]

switch (memType) { case CUDAHistogramMemoryType::SHARED: (__cudaPushCallConfiguration(grid, block, (CUDAHistogramMemoryType::SHARED == CUDAHistogramMemoryType::SHARED) ? sharedMem : (0), at::globalContext().getCurrentCUDAStream())) ? (void)0 : kernelHistogram1D< output_t, input_t, int64_t, 1, 2, 1, CUDAHistogramMemoryType::SHARED> (aInfo, pInfo, bInfo, binsize, totalElements, getWeightsOp); if (!((cudaGetLastError()) == (cudaSuccess))) { throw Error({__func__, "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", 222}, at::str(at::str("cudaGetLastError() == cudaSuccess", " ASSERT FAILED at ", "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", ":", 222, ", please report a bug to PyTorch. ", "kernelHistogram1D failed"))); } ; ; break; case CUDAHistogramMemoryType::MULTI_BLOCK: (__cudaPushCallConfiguration(grid, block, (CUDAHistogramMemoryType::MULTI_BLOCK == CUDAHistogramMemoryType::SHARED) ? sharedMem : (0), at::globalContext().getCurrentCUDAStream())) ? (void)0 : kernelHistogram1D< output_t, input_t, int64_t, 1, 2, 1, CUDAHistogramMemoryType::MULTI_BLOCK> (aInfo, pInfo, bInfo, binsize, totalElements, getWeightsOp); if (!((cudaGetLastError()) == (cudaSuccess))) { throw Error({__func__, "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", 222}, at::str(at::str("cudaGetLastError() == cudaSuccess", " ASSERT FAILED at ", "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", ":", 222, ", please report a bug to PyTorch. ", "kernelHistogram1D failed"))); } ; ; break; default: (__cudaPushCallConfiguration(grid, block, (CUDAHistogramMemoryType::GLOBAL == CUDAHistogramMemoryType::SHARED) ? sharedMem : (0), at::globalContext().getCurrentCUDAStream())) ? 
(void)0 : kernelHistogram1D< output_t, input_t, int64_t, 1, 2, 1, CUDAHistogramMemoryType::GLOBAL> (aInfo, pInfo, bInfo, binsize, totalElements, getWeightsOp); if (!((cudaGetLastError()) == (cudaSuccess))) { throw Error({__func__, "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", 222}, at::str(at::str("cudaGetLastError() == cudaSuccess", " ASSERT FAILED at ", "/Users/josepen/Development/pytorch/aten/src/ATen/native/cuda/SummaryOps.cu", ":", 222, ", please report a bug to PyTorch. ", "kernelHistogram1D failed"))); } ; ; }

I’m not sure what to make of it; it says: please report a bug to PyTorch.
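In case it helps narrow this down, here is a minimal sketch of a check that shows what PyTorch itself reports about the GPU, separately from fast.ai. The function name `describe_cuda` is my own (hypothetical); it only relies on `torch.version.cuda`, `torch.cuda.is_available()`, `torch.cuda.device_count()`, `torch.cuda.get_device_name()` and `torch.cuda.get_device_capability()`, and it assumes the PyTorch build being tested is the one importable from the current Python environment.

```python
def describe_cuda():
    """Collect what PyTorch reports about CUDA.

    Returns a dict so the result can be pasted into a forum post.
    Works even when torch cannot be imported (reported as not installed).
    """
    info = {"torch_installed": False, "cuda_available": False, "devices": []}
    try:
        import torch
    except (ImportError, OSError):
        # Missing package, or a build whose shared libraries fail to load.
        return info

    info["torch_installed"] = True
    info["torch_version"] = torch.__version__
    # CUDA version the build was compiled against; None for CPU-only builds.
    info["built_with_cuda"] = torch.version.cuda
    info["cuda_available"] = bool(torch.cuda.is_available())

    if info["cuda_available"]:
        for index in range(torch.cuda.device_count()):
            major, minor = torch.cuda.get_device_capability(index)
            info["devices"].append({
                "index": index,
                "name": torch.cuda.get_device_name(index),
                "capability": "{}.{}".format(major, minor),
            })
    return info


if __name__ == "__main__":
    for key, value in describe_cuda().items():
        print("{}: {}".format(key, value))
```

If `built_with_cuda` comes back as `None`, the build is CPU-only; if the device is listed, its reported `capability` is the number to compare against whatever compute capability the PyTorch build was compiled for.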