Hi,

I am using a 2 node distributed setup(each having a single GPU respectively) to train a Neural Network (NN). I utilize PyTorch Data Distributed Parallel with Join Context Manager to achieve this. I am measuring power consumption varying data distribution on those two nodes. I am noticing more power consumption in the node which has a lesser part of the data, e.g.,for a scenario where node 1 training on 20% of the dataset and node2 is training on the rest 80% of data, I am getting higher power consumption in node1 once it finishes training its part. I know how Join Context manager works and it is intuitive why node1 is consuming more power. But there is nothing mentioned in documentation about power consumption. Is this

PyTorch implementation-specific or this is how any synchronous training work (in any framework - PyTorch, Tensorflow etc.) ?

Hi @ptrblck , can you please look into this issue?

I’m unsure how your use case works exactly as it seems node1 might be idling for some time while node2 is still busy based on the data split? How are you measuring the power consumption and did you check if node1 performs any calculations or just waits and potentially spins?

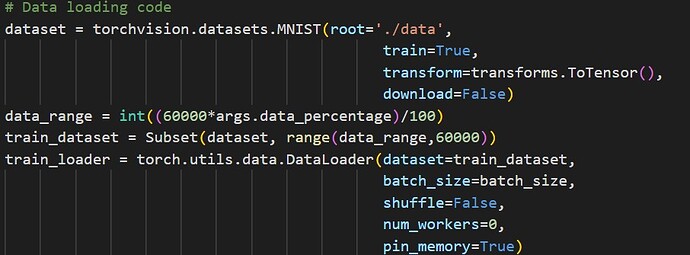

Thanks for the prompt reply. Please find more details here:

Dataset: MNIST (only train)

Model: Convolution networks with 2 convolution layers and a fully connected layer.

Distributed backend: NCCL

World size (number of processess participating in the job): 2

Device: GPU

Setup: two workers on two different machines. Each machine has one GPU respectively (NVIDIA Quadro RTX 8000).

CUDA version: 11.7

Batch size: 100

Used ‘nn.parallel.DistributedDataParallel’ to distribute the model over GPU on those two machine instances.

Join Context Manager (JCM):

Inside the join context manager wrapper, data is loaded into GPU, perform the forward and backward pass. According to the official documentation ([Distributed Training with Uneven Inputs Using the Join Context Manager — PyTorch Tutorials 2.0.1+cu117 documentation),

“]([Distributed Training with Uneven Inputs Using the Join Context Manager — PyTorch Tutorials 2.2.0+cu121 documentation](https://JCM PyTorch)) Join is a context manager to be used around your per-rank training loop to facilitate training with uneven inputs. The context manager allows the ranks that exhaust their inputs early (i.e. join early) to shadow the collective communications performed by those that have not yet joined.”

Power measurement: Collected power draw logs by using command ‘nvidia-smi’ in every 1s.

Experiment result:

For simplicity, let’s start with the uniform scenario where worker1 at machine1 is training over 50% of MNIST dataset (1-30K examples) and worker2 at machine2 is also training the other 50% (30001-60K examples). In this case, total power consumption and peak power consumption in each worker is alike.

Now, for scenario 2, worker1 has 10% of training data while worker2 has the reamining 90%. Here, for join context manager, worker1 waits after finish training its part and performing the shadow collective communication until worker2 finishes its training. According to my logs, total power draw in worker1 is 400W higher than worker2. Moreover, peak power draw in worker1 happens after finishing the training (while it is performing the shadow communication and waiting for worker2 to finish).

I experimented a lot of rounds with different data distribution in worker1 and worker2 and received similar behaviour. In PyTorch documentation, I didn’t any details about this power consumption behaviour. Is it due to how PyTorch implemented JCM ? Or should I change my deployment in some way – to rephrase what is the preferred way for distributed training when data is distributed unevenly among the workers?

This is my first post in PyTorch forum, so might miss details that you may need. Please let me know if you need any more details.

Hi, @ptrblck , I have shared details of my deployment. Can you please have a look?

Hi, @Monzurul_Amin , I’m interested in this issue. Would you like to share you code so I can produce this problem?