Hello there,

With the test code below, when the `loop` function returns to the `test` function, the GPU memory is still occupied by the Python process. I noticed this by watching `nvidia-smi -l 1`. I expected PyTorch to free the GPU memory when `loop` returns, so that other programs could use the GPU.

How can I clear the GPU memory after `loop` returns? Thanks in advance for any help you can provide!

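```python
from torchvision import models
import time
import gc
import torch


def loop():
    with torch.no_grad():
        # Create a ResNet-34 and move its parameters to the GPU.
        model = models.resnet34()
        model = model.cuda()
        # Drop the reference to the model so its tensors can be freed.
        del model
        model = None
        gc.collect()
        time.sleep(3)
        # Ask PyTorch to return its cached, unused GPU memory.
        torch.cuda.empty_cache()


def test():
    for i in range(10):
        time.sleep(5)
        print(f"the {i} loop")
        loop()


if __name__ == '__main__':
    test()
```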

PyTorch uses a caching allocator: instead of calling malloc/free for every tensor, it keeps freed memory in a cache and reuses it where possible, as described in the docs.

To release the cached memory so that other processes can use it, you can call `torch.cuda.empty_cache()`.

EDIT: Sorry, I just realized you are already doing this. I'll try to reproduce the observation.

EDIT2: The code seems to work fine; on my system only the CUDA context remains on the device.
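The caching behaviour described above can be illustrated with a deliberately simplified toy model in plain Python. This is not PyTorch's allocator (the real one splits blocks and keeps separate small and large pools, among other things); `ToyCachingAllocator` and its methods are hypothetical names, and sizes are abstract units rather than real GPU memory. It only shows the difference between *allocated* memory (backing live tensors) and *reserved* memory (held by the cache), and why emptying the cache can return only blocks that are no longer in use.

```python
class ToyCachingAllocator:
    """Hypothetical, simplified model of a caching GPU allocator.

    "Device" memory is just an integer counter; no real GPU is involved.
    """

    def __init__(self):
        self.free_blocks = []     # sizes of cached blocks not in use
        self.in_use = {}          # handle -> size of live blocks
        self.reserved_total = 0   # units obtained from the "driver"
        self._next_handle = 0

    def malloc(self, size):
        # Reuse a cached block of exactly this size if one exists,
        # otherwise request new memory from the "driver".
        if size in self.free_blocks:
            self.free_blocks.remove(size)
        else:
            self.reserved_total += size
        handle = self._next_handle
        self._next_handle += 1
        self.in_use[handle] = size
        return handle

    def free(self, handle):
        # Freed blocks go back to the cache, not to the "driver".
        self.free_blocks.append(self.in_use.pop(handle))

    def empty_cache(self):
        # Return only the unused cached blocks to the "driver".
        self.reserved_total -= sum(self.free_blocks)
        self.free_blocks.clear()

    def allocated(self):
        return sum(self.in_use.values())

    def reserved(self):
        return self.reserved_total


alloc = ToyCachingAllocator()
weights = alloc.malloc(80)          # e.g. a model's parameters
alloc.empty_cache()                 # no effect: the block is still in use
print(alloc.allocated(), alloc.reserved())  # 80 80

alloc.free(weights)                 # like `del model`: cached, not released
print(alloc.allocated(), alloc.reserved())  # 0 80

again = alloc.malloc(80)            # reuses the cached block; reserved stays 80
alloc.free(again)
alloc.empty_cache()                 # now the cached block is released
print(alloc.allocated(), alloc.reserved())  # 0 0
```

In the question's `loop()`, `del model` corresponds to `free()` and `torch.cuda.empty_cache()` to `empty_cache()`. The CUDA context falls outside this model entirely, since it is not memory managed by the caching allocator.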

Hello ptrblck,

When the program starts, it first runs `time.sleep(5)`:

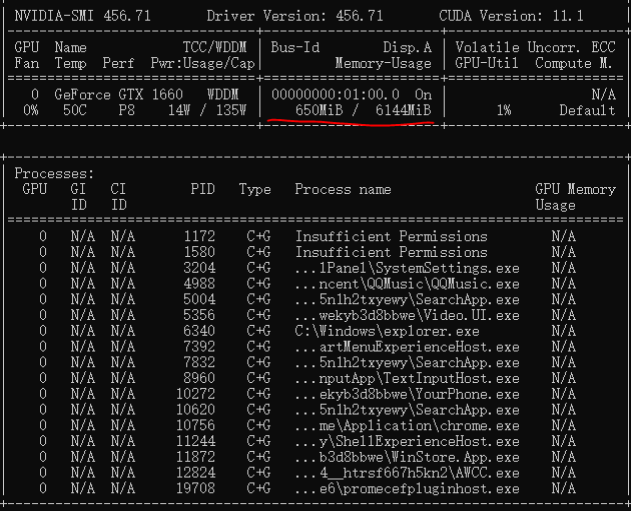

At that point the GPU state is as follows; it only takes 650MB:

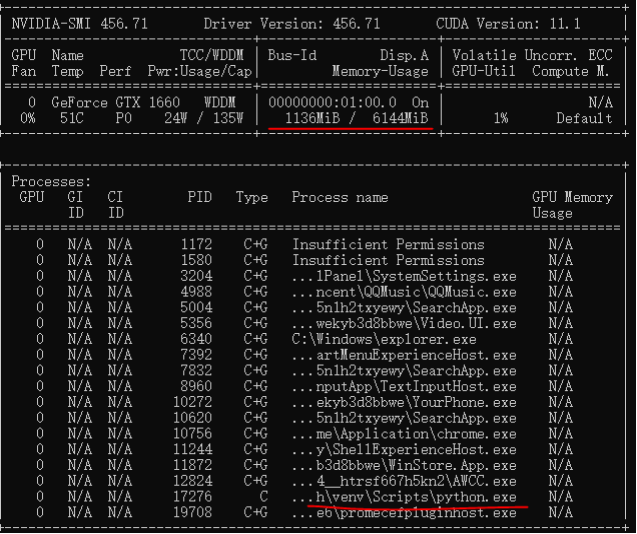

Inside the `loop` function, the GPU state is as follows; it takes 1136MiB:

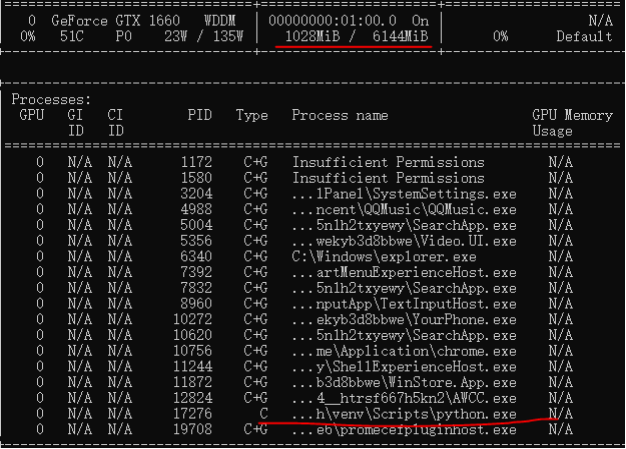

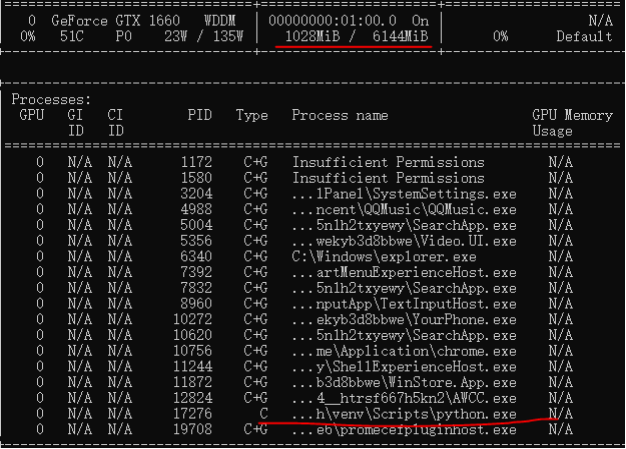

When `loop` returns to `test`, the Python process still resides in GPU memory, taking 1028MiB.

How can I release the GPU resources when `loop` returns, so that the Python process no longer holds any GPU memory? Thank you.

The remaining memory is used by the CUDA context (which cannot be freed unless you exit the script) and by any other processes shown in nvidia-smi. If you add `print(torch.cuda.memory_summary())` to the code before and after deleting the model and clearing the cache, you will see that no allocations remain afterwards:

```
# before
|===========================================================================|
|                  PyTorch CUDA memory summary, device ID 0                 |
|---------------------------------------------------------------------------|
|            CUDA OOMs: 0            |        cudaMalloc retries: 0         |
|===========================================================================|
|        Metric         | Cur Usage  | Peak Usage | Tot Alloc  | Tot Freed  |
|---------------------------------------------------------------------------|
| Allocated memory      |   86183 KB |   86183 KB |   86183 KB |        0 B |
|       from large pool |   80128 KB |   80128 KB |   80128 KB |        0 B |
|       from small pool |    6055 KB |    6055 KB |    6055 KB |        0 B |
|---------------------------------------------------------------------------|
| Active memory         |   86183 KB |   86183 KB |   86183 KB |        0 B |
|       from large pool |   80128 KB |   80128 KB |   80128 KB |        0 B |
|       from small pool |    6055 KB |    6055 KB |    6055 KB |        0 B |
|---------------------------------------------------------------------------|
| GPU reserved memory   |  110592 KB |  110592 KB |  110592 KB |        0 B |
|       from large pool |  102400 KB |  102400 KB |  102400 KB |        0 B |
|       from small pool |    8192 KB |    8192 KB |    8192 KB |        0 B |
|---------------------------------------------------------------------------|
| Non-releasable memory |   24409 KB |   26469 KB |   77787 KB |   53378 KB |
|       from large pool |   22272 KB |   24320 KB |   71296 KB |   49024 KB |
|       from small pool |    2137 KB |    2183 KB |    6491 KB |    4354 KB |
|---------------------------------------------------------------------------|
| Allocations           |        218 |        218 |        218 |          0 |
|       from large pool |         19 |         19 |         19 |          0 |
|       from small pool |        199 |        199 |        199 |          0 |
|---------------------------------------------------------------------------|
| Active allocs         |        218 |        218 |        218 |          0 |
|       from large pool |         19 |         19 |         19 |          0 |
|       from small pool |        199 |        199 |        199 |          0 |
|---------------------------------------------------------------------------|
| GPU reserved segments |          9 |          9 |          9 |          0 |
|       from large pool |          5 |          5 |          5 |          0 |
|       from small pool |          4 |          4 |          4 |          0 |
|---------------------------------------------------------------------------|
| Non-releasable allocs |          6 |          7 |          9 |          3 |
|       from large pool |          3 |          4 |          5 |          2 |
|       from small pool |          3 |          4 |          4 |          1 |
|===========================================================================|
```

```
# after
|===========================================================================|
|                  PyTorch CUDA memory summary, device ID 0                 |
|---------------------------------------------------------------------------|
|            CUDA OOMs: 0            |        cudaMalloc retries: 0         |
|===========================================================================|
|        Metric         | Cur Usage  | Peak Usage | Tot Alloc  | Tot Freed  |
|---------------------------------------------------------------------------|
| Allocated memory      |        0 B |   86183 KB |   86183 KB |   86183 KB |
|       from large pool |        0 B |   80128 KB |   80128 KB |   80128 KB |
|       from small pool |        0 B |    6055 KB |    6055 KB |    6055 KB |
|---------------------------------------------------------------------------|
| Active memory         |        0 B |   86183 KB |   86183 KB |   86183 KB |
|       from large pool |        0 B |   80128 KB |   80128 KB |   80128 KB |
|       from small pool |        0 B |    6055 KB |    6055 KB |    6055 KB |
|---------------------------------------------------------------------------|
| GPU reserved memory   |        0 B |  110592 KB |  110592 KB |  110592 KB |
|       from large pool |        0 B |  102400 KB |  102400 KB |  102400 KB |
|       from small pool |        0 B |    8192 KB |    8192 KB |    8192 KB |
|---------------------------------------------------------------------------|
| Non-releasable memory |        0 B |   45102 KB |  134589 KB |  134589 KB |
|       from large pool |        0 B |   39552 KB |  123136 KB |  123136 KB |
|       from small pool |        0 B |    6851 KB |   11453 KB |   11453 KB |
|---------------------------------------------------------------------------|
| Allocations           |          0 |        218 |        218 |        218 |
|       from large pool |          0 |         19 |         19 |         19 |
|       from small pool |          0 |        199 |        199 |        199 |
|---------------------------------------------------------------------------|
| Active allocs         |          0 |        218 |        218 |        218 |
|       from large pool |          0 |         19 |         19 |         19 |
|       from small pool |          0 |        199 |        199 |        199 |
|---------------------------------------------------------------------------|
| GPU reserved segments |          0 |          9 |          9 |          9 |
|       from large pool |          0 |          5 |          5 |          5 |
|       from small pool |          0 |          4 |          4 |          4 |
|---------------------------------------------------------------------------|
| Non-releasable allocs |          0 |          9 |         16 |         16 |
|       from large pool |          0 |          4 |          9 |          9 |
|       from small pool |          0 |          6 |          7 |          7 |
|===========================================================================|
```
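To check the same numbers programmatically instead of reading the printed table, one option is `torch.cuda.memory_stats()`, which returns a flat dict of allocator counters. The sketch below assumes the keys `allocated_bytes.all.current` and `reserved_bytes.all.current` (please verify them against your PyTorch version). To keep it runnable without a GPU, the hypothetical helper `cache_report` takes the dict as an argument, and the example feeds it the "before" values from the summary, assuming the summary's "KB" means 1024 bytes.

```python
def to_mib(num_bytes):
    """Convert a byte count to MiB (1 MiB = 1024 * 1024 bytes)."""
    return num_bytes / (1024 * 1024)


def cache_report(stats):
    """Summarize a dict shaped like torch.cuda.memory_stats() output.

    Returns allocated, reserved, and cached-but-unused memory in MiB.
    Assumes the 'allocated_bytes.all.current' and
    'reserved_bytes.all.current' keys; missing keys count as 0.
    """
    allocated = stats.get("allocated_bytes.all.current", 0)
    reserved = stats.get("reserved_bytes.all.current", 0)
    return {
        "allocated_mib": to_mib(allocated),
        "reserved_mib": to_mib(reserved),
        # Held by the cache but not backing any live tensor. Only the part
        # sitting in completely unused segments can be released by
        # torch.cuda.empty_cache().
        "cached_unused_mib": to_mib(reserved - allocated),
    }


# "Before" values from the summary above (assuming 1 KB = 1024 bytes).
before = {
    "allocated_bytes.all.current": 86183 * 1024,
    "reserved_bytes.all.current": 110592 * 1024,
}
report = cache_report(before)
print(report["reserved_mib"])  # 108.0
# On a machine with a GPU: cache_report(torch.cuda.memory_stats())
```

The 108 MiB of reserved memory matches the drop from 1136MiB to 1028MiB that nvidia-smi showed in the question. Assuming the allocator reserves the same amount on both machines, this is consistent with `empty_cache()` having released everything PyTorch reserved, leaving the CUDA context as the remaining usage.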