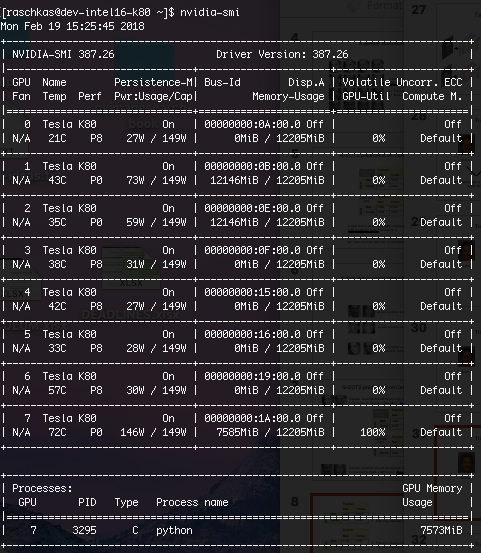

Hm, that’s interesting! There are 8 Tesla K80s in that rack, and I just checked: the two where I “crashed” the PyTorch code (via RuntimeError: cuda runtime error (2) : out of memory) still have that memory occupied (GPUs 1 and 2; GPU 7 is the one I am currently using).
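
For anyone who wants to check per-GPU memory from a script instead of reading the `nvidia-smi` table by eye, here is a minimal sketch. It assumes `nvidia-smi` is on the PATH and uses its `--query-gpu` CSV output; the helper names `parse_gpu_memory` and `query_gpu_memory` are made up for illustration and are not part of PyTorch or any NVIDIA library.

```python
import subprocess


def parse_gpu_memory(csv_text):
    """Parse 'index, used, total' CSV lines (values in MiB) into dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        rows.append(
            {"index": int(index), "used_mib": int(used), "total_mib": int(total)}
        )
    return rows


def query_gpu_memory():
    """Ask nvidia-smi for per-GPU memory usage (assumes it is installed)."""
    output = subprocess.check_output(
        [
            "nvidia-smi",
            "--query-gpu=index,memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        text=True,
    )
    return parse_gpu_memory(output)
```

Calling `query_gpu_memory()` on the machine with the GPUs returns one entry per device, so a GPU that shows a large `used_mib` while nothing of yours is running on it is likely still held by a leftover process.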
